Tantalus (Leamer)


7/28/2019 Tantalus (Leamer)


Journal of Economic Perspectives, Volume 24, Number 2, Spring 2010, Pages 31-46

Tantalus on the Road to Asymptopia

Edward E. Leamer

Edward E. Leamer is Professor of Economics, Management and Statistics, University of California at Los Angeles, Los Angeles, California. His e-mail address is [email protected] (anderson.ucla.edu). doi: 10.1257/jep.24.2.31

My first reaction to "The Credibility Revolution in Empirical Economics," authored by Joshua D. Angrist and Jörn-Steffen Pischke, was: Wow! This paper makes a stunningly good case for relying on purposefully randomized or accidentally randomized experiments to relieve the doubts that afflict inferences from nonexperimental data. On further reflection, I realized that I may have been overcome with irrational exuberance. Moreover, with this great honor bestowed on my "con" article, I couldn't easily throw this child of mine overboard.

We economists trudge relentlessly toward Asymptopia, where data are unlimited and estimates are consistent, where the laws of large numbers apply perfectly and where the full intricacies of the economy are completely revealed. But it's a frustrating journey, since, no matter how far we travel, Asymptopia remains infinitely far away. Worst of all, when we feel pumped up with our progress, a tectonic shift can occur, like the Panic of 2008, making it seem as though our long journey has left us disappointingly close to the State of Complete Ignorance whence we began.

The pointlessness of much of our daily activity makes us receptive when the Priests of our tribe ring the bells and announce a shortened path to Asymptopia. (Remember the Cowles Foundation offering asymptotic properties of simultaneous equations estimates and structural parameters?) We may listen, but we don't hear, when the Priests warn that the new direction is only for those with Faith, those with complete belief in the Assumptions of the Path. It often takes years down the Path, but sooner or later, someone articulates the concerns that gnaw away in each of us and asks if the Assumptions are valid. (T. C. Liu (1960) and Christopher Sims (1980) were the ones who proclaimed that the Cowles Emperor had no clothes.) Small seeds of doubt in each of us inevitably turn to despair and we abandon that direction and seek another.

Two of the latest products-to-end-all-suffering are nonparametric estimation and consistent standard errors, which promise results without assumptions, as if we were already in Asymptopia where data are so plentiful that no assumptions are needed. But like procedures that rely explicitly on assumptions, these new methods work well in the circumstances in which explicit or hidden assumptions hold tolerably well and poorly otherwise. By disguising the assumptions on which nonparametric methods and consistent standard errors rely, the purveyors of these methods have made it impossible to have an intelligible conversation about the circumstances in which their gimmicks do not work well and ought not to be used. As for me, I prefer to carry parameters on my journey so I know where I am and where I am going, not travel stoned on the latest euphoria drug.

This is a story of Tantalus, grasping for knowledge that remains always beyond reach.
In Greek mythology Tantalus was favored among all mortals by being asked to dine with the gods. But he misbehaved: some say by trying to take divine food back to the mortals, some say by inviting the gods to a dinner for which Tantalus boiled his son and served him as the main dish. Whatever the etiquette faux pas, Tantalus was punished by being immersed up to his neck in water. When he bowed his head to drink, the water drained away, and when he stretched up to eat the fruit hanging above him, wind would blow it out of reach. It would be much healthier for all of us if we could accept our fate, recognize that perfect knowledge will be forever beyond our reach and find happiness with what we have. If we stopped grasping for the apple of Asymptopia, we would discover that our pool of Tantalus is full of small but enjoyable insights and wisdom.

Can we economists agree that it is extremely hard work to squeeze truths from our data sets and what we genuinely understand will remain uncomfortably limited? We need words in our methodological vocabulary to express the limits. We need sensitivity analyses to make those limits transparent. Those who think otherwise should be required to wear a scarlet-letter O around their necks, for "overconfidence." Angrist and Pischke obviously know this. Their paper is peppered with concerns about quasi-experiments and with criticisms of instrumental variables thoughtlessly chosen. I think we would make progress if we stopped using the words "instrumental variables" and used instead "surrogates," meaning surrogates for the experiment that we wish we could have conducted. The psychological power of the vocabulary requires a surrogate to be chosen with much greater care than an instrument.

As Angrist and Pischke persuasively argue, either purposefully randomized experiments or accidentally randomized natural experiments can be extremely helpful, but Angrist and Pischke seem to me to overstate the potential benefits of the approach. Since hard and inconclusive thought is needed to transfer the results learned from randomized experiments into other domains, there must therefore remain uncertainty and ambiguity about the breadth of application of any findings from randomized experiments. For example, how does Card's (1990) study of the effect on the Miami labor market of the Mariel boatlift of 125,000 Cuban refugees in 1980 inform us of the effects of a 2000 mile wall along the southern border of the United States? Thoughts are also needed to justify the choice of instrumental variables, and a critical element of doubt and ambiguity necessarily afflicts any instrumental variables estimate. (You and I know that truly consistent estimators are imagined, not real.) Angrist and Pischke understand this. But their students and their students' students may come to think that it is enough to wave a clove of garlic and chant "randomization" to solve all our problems just as an earlier cohort of econometricians have acted as if it were enough to chant "instrumental variable."

I will begin this comment with some thoughts about the inevitable limits of randomization, and the need for sensitivity analysis in this area, as in all areas of applied empirical work. To be provocative, I will argue here that the financial catastrophe that we have just experienced powerfully illustrates a reason why extrapolating from natural experiments will inevitably be hazardous. The misinterpretation of historical data that led rating agencies, investors, and even myself to guess that home prices would decline very little and default rates would be tolerable even in a severe recession should serve as a caution for all applied econometrics. I will also offer some thoughts about how the difficulties of applied econometric work cannot be evaded with econometric innovations, offering some under-recognized difficulties with instrumental variables and robust standard errors as examples. I conclude with some comments about the shortcomings of an experimentalist paradigm as applied to macroeconomics, and with some warnings about the willingness of applied economists to apply push-button methodologies without sufficient hard thought regarding their applicability and shortcomings.

Randomization Is Not Enough

Angrist and Pischke offer a compelling argument that randomization is one large step in the right direction. Which it is! But like all the other large steps we have already taken, this one doesn't get us where we want to be.

In addition to randomized treatments, most scientific experiments also have controls over the important confounding effects. These controls are needed to improve the accuracy of the estimate of the treatment effect and also to determine clearly the range of circumstances over which the estimate applies. (In a laboratory vacuum, we would find that a feather falls as fast as a bowling ball. In the real world with air, wind, and humidity, all bets are off, pending further study.)

In place of experimental controls, economists can, should, and usually do include control variables in their estimated equations, whether the data are nonexperimental or experimental. To make my point about the effect of these controls it will be helpful to refer to the prototypical model:

$$y_t = \alpha + (\beta_0 + \beta_1' z_t)\, x_t + \gamma' w_t + \varepsilon_t,$$

where $x$ is the treatment, $y$ the response, $z$ is a set of interactive confounders, $w$ is a set of additive confounders, where $\varepsilon$ stands for all the other unnamed, unmeasured effects which we sheepishly assume behaves like a random variable, distributed independently of the observables. Here $(\beta_0 + \beta_1' z_t)$ is the variable treatment effect that we wish to estimate. One set of problems is caused by the additive confounding variables $w$, which can be uncomfortably numerous. Another set of problems is caused by the interactive confounding variables $z$, which may include features of the experimental design as well as characteristics of the subjects.
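To make the roles of the terms in the prototypical model concrete, it can be written as a small function. This is only an illustrative sketch: the function names and every numeric value below are assumptions chosen for the example, not values from the article, and the confounders $z$ and $w$ are treated as scalars for simplicity.

```python
def treatment_effect(z, beta0, beta1):
    """Variable treatment effect (beta0 + beta1 * z) for a scalar confounder z."""
    return beta0 + beta1 * z


def response(x, z, w, eps, alpha, beta0, beta1, gamma):
    """Response y from the prototypical model with scalar z and w."""
    return alpha + treatment_effect(z, beta0, beta1) * x + gamma * w + eps


# Hypothetical parameter values, chosen only for illustration.
params = dict(alpha=1.0, beta0=0.5, beta1=2.0, gamma=3.0)

# Holding w and eps fixed, the effect of switching x from 0 to 1 depends on z.
effect_low_z = response(1, 0.0, 0.7, 0.0, **params) - response(0, 0.0, 0.7, 0.0, **params)
effect_high_z = response(1, 2.0, 0.7, 0.0, **params) - response(0, 2.0, 0.7, 0.0, **params)
print(effect_low_z, effect_high_z)
```

The additive confounder `w` cancels out of each difference, while the interactive confounder `z` changes the size of the effect itself, which is the distinction the two "sets of problems" rest on.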

Consider first the problem of the additive confounders. We have been taught that experimental randomization of the treatment eliminates the requirement to include additive controls in the equation because the correlation between the controls and the treatment is zero by design and regression estimates with or without the controls are unbiased, indeed identical. That's true in Asymptopia, but it's not true here in the Land of the Finite Sample where correlation is an ever-present fact of life and where issues of sensitivity of conclusions to assumptions can arise even with randomized treatments if the correlations between the randomized treatment and the additive confounders, by chance, are high enough.

Indeed, if the number of additive confounding variables is equal to or larger than the number of observations, any treatment $x$, randomized or not, will be perfectly collinear with the confounding variables (the undersized sample problem). Then, to estimate the treatment effect, we would need to make judgments about which of the confounding variables to exclude. That would ordinarily require a sensitivity analysis, unless through Divine revelation economists were told exactly which controls to include and which to exclude. Though the number of randomized trials may be large, an important sensitivity question can still arise because the number of confounding variables can be increased without limit by using lagged values and nonlinear forms. In other words, if you cannot commit to some notion of smoothness of the functional form of the confounders and some notion of limited or smooth time delays in response, you will not be able to estimate the treatment effect even with a randomized experiment, unless experimental controls keep the confounding variables constant or Divine inspiration allows you to omit some of the variables.

You are free to dismiss the preceding paragraph as making a mountain out of a molehill. By reducing the realized correlation between the treatment and the controls, randomization allows a larger set of additive control variables to be included before we confront the sensitivity issues caused by collinearity. For that reason, though correlation between the treatment and the confounders with nonexperimental data is a huge problem, it is much less important when the treatment is randomized. With that problem neutralized, concern shifts elsewhere.
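The finite-sample point about chance correlation can be illustrated with a short simulation. The sketch below is an assumption-laden illustration, not anything from the article: the sample sizes, the number of replications, the balanced randomization scheme, and the function names are all invented for the example. It draws a randomized treatment and an independent confounder many times and reports the average absolute sample correlation between them.

```python
import math
import random


def sample_correlation(a, b):
    """Pearson sample correlation between two equal-length lists."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def mean_abs_chance_correlation(n, replications, rng):
    """Average |corr(x, w)| when x is randomized independently of w."""
    total = 0.0
    for _ in range(replications):
        # Balanced randomization: half treated, half control, in random order.
        x = [1.0] * (n // 2) + [0.0] * (n - n // 2)
        rng.shuffle(x)
        # Additive confounder drawn independently of the treatment.
        w = [rng.gauss(0.0, 1.0) for _ in range(n)]
        total += abs(sample_correlation(x, w))
    return total / replications


rng = random.Random(0)  # fixed seed so the illustration is repeatable
small = mean_abs_chance_correlation(n=10, replications=500, rng=rng)
large = mean_abs_chance_correlation(n=1000, replications=500, rng=rng)
print(f"n=10: {small:.3f}   n=1000: {large:.3f}")
```

In the small sample the realized correlation is typically far from the zero that randomization delivers "by design"; it shrinks only as the sample grows.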


The big problem with randomized experiments is not additive confounders; it's the interactive confounders. This is the heterogeneity issue that especially concerns Heckman (1992) and Deaton (2008) who emphasized the need to study "causal mechanisms," which I am summarizing in terms of the interactive $z$ variables. Angrist and Pischke completely understand this point, but they seem inappropriately dismissive when they accurately explain "extrapolation of causal effects to new settings is always speculative," which is true, but the extrapolation speculation is more transparent and more worrisome in the experimental case than in the nonexperimental case.

After all, in nonexperimental nonrandomized settings, when judicious choice of additive confounders allows one to obtain just about any estimate of the treatment effect, there is little reason to worry about extrapolation of causal effects to new settings. What's to extrapolate anyway? Our lack of knowledge? Greater concern about extrapolation is thus an indicator of the progress that comes from randomization.

When the randomization is accidental, we may pretend that the instrumental variables estimator is consistent, but we all know that the assumptions that justify that conclusion cannot possibly hold exactly. Those who use instrumental variables would do well to anticipate the inevitable barrage of questions about the appropriateness of their instruments. Ever-present asymptotic bias casts a large dark shadow on instrumental variables estimates and is what limits the applicability of the estimate even to the setting that is observed, not to mention extrapolation to new settings. In addition, small sample bias of instrumental variables estimators, even in the consistent case, is a huge neglected problem with practice, made worse by the existence of multiple weak instruments. This seems to be one of the points of the Angrist and Pischke paper: purposeful randomization is better than accidental randomization.

But when the randomization is purposeful, a whole new set of issues arises, namely experimental contamination, which is much more serious with human subjects in a social system than with chemicals mixed in beakers or parts assembled into mechanical structures. Anyone who designs an experiment in economics would do well to anticipate the inevitable barrage of questions regarding the valid transference of things learned in the lab (one value of $z$) into the real world (a different value of $z$).
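The earlier remark about the small-sample behavior of instrumental variables estimators with weak instruments can be illustrated with a simulation. The sketch below is hypothetical throughout: it uses a single instrument (not the multiple-instrument case mentioned in the text), an invented data-generating process with a true effect of 1, and made-up instrument strengths. The instrument is valid by construction, so any trouble comes from the finite sample alone.

```python
import random


def iv_estimate(instrument, x, y):
    """Single-instrument IV estimate: cov(instrument, y) / cov(instrument, x)."""
    n = len(y)
    mean_i = sum(instrument) / n
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov_iy = sum((a - mean_i) * (b - mean_y) for a, b in zip(instrument, y))
    cov_ix = sum((a - mean_i) * (b - mean_x) for a, b in zip(instrument, x))
    return cov_iy / cov_ix


def simulate_iv(strength, n, replications, rng, true_effect=1.0):
    """Sorted IV estimates across repeated samples of size n."""
    draws = []
    for _ in range(replications):
        instrument = [rng.gauss(0.0, 1.0) for _ in range(n)]
        hidden = [rng.gauss(0.0, 1.0) for _ in range(n)]  # unobserved confounder
        x = [strength * a + h + rng.gauss(0.0, 1.0) for a, h in zip(instrument, hidden)]
        y = [true_effect * xi + h + rng.gauss(0.0, 1.0) for xi, h in zip(x, hidden)]
        draws.append(iv_estimate(instrument, x, y))
    return sorted(draws)


def interquartile_range(sorted_draws):
    """Rough interquartile range from an already sorted list."""
    m = len(sorted_draws)
    return sorted_draws[(3 * m) // 4] - sorted_draws[m // 4]


rng = random.Random(1)  # fixed seed so the illustration is repeatable
strong = simulate_iv(strength=1.0, n=100, replications=400, rng=rng)
weak = simulate_iv(strength=0.05, n=100, replications=400, rng=rng)
print("strong: median", strong[len(strong) // 2], "IQR", interquartile_range(strong))
print("weak:   median", weak[len(weak) // 2], "IQR", interquartile_range(weak))
```

With the strong instrument the estimates cluster near the true effect; with the weak one they are far more dispersed, and their center need not sit near the true value even though the estimator is consistent.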

With interactive confounders explicitly included, the overall treatment effect $\beta_0 + \beta_1' z_t$ is not a number but a variable that depends on the confounding effects. Absent observation of the interactive confounding effects $z$, what is estimated is some kind of average treatment effect which is called by Imbens and Angrist (1994) a "Local Average Treatment Effect," which is a little like the lawyer who explained that when he was a young man he lost many cases he should have won but as he grew older he won many that he should have lost, so that on the average justice was done. In other words, if you act as if the treatment effect is a random variable by substituting $\beta_t$ for $\beta_0 + \beta_1' z_t$, the notation inappropriately relieves you of the heavy burden of considering what are the interactive confounders and finding some way to measure them. Less elliptically, absent observation of $z$, the estimated treatment effect should be transferred only into those settings in which the confounding interactive variables have values close to the mean values in the experiment. If little thought has gone into identifying these possible confounders, it seems probable that little thought will be given to the limited applicability of the results in other settings. This is the error made by the bond rating agencies in the recent financial crash: they transferred findings from one historical experience to a domain in which they no longer applied because, I will suggest, social confounders were not included. More on this below.
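The transfer problem can be made concrete with a simulated randomized experiment in which the interactive confounder is never observed. Everything in this sketch is an assumption made for illustration: the parameter values, the distribution of the confounder, the "new setting" value of 5, and the function name. The additive confounders are omitted to keep the example short.

```python
import random


def difference_in_means(x, y):
    """Mean response of treated units minus mean response of controls."""
    treated = [yi for xi, yi in zip(x, y) if xi == 1]
    control = [yi for xi, yi in zip(x, y) if xi == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


# Hypothetical parameters for y = alpha + (beta0 + beta1 * z) * x + eps.
alpha, beta0, beta1 = 0.0, 1.0, 2.0
rng = random.Random(42)  # fixed seed so the illustration is repeatable
n = 20000

# Experimental setting: z varies around 1 but is not recorded by the analyst.
z = [rng.gauss(1.0, 0.5) for _ in range(n)]
x = [1] * (n // 2) + [0] * (n // 2)
rng.shuffle(x)  # purposeful randomization of the treatment
y = [alpha + (beta0 + beta1 * zi) * xi + rng.gauss(0.0, 1.0) for zi, xi in zip(z, x)]

estimated_effect = difference_in_means(x, y)
effect_at_experiment_mean = beta0 + beta1 * (sum(z) / n)
effect_in_new_setting = beta0 + beta1 * 5.0  # a domain where z is 5, not about 1

print("estimated:", estimated_effect)
print("true effect at the experiment's mean z:", effect_at_experiment_mean)
print("true effect in the new setting:", effect_in_new_setting)
```

The experiment recovers the effect at the mean of `z` in the experimental population quite well, yet that number says little about a setting where `z` takes a very different value.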

Sensitivity Analysis and Sensitivity Conversations are What We Need

I thus stand by the view in my 1983 essay that econometric theory promises more than it can deliver, because it requires a complete commitment to assumptions that are actually only half-heartedly maintained. The only way to create credible inferences with doubtful assumptions is to perform a sensitivity analysis that separates the fragile inferences from the sturdy ones: those that depend substantially on the doubtful assumptions and those that do not. Since I wrote my "con in econometrics" challenge much progress has been made in economic theory and in econometric theory and in experimental design, but there has been little progress technically or procedurally on this subject of sensitivity analyses in econometrics. Most authors still support their conclusions with the results implied by several models, and they leave the rest of us wondering how hard they had to work to find their favorite outcomes and how sure we have to be about the instrumental variables assumptions with accidentally randomized treatments and about the extent of the experimental bias with purposefully randomized treatments. It's like a court of law in which we hear only the experts on the plaintiff's side, but are wise enough to know that there are abundant arguments for the defense.

I have been making this point in the econometrics sphere since before I wrote Specification Searches: Ad Hoc Inference with Nonexperimental Data in 1978.¹ That book was stimulated by my observation of economists at work who routinely pass their data through the filters of many models and then choose a few results for reporting purposes. The range of models economists are willing to explore creates ambiguity in the inferences that can properly be drawn from our data, and I have been recommending mathematical methods of sensitivity analysis that are intended to determine the limits of that ambiguity.

1 Parenthetically, if you are alert, you might have been unsettled by the use of the word "with" in my title: Ad Hoc Inference with Nonexperimental Data, since inferences are made with tools but from data. That is my very subtle way of suggesting that knowledge is created by an interactive exploratory process, quite unlike the preprogrammed estimation dictated by traditional econometric theory.


The language that I used to make the case for sensitivity analysis seems not to have penetrated the consciousness of economists. What I called "extreme bounds analysis" in my 1983 essay is a simple example that is the best-known approach, though poorly understood and inappropriately applied. Extreme bounds analysis is not "an ad hoc but intuitive approach," as described by Angrist and Pischke. It is a solution to a clearly and precisely defined sensitivity question, which is to determine the range of estimates that the data could support given a precisely defined range of assumptions about the prior distribution. It's a correspondence between the assumption space and the estimation space. Incidentally, if you could see the wisdom in finding the range of estimates that the data allow, I would work to provide tools that identify the range of t-values, a more important measure of the fragility of the inferences.

A prior distribution is a foreign concept for most economists, and I tried to create a bridge between the logic of the analysis and its application by expressing the bounds in language that most economists could understand. Here it is: Include in the equation the treatment variable and a single linear combination of the additive controls. Then find the linear combination of controls that provides the greatest estimated treatment effect and the linear combination that provides the smallest estimated treatment effect. That corresponds to the range of estimates that can be obtained when it is known that the controls are doubtful (zero being the most likely estimate, a priori) but there is complete ambiguity about the probable importance of the variables, arbitrary scales, and arbitrary coordinate systems. What is ad hoc are the follow-on methods, for example, computing the standard errors of the bounds or reporting a distribution of estimates as in Sala-i-Martin (1997).

A culture that insists on statistically significant estimates is not naturally receptive to another reason our data are uninformative (too much dependence on arbitrary assumptions). One reason these methods are rarely used is their honesty seems destructive; or, to put it another way, a fanatical commitment to fanciful formal models is often needed to create the appearance of progress. But we need to change the culture and regard the finding of "no persuasive evidence in these data" on the same footing as a statistically significant and sturdy estimate. Keep in mind that the sensitivity correspondence between assumptions and inferences can go in either direction. We can ask what set of inferences corresponds to a particular set of assumptions, but we can also ask what assumptions are needed to support a hoped-for inference. That is exactly what an economic theorem does. The intellectual value of the Factor Price Equalization Theorem does not derive from its truthfulness; its value comes from the fact that it provides a minimal set of assumptions that imply Factor Price Equalization, thus focusing attention on why factor prices are not equalized.

To those of you who do data analysis, I thus pose two questions that I think every empirical enterprise should be able to answer: What feature of the data leads to that conclusion? What set of assumptions is essential to support that inference?
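
The recipe for the bounds given earlier in this section (treatment variable plus a combination of doubtful controls, then the largest and smallest estimated treatment effect) can be illustrated with a deliberately simplified sketch. The full analysis searches over every linear combination of the controls; the code below only scans subsets of the controls, and each subset regression is just one particular linear combination, so the range it reports is at best an inner approximation of the extreme bounds. The simulated data, the coefficient values, and the helper names `treatment_coef` and `subset_bounds` are hypothetical and appear nowhere in the essay.

```python
import itertools
import numpy as np


def treatment_coef(y, x, controls):
    """OLS coefficient on x when y is regressed on [1, x, controls]."""
    n = len(y)
    cols = [np.ones(n), x] + [controls[:, j] for j in range(controls.shape[1])]
    X = np.column_stack(cols)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[1]


def subset_bounds(y, x, Z):
    """Smallest and largest treatment estimates over all subsets of Z's columns.

    This is NOT the full search over linear combinations of the controls;
    it only visits the 2**k subset regressions (an assumption made here
    to keep the illustration short).
    """
    k = Z.shape[1]
    estimates = []
    for r in range(k + 1):
        for subset in itertools.combinations(range(k), r):
            estimates.append(treatment_coef(y, x, Z[:, list(subset)]))
    return min(estimates), max(estimates)


# Hypothetical simulated data: the first control is correlated with the
# treatment and also affects the outcome, so dropping it shifts the estimate.
rng = np.random.default_rng(0)
n = 200
Z = rng.normal(size=(n, 3))
x = 0.8 * Z[:, 0] + rng.normal(size=n)
y = 1.0 * x + 0.5 * Z[:, 0] + rng.normal(size=n)

lo, hi = subset_bounds(y, x, Z)
print(f"treatment estimate ranges from {lo:.3f} to {hi:.3f}")
```

A wide gap between the two printed numbers would mark the inference as fragile with respect to the choice of controls; a narrow gap would mark it as sturdy, at least within the subsets examined.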


The Troubles on Wall Street and Three-Valued Logic

With the ashes of the mathematical models used to rate mortgage-backed securities still smoldering on Wall Street, now is an ideal time to revisit the sensitivity issues. Justin Fox (2009) suggests in his title The Myth of the Rational Market, A History of Risk, Reward, and Delusion on Wall Street that we have been making a modeling error and that the problems lie in the assumption of rational actors, which presumably can be remedied by business-as-usual after adding a behavioral variable or two into the model. I think the roots of the problem are deeper, calling for a change in the way we do business and calling for a book that might be titled: The Myth of the Data Generating Process: A History of Delusion in Academia. Rationality of financial markets is a pretty straightforward consequence of the assumption that financial returns are drawn from a data generating process whose properties are apparent to experienced investors and econometricians, after studying the historical data. If we don't know the data generating process, then the efficient markets edifice falls apart. Even simple-minded finance ideas, like the benefits from diversification, become suspect if we cannot reliably assess predictive means, variances, and covariances.

But it isn't just finance that rests on this myth of a data-generating process. It is the whole edifice of empirical economics. Let's face it. The evolving, innovating, self-organizing, self-healing human system we call the economy is not well described by a fictional data-generating process. The point of the sensitivity analyses that I have been advocating begins with the admission that the historical data are compatible with countless alternative data-generating models. If there is one, the best we can do is to get close; we are never going to know it.

Confronted with our collective colossal failure to anticipate the problems with mortgage-backed securities, we economists have been stampeding shamelessly back to Keynesian thinking about macroeconomics, scurrying to reread Keynes' (1936) General Theory of Employment, Interest and Money. We would do well to go back a little further in time to 1921 when both Keynes' Treatise on Probability and Frank Knight's Risk, Uncertainty and Profit were published. Both of these books are about the myth of the data generating process. Both deal with the limits of the expected utility maximization paradigm. Both serve as foundations for the arguments in favor of sensitivity analysis in my con paper. Both are about three-valued logic.

Here is how three-valued logic works. Suppose you can confidently determine that it is a good idea to bring your umbrella if the chance of rain is 10 percent or higher, but carrying the umbrella involves more cost than expected benefit if the chance of rain is less. When the data point clearly to a probability either in excess of 10 percent or less than 10 percent, then we are in a world of Knightian risk in which the decision can be based on expected utility maximization. But suppose there is one model that suggests the probability is 15 percent while another equally good model suggests the probability is 5 percent. Then we are in a world of Knightian


uncertainty in which expected utility maximization doesn't produce a decision. When any number between 0.05 and 0.15 is an equally good² assessment of the chance of rain, we are dealing with epistemic probabilities that are not numbers but intervals, in the spirit of Keynes (1921). While the decision is two-valued (you either take your umbrella or you don't), the state of mind is three-valued: yes (take the umbrella), no (leave it behind), or "I don't know." The point of adopting three-valued logic like Keynes and Knight is to encourage us to think clearly about the limits of our knowledge and the limits of the expected utility maximization paradigm. With all of the focus on decision making with two-valued logic, the profession has done precious little work on decision under ambiguity and three-valued logic.³

In other words, when I asked us to "take the con out of econometrics" I was only saying the obvious: If the range of inferences that can reasonably be supported by the data we have is too wide to point to one and only one decision, we need to admit that the data leave us confused. Thus my contribution to econometrics has been confusion! You, though, refuse to admit that you are just as confused as I.

Three-valued logic seems especially pertinent when extending the results of experiments into other domains; there is a lot of "we don't know" there. Even in the case of mechanical systems, with statistical properties much better understood than economics/financial human systems, it is not assumed that models will work under extreme conditions. Engineers may design aircraft that according to their computer models can fly, but until real airplanes are actually tested in normal and stressful conditions, the aircraft are not certified to carry passengers. Indeed, Boeing's composite plastic 787 Dreamliner has been suffering production delays because wing damage has shown up when the stress on the wings was well below the load the wings must bear to be federally certified to carry passengers (Gates, 2009). Too bad we couldn't have stress-tested those mortgage-backed securities before we started flying around the world with them. Too bad the rating agencies did not use three-valued logic with another bond rating: AAA-H, meaning hypothetically AAA according to a model but not yet certified as recession-proof.

There is of course more than one reason why mortgage-backed securities didn't fly when the weather got rough, but it will suit my purposes here if I make the argument that it was an inappropriate extrapolation of data from one accidental experiment to a different setting that is at the root of the problem. To make this argument, it is enough to look at the data in Los Angeles. Don't expect a full econometric housing model. It's only a provocative illustration.

Figure 1 contrasts nonfarm payrolls in Los Angeles and in the United States overall during the last three recessions. In 2001 and in 2008, the L.A. job market

2 Please don't think I am assigning a uniform distribution over this interval, since if that were the case, the probability would be precisely equal to the mean: 0.10.
3 Bewley (1986), Klibanoff, Marinacci, and Mukerji (2005), and Hanany and Klibanoff (2009) and references therein are exceptions.
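
The umbrella example, with its interval of equally good probabilities, can be written down as a small decision rule. The sketch below is one possible reading, not a method taken from the essay: it answers "yes" only when every probability in the interval is at or above the 10 percent threshold, "no" only when every probability is below it, and "don't know" otherwise. The function name `umbrella_decision` and its parameters are hypothetical.

```python
def umbrella_decision(p_low, p_high, threshold=0.10):
    """Three-valued answer given an interval [p_low, p_high] for the chance of rain.

    Assumption: the umbrella is worth carrying exactly when the chance of
    rain is at least `threshold`, as in the essay's example.
    """
    if p_low > p_high:
        raise ValueError("p_low must not exceed p_high")
    if p_low >= threshold:
        return "yes"         # every admissible probability favors the umbrella
    if p_high < threshold:
        return "no"          # every admissible probability favors leaving it
    return "don't know"      # the interval straddles the threshold


# A single model at 15 percent, a single model at 5 percent,
# and two equally good models that disagree.
for interval in [(0.15, 0.15), (0.05, 0.05), (0.05, 0.15)]:
    print(interval, "->", umbrella_decision(*interval))
```

Collapsing the interval to its midpoint would turn the third case back into an ordinary expected-utility calculation, which is the move the footnote on the uniform distribution warns against.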


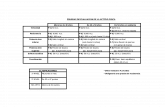

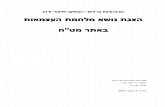

declined in parallel with the U.S. decline, but the recession of 1990-91 was especially severe in Los Angeles. U.S. payroll jobs dropped by 1.5 percent during this recession, while jobs in the Los Angeles metropolitan statistical area declined by 11 percent and did not return to their 1990 levels until 1999. This was a natural experiment known as the end of the Cold War, with Los Angeles treated and with, for example, San Francisco in the control group. (The 2001 recession had the treatment reversed, with San Francisco treated to a tech bust but Los Angeles in the control group.)

Thus, I suggest, the L.A. data in the early 1990s is a test case of the effect of a severe recession on mortgage defaults. Figure 2 illustrates the number of homes sold and the median prices in Los Angeles from January 1985 to August 2009. The horizontal line segments indicate the period over which all homes purchased with a loan to value ratio of 90 percent (indicating a 10 percent downpayment) and interest-only payments will be underwater at the next trough. If all these homes were returned to the banks under the worst case scenario when the price hits bottom, the bank losses are 90 percent of the gap between that line segment and the price at origination. Clearly the underwater problem is much more intense in the latest downturn than it was in the 1990s.

In the first episode, volume peaked much before prices, falling by 50 percent in 28 months. Though volume was crashing, the median price continued to increase, peaking in May 1991, 101 percent above its value at the start of these data in January 1985. Then commenced a slow price decline with a trough in

Figure 1

Payroll Jobs in Los Angeles and U.S. Overall

(recessions shaded)

Note: The figure shows the change in number of employees on nonfarm payrolls, in the Los Angeles area and in the U.S. overall, relative to January 1990, seasonally adjusted. The Los Angeles area is the Los Angeles-Long Beach-Santa Ana, California, Metropolitan Statistical Area.

[Figure: percent change in jobs relative to Jan. 1990 (vertical axis), 1990 to 2008 (horizontal axis); series: U.S. overall, Los Angeles; annotation: Dec. 1993 trough.]


December 1996, with the median price 29 percent below its previous peak, a decline of 5 percent per year.

I have been fond of summarizing these data by saying that for homes, it's a volume cycle, not a price cycle. This very slow price-discovery occurs because people celebrate investment gains, but deny losses. Owner-occupants of homes can likewise hold onto long-ago valuations and insist on prices that the market cannot support. Because of that denial, there are many fewer transactions, and the transactions that do occur tend to be at the sellers' prices, not equilibrium market prices.

The slow price discovery acts like a time-out, allowing the fundamentals to catch up to valuations and keeping foreclosure rates at minimal levels.⁴ In the early phase of the current housing correction, history seemed to be repeating itself, since volume was dropping rapidly even as prices continued to rise. But then began a rapid 51 percent drop in home prices between August 2007 and March 2009, creating a huge amount of underwater valuation.

4 The first underwater problem illustrated in Figure 2 could be almost completely remedied by a switch from 90 to 80 percent loan-to-value ratios starting when volumes began to drop, because most of the underwater loans came after that break in the market.

Figure 2

Los Angeles Housing Market: Median Home Price and Sales Volume

Source: California Association of Realtors.
Note: The median price and sales volume are a 3-month moving average, seasonally adjusted. The line segments identify homes that will be underwater at the coming cycle trough assuming 90 percent loan to value at origination and interest-only payments. (Denoting the price at time $t$ by $p_t$ and the price at the trough by $p_{\min}$, with 10 percent down, the loan balance is $0.9\,p_t$, and the home is underwater at the trough if $0.9\,p_t > p_{\min}$ or if $p_t > p_{\min}/0.9$.)

[Figure: sales volume (left axis, 2,000 to 6,000) and median price in dollars (right axis, 100,000 to 700,000), 1985 to 2009. Line segments identify underwater homes at cycle troughs assuming 90% loan to value.]
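
The underwater condition in the note to Figure 2, and the statement in the text that bank losses are 90 percent of the gap between the line segment and the price at origination, can be checked with a few lines of arithmetic. The sketch below assumes an interest-only loan whose balance stays at the loan-to-value ratio times the origination price and ignores fees, missed payments, and selling costs. The prices and the function names are hypothetical; they are not data from the figure.

```python
def is_underwater(price_at_origination, trough_price, ltv=0.9):
    """True if the interest-only loan balance exceeds the price at the trough."""
    return ltv * price_at_origination > trough_price


def underwater_threshold(trough_price, ltv=0.9):
    """Height of the line segment: origination prices above this are underwater."""
    return trough_price / ltv


def bank_loss(price_at_origination, trough_price, ltv=0.9):
    """Worst-case loss if the home is returned to the bank at the trough price."""
    return max(ltv * price_at_origination - trough_price, 0.0)


# Hypothetical prices, chosen only for illustration
p_t, p_min = 500_000, 360_000

threshold = underwater_threshold(p_min)
loss = bank_loss(p_t, p_min)
gap = p_t - threshold  # distance between origination price and the line segment

print("underwater:", is_underwater(p_t, p_min))
print("line segment height:", round(threshold))
print("bank loss:", round(loss), "| 0.9 * gap:", round(0.9 * gap))
```

The last line prints the same amount twice because $0.9\,p_t - p_{\min} = 0.9\,(p_t - p_{\min}/0.9)$, which is the identity behind the "90 percent of the gap" statement.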


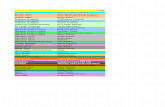

Why did the L.A. 1990s data mislead with regard to the current housing correction? One possible answer is untested social effects and unmeasured subject effects: two very important interactive confounders. Innovations in mortgage origination in 2003-2005 extended the home-ownership peripheries of our cities both in terms of income and location. When the subprime mortgage window shut down, the demand for owner-occupied homes at the extended peripheries was eliminated virtually overnight. Since these properties were financed with loans that could only work if the houses paid for themselves via appreciation, the banks became the new owners. Accounting rules do not allow banks to deny losses the way owner-occupants do, and banks immediately dumped the foreclosed homes onto the market at the worst time, in the worst way, with broken windows and burned-up lawns, concentrated in time and space, causing very rapid (or even exaggerated) price discovery in the affected peripheries. In contrast, foreclosures in the 1990s, illustrated in Figure 3 for the case of Southern California, were delayed, and were dispersed in time and space.

We all need to learn both narrower and broader lessons here. I expected price discovery in housing markets to be slow, as it had been after the bursting of previous bubbles, and I was completely wrong. I will be more careful about interactive social confounders in the future.

Figure 3

Southern California Foreclosures

Source: MDA DataQuick.
Note: The concentration of foreclosures in time and space in Southern California in the latest housing correction is illustrated in Figure 3, which displays quarterly foreclosures since 1987 for both Los Angeles County and the Inland Empire, composed of the two peripheral counties east of Los Angeles County (Riverside and San Bernardino), where subprime lending was very prevalent. In both Los Angeles and the Inland Empire in the 1990s, the rise in foreclosures trailed the price movement by several years. In contrast, the time-concentrated spike in foreclosures in 2007 occurred at the very start of the price erosion and has been much more extreme in the Inland Empire than in Los Angeles.

[Figure: quarterly foreclosures (vertical axis, 0 to 20,000), 1987 to 2009 (horizontal axis); series: Inland Empire, Los Angeles.]


White-Washing

It should not be a surprise at this point in this essay that I part ways with Angrist and Pischke in their apparent endorsement of White's (1980) paper on how to calculate robust standard errors. Angrist and Pischke write: "Robust standard errors, automated clustering, and larger samples have also taken the steam out of issues like heteroskedasticity and serial correlation. A legacy of White's (1980) paper on robust standard errors, one of the most highly cited from the period, is the near-death of generalized least squares in cross-sectional applied work."

An earlier generation of econometricians corrected the heteroskedasticity problems with weighted least squares using weights suggested by an explicit heteroskedasticity model. These earlier econometricians understood that reweighting the observations can have dramatic effects on the actual estimates, but they treated the effect on the standard errors as a secondary matter. A robust standard error completely turns this around, leaving the estimates the same but changing the size of the confidence interval. Why should one worry about the length of the confidence interval, but not the location? This mistaken advice relies on asymptotic properties of estimators.⁵ I call it "White-washing." Best to remember that no matter how far we travel, we remain always in the Land of the Finite Sample, infinitely far from Asymptopia. Rather than mathematical musings about life in Asymptopia, we should be doing the hard work of modeling the heteroskedasticity and the time dependence to determine if sensible reweighting of the observations materially changes the locations of the estimates of interest as well as the widths of the confidence intervals.

Estimation with instrumental variables is another case of inappropriate reliance on asymptotic properties. In finite samples, these estimators can seriously distort the evidence for the same reason that the ratio of two sample means, $\bar{y}/\bar{x}$, is a poor summary of the data evidence about the ratio of the means, $E(y)/E(x)$, when the finite sample leaves a dividing-by-zero problem because the denominator $\bar{x}$ is not statistically far from zero.
This problem is greatly amplifed with multipleis not statistically ar rom zero. This problem is greatly amplifed with multipleweak instruments, a situation that is quite common. It is actually quite easibleweak instruments, a situation that is quite common. It is actually quite easiblerom a Bayesian perspective to program an alert into our instrumental variablesrom a Bayesian perspective to program an alert into our instrumental variablesestimation together with remedies, though this depends on Assumptions. In otherestimation together with remedies, though this depends on Assumptions. In otherwords, you have to do some hard thinking to use instrumental variables methodswords, you have to do some hard thinking to use instrumental variables methodsin fnite samples.in fnite samples.As the sample size grows, concern should shit rom small-sample bias toAs the sample size grows, concern should shit rom small-sample bias toasymptotic bias caused by the ailure o the assumptions needed to make instru-asymptotic bias caused by the ailure o the assumptions needed to make instru-mental variables work. Since it is the unnamed, unobserved variables that are themental variables work. Since it is the unnamed, unobserved variables that are thesource o the problem, this isnt easy to think about. The percentage bias is small isource o the problem, this isnt easy to think about. The percentage bias is small i

5 The change in length but not location o a confdence interval is appropriate or one specializedcovariance structure.

-

7/28/2019 Tantalus (Leamer)

14/16

44 Journal o Economic Perspectives

the variables you have orgotten are unimportant compared with the variables thatthe variables you have orgotten are unimportant compared with the variables thatyou have remembered. Thats easy to determine, right?you have remembered. Thats easy to determine, right?
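The ratio-of-means point made in the instrumental variables discussion can be checked with a small simulation. What follows is only an illustrative sketch, not part of the original argument: the sample size, the means, the unit variances, the normal distribution, and the function names are all assumptions chosen for the example. It compares the sampling behavior of $\bar{y}/\bar{x}$ when the denominator mean is far from zero with its behavior when the denominator mean is within a standard error of zero.

```python
import random
import statistics


def simulate_ratio_of_means(mean_x, mean_y, n, reps, seed):
    """Return `reps` simulated values of ybar / xbar from samples of size n.

    x and y are drawn independently from normal distributions with unit
    variance (an assumption made purely for illustration).
    """
    rng = random.Random(seed)
    ratios = []
    for _ in range(reps):
        xbar = statistics.fmean(rng.gauss(mean_x, 1.0) for _ in range(n))
        ybar = statistics.fmean(rng.gauss(mean_y, 1.0) for _ in range(n))
        ratios.append(ybar / xbar)
    return ratios


def summarize(ratios, true_ratio):
    """Median, interquartile range, and share of estimates with the wrong sign."""
    q1, median, q3 = statistics.quantiles(ratios, n=4)
    wrong_sign = sum(1 for r in ratios if r * true_ratio < 0) / len(ratios)
    return {"median": median, "iqr": q3 - q1, "wrong_sign_share": wrong_sign}


# With n = 25 and unit variance, each sample mean has a standard error of 0.2.
# Case 1: E(x) = 2.0, ten standard errors from zero; true ratio is 0.5.
strong = summarize(simulate_ratio_of_means(2.0, 1.0, 25, 2000, seed=1), 0.5)
# Case 2: E(x) = 0.1, half a standard error from zero; true ratio is 10.
weak = summarize(simulate_ratio_of_means(0.1, 1.0, 25, 2000, seed=1), 10.0)

print("denominator 10 s.e. from zero: ", strong)
print("denominator 0.5 s.e. from zero:", weak)
```

Under these assumptions the first case should give ratios clustered tightly around the true value, while in the second a sizable share of the simulated ratios carry the wrong sign and the interquartile range is far wider. The same sample size that is ample in one case is plainly inadequate in the other.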

A Word on Macroeconomics

Finally, I think that Angrist and Pischke are way too optimistic about the prospects for an experimental approach to macroeconomics. Our understanding of causal effects in macroeconomics is virtually nil, and will remain so. Don't we know that? Though many members of our profession have jumped up to support the $787 billion stimulus program in 2009 as if they knew that was an appropriate response to the Panic of 2008, the intellectual basis for that opinion is very thin, especially if you take a close look at how that stimulus bill was written.

The economists who coined the DSGE acronym combined in three terms the things economists least understand: "dynamic," standing for forward-looking decision making; "stochastic," standing for decisions under uncertainty and ambiguity; and "general equilibrium," standing for the social process that coordinates and influences the actions of all the players. I have tried to make this point in the title of my recent book: Macroeconomic Patterns and Stories (Leamer, 2009). That's what we do. We seek patterns and tell stories.

Conclusion

Ignorance is a formidable foe, and to have hope of even modest victories, we economists need to use every resource and every weapon we can muster, including thought experiments (theory), and the analysis of data from nonexperiments, accidental experiments, and designed experiments. We should be celebrating the small genuine victories of the economists who use their tools most effectively, and we should dial back our adoration of those who can carry the biggest and brightest and least-understood weapons. We would benefit from some serious humility, and from burning our "Mission Accomplished" banners. It's never gonna happen.

Part of the problem is that we data analysts want it all automated. We want an answer at the push of a button on a keyboard. We know intellectually that thoughtless choice of an instrument can be a severe problem and that summarizing the data with the "consistent" instrumental variables estimate when the instruments are weak is an equally large error.[6] The substantial literature on estimation with weak instruments has not yet produced a serious practical competitor to the usual instrumental variables estimator. Our keyboards now come with a highly seductive button for instrumental variables estimates. To decide how best to adjust the instrumental variables estimates for small-sample distortions requires some hard thought. To decide how much asymptotic bias afflicts our so-called consistent estimates requires some very hard thought and dozens of alternative buttons. Faced with the choice between thinking long and hard versus pushing the instrumental variables button, the single button is winning by a very large margin.

Let's not add a "randomization" button to our intellectual keyboards, to be pushed without hard reflection and thought.

[6] Bayesians have a straightforward solution in theory to this problem: describe the marginal likelihood function, marginalized with respect to all the parameters except the coefficient being estimated. If the instruments are strong, this marginal likelihood will have its mode near the instrumental variables estimate. If the instruments are weak, the central tendency of the marginal likelihood will lie elsewhere, sometimes near the ordinary least-squares estimate.

Comments from Angus Deaton, James Heckman, Guido Imbens, and the editors and referees for the JEP (Timothy Taylor, James Hines, David Autor, and Chad Jones) are gratefully acknowledged, but all errors of fact and opinion, of course, remain my own.

References

Bewley, Truman F. 1986. "Knightian Decision Theory." Parts 1 and 2. Cowles Foundation Discussion Papers No. 807 and 835.

Card, David. 1990. "The Impact of the Mariel Boatlift on the Miami Labor Market." Industrial and Labor Relations Review, 43(2): 245–57.

Chamberlain, Gary, and Guido Imbens. 1996. "Hierarchical Bayes Models with Many Instrumental Variables." NBER Technical Working Paper 204.

The Economist. 2009. "Cause and Defect." August 13, 2009.

Deaton, Angus. 2008. "Instruments of Development: Randomization in the Tropics, and the Search for the Elusive Keys to Economic Development." The Keynes Lecture, British Academy, October, 2008.

Fox, Justin. 2009. The Myth of the Rational Market. A History of Risk, Reward and Delusion on Wall Street. New York: HarperCollins.

Gates, Dominic. 2009. "Boeing 787 Wing Flaw Extends Inside Plane." Seattle Times, July 30. http://seattletimes.nwsource.com/html/boeingaerospace/2009565319_boeing30.html.

Hanany, Eran, and Peter Klibanoff. 2009. "Updating Ambiguity Averse Preferences." The B.E. Journal of Theoretical Economics, vol. 9, issue 1 (Advances), article 37.

Heckman, James J. 1992. "Randomization and Social Program Evaluation." In Evaluating Welfare and Training Programs, eds. Charles Manski and Irwin Garfinkel, 201–230. Cambridge, MA: Harvard University Press. (Also available as NBER Technical Working Paper 107.)

Imbens, Guido W. 2009. "Better LATE than Nothing: Some Comments on Deaton (2009) and Heckman and Urzua (2009)." NBER Working Paper No. w14896.

Imbens, Guido W., and Joshua D. Angrist. 1994. "Identification and Estimation of Local Average Treatment Effects." Econometrica, 62(2): 467–75.

Keynes, John Maynard. 1921. A Treatise on Probability. London: Macmillan.

Keynes, John Maynard. 1936. The General Theory of Employment, Interest and Money. Macmillan.

Klibanoff, Peter, Massimo Marinacci, and Sujoy Mukerji. 2005. "A Smooth Model of Decision Making Under Ambiguity." Econometrica, 73(6): 1849–1892.

Knight, Frank. 1921. Risk, Uncertainty and Profit. New York: Houghton Mifflin.

Leamer, Edward E. 1978. Specification Searches: Ad Hoc Inference with Nonexperimental Data. John Wiley and Sons.

Leamer, Edward E. 1983. "Let's Take the Con Out of Econometrics." American Economic Review, 73(1): 31–43.

Leamer, Edward E. 1985. "Vector Autoregressions for Causal Inference?" In Karl Brunner and Allan H. Meltzer, eds., Carnegie-Rochester Conference Series on Public Policy, vol. 22, pp. 255–304.

Leamer, Edward E. 2009. Macroeconomic Patterns and Stories. Springer Verlag.

Liu, Ta-Chung. 1960. "Underidentification, Structural Estimation, and Forecasting." Econometrica, 28(4): 855–65.

Sala-i-Martin, Xavier. 1997. "I Just Ran Two Million Regressions." American Economic Review, 87(2): 178–83.

Sims, Christopher. 1980. "Macroeconomics and Reality." Econometrica, 48(1): 1–48.

White, Halbert. 1980. "A Heteroskedasticity-Consistent Covariance Matrix Estimator and a Direct Test for Heteroskedasticity." Econometrica, 48(4): 817–38.