149550

Transcript of 149550

-

8/13/2019 149550

1/71

RelativeDistanceMeasurement

usingGPSandInternalVehicleSensors

MasterofScienceThesisintheMastersProgrammeCommunicationEngineeringAHMETIRKIN

SERKANKARAKIS

DepartmentofSignalsandSystems

DivisionofSignalProcessingandBiomedicalEngineeringCHALMERSUNIVERSITYOFTECHNOLOGY

Gteborg,Sweden2011

MastersThesis2011:15

-

8/13/2019 149550

2/71

-

8/13/2019 149550

3/71

MASTERSTHESIS2011:15

RelativeDistanceMeasurement

usingGPS

and

Internal

Vehicle

Sensors

MasterofScienceThesisintheMastersProgrammeCommunicationEngineering

AHMETIRKIN

SERKANKARAKIS

DepartmentofSignalsandSystems

DivisionofSignalProcessingandBiomedicalEngineering

CHALMERSUNIVERSITYOFTECHNOLOGY

Gteborg,Sweden2011

-

8/13/2019 149550

4/71

RelativeDistanceMeasurementusingGPSandInternalVehicleSensors

MasterofScienceThesisintheMastersProgrammeCommunicationEngineering

AHMETIRKIN

SERKANKARAKIS

AHMETIRKIN,SERKANKARAKIS,2011

Examensarbete/InstitutionenfrSignalerochSystem,

Chalmerstekniskahgskola,2011:15

DepartmentofSignalsandSystems

DivisionofSignalProcessingandBiomedicalEngineering

ChalmersUniversityofTechnology

SE41296Gteborg

Sweden

Telephone:+46(0)317721000

Cover:

AvisualoftheVehicletoVehiclecommunications,ofwhichthisthesisworkpresents

oneofthebenefits:relativepositioningoftargetvehicles.

DepartmentofSignalsandSystemsGteborg,Sweden2011 Kommentar [mp1]: IfthereportisprintedbyforinstanceChalmers

reproservice,thisnameshouldbeinserted

here.Ifelsethedepartmentnameshould

begiven.

-

8/13/2019 149550

5/71

i

RelativeDistanceMeasurementusingGPSandInternalVehicleSensors

MasterofScienceThesisintheMastersProgrammeCommunicationEngineering

AHMETIRKIN

SERKANKARAKIS

DepartmentofSignalsandSystems

DivisionofSignalProcessingandBiomedicalEngineering

ChalmersUniversityofTechnology

ABSTRACT

Each year a million people are being injured in traffic accidents. New active safety systems

have recentlybeen introduced on themarket thatmaysignificantly reduce theeffect ofcollisions

andsometimesevenavoidthem(RefCitySafety).Inordertopreventanaccidentthereisaneedto

first assess and understand the traffic situation around the vehicle. The current state of the art

active safety systems utilize radars, cameras and laser based sensors to support environmental

sensing, i.e., to position the neighboring vehicles in relation to the host vehicle and the

infrastructure(roads,intersections,etc.).Futuresafetysystemswillobtainadditionalinformationby

communicatingwith

other

vehicles

and

the

infrastructure,

also

by

including

new

sensors

like

aGPS

andamap.Suchinformationmaydramaticallyimprovetheaccuracyofourunderstandingthetraffic

situation,butasofnow,thedesignofsuchsystemshasnotbeenstudiedmuch.

This master thesis investigates how to combine information from several sensors including

GPSand internalvehiclesensors inordertopositiontheegovehicleandotherobjectsaroundthe

vehicle. Apart from designing the positioning systems, we also wish to identify difficulties and

understand different parts of the problem. These parts include sensor models, sensor fusion

algorithmsandtechniquestohandledatathatarriveswithatimedelay.

-

8/13/2019 149550

6/71

ii

ACKNOWLEDGMENTS

We would like to thank our examiner Lennart Svensson from Chalmers and our supervisor

HenrikLindfromVolvoCarCorporationsomuchforbringingthis interestingthesisworktousand

alsofortheircontinuoussupportthroughoutourwork.

We also thank Volvo Car Corporation and especially Mats Lestander for making the thesis

workpossiblewithprovidingeverythingweneededduringtheproject.

-

8/13/2019 149550

7/71

iii

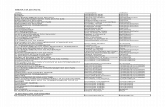

TABLEOF

CONTENTS

Abstract......................................................................................................................................... i

Acknowledgments........................................................................................................................ ii

TableofContents........................................................................................................................ iii

ListofAbbreviations..................................................................................................................... v

TableofFigures........................................................................................................................... vi

1

Introduction........................................................................................................................

1

1.1 ThesisBackground.......................................................................................................... 1

1.2 AimoftheThesis............................................................................................................. 2

1.3 Methodology................................................................................................................... 2

1.4 DescriptionofTestEquipmentandConfigurations........................................................ 2

1.5 Limitations....................................................................................................................... 4

1.6 OutlineoftheReport...................................................................................................... 5

2

Technical

Overview

.............................................................................................................

6

2.1 PrinciplesofGPS............................................................................................................. 6

2.1.1 GPSSignal................................................................................................................ 6

2.1.2 DeterminingReceiverPosition................................................................................ 7

2.1.3 GPSMessages......................................................................................................... 8

2.1.4 GPSDataError...................................................................................................... 12

2.2 CoordinateSystems...................................................................................................... 12

2.2.1 GeographicalCoordinateSystem.......................................................................... 13

2.2.2 EarthCenteredEarthFixedCoordinateSystem................................................... 13

2.2.3 MapProjection...................................................................................................... 14

2.2.4 EllipticParametersoftheEarthforGPSApplications.......................................... 15

-

8/13/2019 149550

8/71

iv

2.3

InternalVehicle

Sensors

................................................................................................

16

2.4 SensorFusionSystems.................................................................................................. 17

2.4.1 InertialNavigationSystem.................................................................................... 18

2.4.2 KalmanFilter......................................................................................................... 18

3 MathematicalModelofAlgorithmsandConfiguration.................................................... 21

3.1 OverviewoftheAlgorithm............................................................................................ 21

3.1.1 SynchronizationofGPSandInternalSensors....................................................... 22

3.1.2

Parametersof

the

Kalman

Filter

...........................................................................

23

3.1.3 DetectionofSignalQualitiesforGPSandInternalSensors.................................. 27

3.1.4 RelativeDistanceMeasurement........................................................................... 28

4 Results............................................................................................................................... 30

4.1 Testcase1.................................................................................................................. 30

4.2 Testcase2.................................................................................................................. 34

5 ConclusionandFutureWork............................................................................................ 43

Bibliography................................................................................................................................

44

-

8/13/2019 149550

9/71

v

LISTOF

ABBREVIATIONS

CAN ControllerAreaNetwork

ECEF EarthCenteredEarthFixed

GPS GlobalPositioningSystem

HSCAN HighSpeed ControllerAreaNetwork

Hz Hertz

IEEE InstituteofElectricalandElectronicsEngineers

INS InertialNavigationSystem

ITS IntelligentTransportationSystems

LPF LowPassFilter

NGA NationalGeospatialIntelligenceAgency

NIMA NationalImageryandMappingAgency

NMEA NationalMarineElectronicsAssociation

SNR SignaltoNoiseRatio

V2V VehicletoVehicle

WAVE WirelessAccessforVehicularEnvironments

WGS

World

Geodetic

System

WLAN WirelessLocalAreaNetwork

latitude

longitude

h altitude

-

8/13/2019 149550

10/71

vi

TABLEOF

FIGURES

Figure1.1 Overviewofdeviceconnections.......................................................................................... 3

Figure2.1 Calculationofsatellitedistance........................................................................................... 7

Figure2.2 VisualizationofGPSpositioning.......................................................................................... 8

Figure2.3 ECEFandGeodeticcoordinates......................................................................................... 14

Figure2.4 Mercatorprojection........................................................................................................... 15

Figure2.5 Lowpassfilterappliedtoyawrate.................................................................................... 17

Figure2.6 VisualizationoftheKalmangain........................................................................................ 19

Figure3.1 Overviewofthesensorfusionsystem............................................................................... 22

Figure3.2 TheKalmanfilteralgorithm............................................................................................... 25

Figure3.3 Relativedistancemeasurement........................................................................................ 29

Figure4.1 Testtrack1......................................................................................................................... 30

Figure4.2 Vehiclespeed..................................................................................................................... 31

Figure4.3 Lateraldistance.................................................................................................................. 31

Figure4.4 Longitudinaldistance......................................................................................................... 32

Figure4.5 Targetangle....................................................................................................................... 32

Figure

4.6

Lateral

error

histogram

......................................................................................................

33

Figure4.7 Longitudinalerrorhistogram............................................................................................. 33

Figure4.8 Targetangleerrorhistogram............................................................................................. 34

Figure4.9 Testtrack2......................................................................................................................... 35

Figure4.10 Vehiclespeed................................................................................................................... 36

Figure4.11 Lateraldistance................................................................................................................ 36

Figure4.12 Longitudinaldistance....................................................................................................... 37

Figure4.13 Targetangle..................................................................................................................... 37

Figure4.14 Absolutedistanceerror................................................................................................... 38

Figure4.15

Lateral

error

histogram

....................................................................................................

38

Figure4.16 Longitudinalerrorhistogram........................................................................................... 39

Figure4.17 Targetangleerrorhistogram........................................................................................... 39

Figure4.18 GPSclockerror................................................................................................................. 42

-

8/13/2019 149550

11/71

1

INTRODUCTION

In this section, the background of our thesis workwillbe introduced to the reader and the

goals will be defined. Technical equipments used in the project will also be briefly introduced. In

addition, theassumptionsmade,and the limitations put intheworkwillbepresented.Finally,an

outlineofthereportisgivenattheendofthesection.

1.1 ThesisBackground

Todays active safety systems utilize radars, cameras and laser based sensors to support

environmentalsensing, i.e.,topositiontheneighboringvehicles inrelationtothehostvehicleand

the infrastructure (roads, intersections, etc.). Systems capability is dependent on the limits of the

sensorswhichallowtrackingofobjectsonlyinviewofthesensors. Futuresafetysystemswillobtain

additional information by communicating with other vehicles and the infrastructure, also by

includingnewsensorssuchasaGPS.Suchinformationmaydramaticallyimprovetheaccuracyofour

understandingthetrafficsituationandreducepossibilityofaccidents,butasofnow,thedesignof

suchsystemshasnotbeenstudiedmuch.

InthisthesisworkrelativedistancecalculationtosupportV2Vcommunication ispresented.

ConsideringtheshortdistancebetweenthecarsdrivingontheroadandusingsimilarGPSreceiver

products,

it

is

practical

to

assume

that

they

may

have

common

errors

()

and

also

vehicle

specific

errors () in their individual position estimations. Therefore, we can define the equation of the

positionestimationforeachvehicleasfollows:

Ourexpectationwasalthougheachvehiclehaderroneouspositionestimationwiththemodel

definedabove;differentiationcoulddiscardthecommonerror includedinbothvehiclepositions.

To evaluate this idea, we logged and organized the necessary information and implemented a

systemto

make

the

best

position

estimations.

In

order

to

reduce

the

vehicle

specific

errors

in

the

calculations, other inputs (yaw rate, speed, gear, etc.) are also introduced to the system and

combined with a sensor fusion algorithm. There are different methods and algorithms such as

KalmanFiltering,BayesianNetworks,DempsterShaferTheoryandetc.usedtoimplementasensor

-

8/13/2019 149550

12/71

2

fusionsystem

for

different

applications.

A

Kalman

filter

is

used

for

improving

position

and

thereby

distancemeasurementsinthismasterthesiswork.

1.2 AimoftheThesis

The target of this thesis work is to investigate the possible improvements in collision

avoidance systems that might become available with V2V communication. The main goals of our

workaredeterminingtherelativedistanceofatargetvehiclewithrespecttotheegovehicleasthey

share GPS and internal vehicle sensor information such as speed, yaw rate, steering angle and

analyzingtheaccuracy,dependenciesandchallengesofsuchasystem.

As

of

now,

several

associations

and

corporations

are

working

on

V2V

communication

standards.Oneofthem, IEEE,hasrecentlydevelopedthe802.11pstandard,thesocalledWireless

AccessforVehicularEnvironments(WAVE)forIntelligentTransportationSystems(ITS),whichbrings

asetofadjustmentstothewellknown802.11WLANprotocol[1].However,duringthisthesiswork,

althoughthevehiclesdidnothavesuchbuiltincommunicationsystemphysically,weassumedthat

theyhadoneandtheycouldsharethenecessaryinformationwitheachother.

1.3 Methodology

Todesignanaccurateandefficientsensorfusionsystem,theprinciplesofGPSandthevehicle

sensors

are

studied

first.

Once

the

strengths

and

weaknesses

of

each

of

them

are

grasped,

the

necessary sensors are chosen to be inputs to the system. The next thing is to choose the sensor

fusion algorithm and define the equations to combine the inputs in a reasonable way. After the

equations are defined and the sensor fusion algorithm is developed, CANalyzer software to log

information from CAN bus of the vehicle is installed and a C++ code is written to record GPS

information.Smalltestdataisloggedtoanalyzeandsolvesynchronizationproblemandonceallthe

information is synchronized, the sensor fusion algorithm is developed in MATLAB. When the

software and the hardware are prepared, test scenarios are created and thereby tests are done

accordingtothem.Finally,resultsofthetestsareanalyzedandpresented.

1.4 DescriptionofTestEquipmentandConfigurations

Testvehiclesthatwereusedinourprojectarestandard2007VolvoS80sedanand2008Volvo

V70 station wagon. Both vehicles are equipped with GlobalSat BU353 GPS receiver, standard

internalsensors(speed,yaw,gear)andonlyS80hadintegratedRadarCamerasensor.

-

8/13/2019 149550

13/71

3

GPSReceiver

GlobalSatBU353GPSreceiverwasused inthisthesiswork.GPSreceiver isequippedwitha

high performance SiRF Star III GPS chipset, which outputs the information in NMEA 0183 format

throughitsUSBinterface.WeusedGPRMC,GPGGAandGPGSVmessagesofNMEA0183outputin

ourproject.

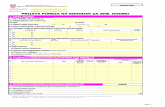

Table1 SamplingfrequencyofGPSdata

Source SamplingTime SamplingFrequency

GPRMC GPS 1sec 1Hz

Latitude

Longitude

Speed

Time

GPGGA 1sec 1Hz

NumberofSatellites

GPGSV 5sec 0.2Hz

SNR

ControllerAreaNetwork(CAN)&VectorCANcaseXL

Controllerareanetwork (CAN) isaserialbuscommunicationprotocolsuited fornetworking

sensors, actuators and other nodes in real time systems [2]. The protocol is widely used in the

automotive industry. CAN messages are kept in four different formats as data, remote,error and

overload. In our project, data messages are used. CAN message frame consists of Start of Frame

(SOF), Identifier,RemoteTransmissionRequest (RTR),Control, Data, Cyclic Redundancy Checksum

(CRC),Acknowledgement(ACK)andEndofFrame(EOF).

Figure0.1 Overviewofdeviceconnections

CANcaseXL CANalyzer

GPSLogger

-

8/13/2019 149550

14/71

4

SOF

Identifier

RTR

Control

Data CRC ACK EOF

Vector CANcaseXL is a USB interface, which is capable of processing CAN messages. It is

possibletoreceiveandanalyzeremoteframeswithoutanylimitation.Itisalsocapableofgenerating

and detecting error frames on the CAN bus [3]. CANcaseXL works with the Vector CANalyzer

software.

VectorCANalyzerisasoftwaretool,whichisusedforanalyzingandobservingECUnetworks

anddistributedsystemsinvehicles.Inthisthesiswork,weusedCANalyzertoolinordertoobserve,

analyzeandrecorddatatrafficinCAN.

Weloggedspeed,gearandyawrateinformationfromHighSpeedControlAreaNetwork(HS

CAN). Thesamplingfrequenciesofthesesignalsareshowninthetablebelow.

Table2 Samplingfrequencyofsensordata

Source SamplingTime SamplingFrequency

Speed HighSpeedCAN 20ms 50Hz

Gear 20ms 50Hz

Yaw 20ms 50Hz

Distance Radar 100ms 10Hz

Yaw 20ms 50Hz

1.5 Limitations

Inprincipal,thesystemthatisdevelopedinthisthesisworkreliesonaccuracyandefficiency

of the communication link between the vehicles. However, since we did not use an actual

communication system, we assumed existence of a link to share the information in between.

Therefore,theoutcomesofthisthesisworkshouldbeseenastheresultsobtainedusingaperfect

communicationsystemwithhighdatarate.

Anotherimportantassumptionistheabsoluteaccuracyofthereferencedata,withwhichwe

compareourtestresults.Weusedtheinformationfromtheradarenhancedwithacamerainorder

toobtainthe truedistancebetweenthevehiclesandcomparedour resultswiththat information.

Consequently,theaccuracyofourtestresultsisrelativetotheaccuracyoftheradarinformation.

-

8/13/2019 149550

15/71

5

Moreover,since

we

used

the

radar

camera

information

as

the

reference

and

they

required

a

lineofsightviewwithinanarrowangleofthetargetvehicle fordistancedetermination,wecould

onlytestthecaseswherethevehicleswerefollowingeachotherorat leasttheydrove in frontof

eachother.

1.6 OutlineoftheReport

The report starts with the aim of this thesis work and the theoretical background of the

problem we are dealing with. The hardware and the software equipments used in thisjob are

introducedandthelimitationsaregiventoassistthereaderwhywehavemadecertaindecisionsin

thedesign.

Afterthisbriefintroduction,adetailedoverviewofGPSandvehiclesensorsincludingworking

principles,technicalspecifications,strengthsandweaknessesispresented.Theformalsensorfusion

algorithmandtheeffectsofitsparametersareexplained.

Inthethirdchapter,anoverviewoftheentirealgorithmincludinginputsofthesensorfusion

system,solutiontosynchronizationproblemandvehicledynamicsequationsisgiven.

Resultsobtainedfromthetestsareshownintheresultssection;thecommentsandpossible

workforthefuturearepresentedintheconclusion.

-

8/13/2019 149550

16/71

6

TECHNICALOVERVIEW

Inthissection,someimportantbackgroundinformationisgiventohelpthereaderfollowthe

restofthereporteasier.MainareasdiscussedareprinciplesoftheGlobalPositioningSystem(GPS),

coordinate systems, internal vehicle sensors and sensor fusion systems, particularly the Kalman

Filterwhichisusedinourproject.

1.7 PrinciplesofGPS

Globalpositioningsystem(GPS) isasatellitebasedpositioningsystem.GPSsatellitesconvey

informationdata,whichcanbeusedtocalculatethepositionofthereceiver.GPSincludes28active

satellites thatareuniformlysettled on six differentcircularorbits. Inclinationangle oforbits from

the equator is 55 and orbits are seperated by 60 right accession from each other[4]. This

constellation provides global coverage and chance to operate 24 hours a day and in all weather

conditions. In theory three or more satellites in view is enough to calculate the location of the

receiver.Althoughreceivercancalculatetheposition,theaccuracyofpositioning isdependenton

thenumberofsatellitesinview,signalqualities,weatherconditions,etc.

1.7.1 GPSSignal

EachGPSsatelliteconveysnavigationmessage,whichismadeoffivemajorcomponents[4].

1. SatelliteAlmanacData

Satellite Almanac Data includes orbital information for each satellite in the system. The

receiverusesAlmanacDatatocalculatetheapproximatelocationofeachsatelliteatanytime.

2. SatelliteEphemerisData

Satellite Ephemeris Data gives much more accurate information about satellite locations.

Ephemerisdataisconveyedfromaparticularsatelliteanditonlyincludeslocationofthatsatellite,

anditisvalidforonlyseveralhours[4].

3. SignalTimingData

GPSdata includestimetags,whichareusedtocalculatetransmissiontimeofspecificpoints

ontheGPSsignal.This information isneededtodeterminethesatellitetouserpropagationdelay

usedforranging[4].

4. IonosphericDelayData

-

8/13/2019 149550

17/71

7

Ionospheric

delay

data

includes

estimated

amount

of

delay,

when

signal

passes

through

ionosphere.

5. SatelliteHealthMessage

Satellitehealthmessagecarriesinformationaboutsatelliteoperatingstatus.

1.7.2 DeterminingReceiverPosition

Each satellite transmits its exact orbital position and onboard clock time. The signal is

transmitted at speedof light (30010/). Time differencebetween the transmission of thesignalbythesatelliteandthereceivedtimebythereceiveriscalledtransittime.Distancebetween

thereceiver

and

the

satellite

is

then

calculated

by

multiplying

the

speed

of

light

and

the

transit

time

(). c

Figure0.1 Calculationofsatellitedistance

Thedistance fromacertainsatelliteprovidesa rangecircle,which indicatesallthepossible

positionsof

the

receiver.

Each

satellite

points

adifferent

range

circle.

In

an

ideal

case,

the

range

circlesarerepresentedwithsolidlinecirclesshowninFigure0.2.Intersectionofthesecirclesgives

theestimatedreceiverposition.However,theserangecirclesaresensitivetoerrorsandconsidering

the fact that the signal propagates with speed of light, even small clock errors may cause large

positioningerrors.Forthisreason,theGPSreceiverclockandthesatelliteclockaresynchronizedto

-

8/13/2019 149550

18/71

8

provideaccurate

positioning.

Although

synchronized

time

reduces

positioning

errors,

it

does

not

fix

theerrorscausedbyatmosphericconditionsandmultipatheffects.Theseerrorsaffectthetransmit

timeorthespeedofthesignalanditcreateslargerrangecircleswhichareshowninFigure0.2with

dashlines.

Figure0.2 VisualizationofGPSpositioning

In theory, the receiverneedsthreesatellitedistance information todetermine theposition,

however, todays GPS receivers use information from at least four satellites to determine their

positions.Accuracyofpositioningdependsonthenumberofsatellitesinviewandthequalityofthe

receivedsignals.

1.7.3 GPSMessages

GPSreceiversprocessthemessagescomingfromGPSsatellites.Processedmessageisgivenas

NMEA,SiRF,Garmin,Delormeformatatoutputofthereceiver.MostoftheGPSreceiversuseNMEA

-

8/13/2019 149550

19/71

9

format,

which

is

developed

by

National

Marine

Electronics

Association.

There

are

many

kinds

of

NMEAsentences. Someofthemarelistedbelow[5].

GPGGA Fixinformation

GPGLL Lat/Londata

GPGSA Overallsatellitedata

GPGSV Detailedsatellitedata

GPRMC RecommendedminimumdataforGPS

GPVTG Vectortrackanspeedovertheground

User needs at least one sentence from GPGGA, GPGGL or GPRMC in order to define the

positionofreceiver.InthismasterthesisreportGPGGA,GPGSVandGPRMCareused.

GPGGA

GPGGA packets enclose fix data, which gives three dimensional location of the receiver[6].

GPGGAsentencelookssimilarto:

$GPGGA,101428.000,5741.1742,N,01158.7346,E,1,10,0.8,46.9,M,40.1,M,,0000*69

,,,,,,,,,,,,,,,

ExplanationofaGPGGAsentenceisgivenbelow.

Field Example Comments

SentenceID

$GPGGA

UTCTime 101428.000 hhmmss.sss

Latitude 5741.1742 ddmm.mmmm

N/SIndicator N N=North,S=South

Longitude 01158.7346 dddmm.mmmm

E/WIndicator E E=East,W=West

PositionFix 1 0=Invalid,1=ValidGPSfix(SPS),

2=ValidDGPSfix,3=ValidPPSfix

SatellitesUsed 10 Satellitesbeingused(012)

HDOP

0.8

Horizontaldilution

of

precision

Altitude 46.9 Altitude(WGS84ellipsoid)

AltitudeUnits M M=Meters

GeoidSeparation 40.1 Geoidseparation(WGS84ellipsoid)

SeparationUnits M M=Meters

-

8/13/2019 149550

20/71

10

Timesince

DGPS

in

seconds

DGPSStationID

Checksum *69 alwaysbeginwith*

GPRMCGPRMC is a NMEA sentence, which keeps recommended minimum data for GPS [7]. It

includesposition,velocityandtimeinformation.GPRMCsentencelookssimilarto:

$GPRMC,101427.000,A,5741.1742,N,01158.7346,E,0.02,29.49,060810,,*35

,,,,,,,,,,,,

ExplanationofaGPRMCsentenceisshownbelow.

Field Example Comments

SentenceID $GPRMC

UTCTime 101427.000 hhmmss.sss

Status A A=Valid,V=Invalid

Latitude 5741.1742 ddmm.mmmm

N/SIndicator N N=North,S=South

Longitude 01158.7346 dddmm.mmmm

E/WIndicator

E

E=East,

W

=West

Speedoverground 0.02 Knots

Courseoverground 29.49 Degrees

UTCDate 060810 DDMMYY

Magneticvariation Degrees

Magneticvariation E=East,W=West

Checksum *35

GPGSVGPGSVsentencesprovideelevationandazimuthanglesofeachsatelliteaswellassignal to

noise ratio (SNR) of each signal [7]. GPGSV sentence can include maximum four satellites

informationthus thenumberofGPGSVsentencesdependsonthenumberofsatellitenumbers in

view.Generally,threesentencesarenecessaryforfullinformation.GPGSVsentencelookssimilarto:

-

8/13/2019 149550

21/71

11

$GPGSV,3,1,12,27,72,271,41,15,55,191,38,09,55,275,40,17,38,102,42*7A

$GPGSV,3,2,12,26,33,159,38,28,31,059,28,18,27,276,19,22,21,313,33*72

$GPGSV,3,3,12,12,11,223,30,11,09,042,24,24,01,326,,08,01,094,*74

,,,,,,,,,,,,,,,

ExplanationofaGPRMCsentenceisshownbelow.

Field Example Comments

SentenceID $GPGSV

No.of

messages

3

No.of

messages

in

complete

(1

3)

Sequenceno. 1 Sequenceno.ofthisentry(13)

Satellitesinview 12

SatelliteID1 27 Rangeis132

Elevation1 72 Elevationindegrees

Azimuth1 271 Azimuthindegrees

SNR1 41 SignaltonoiseratiodBHZ(099)

SatelliteID2 15 Rangeis132

Elevation2 55 Elevationindegrees

Azimuth2

191

Azimuthin

degrees

.

.

Checksum *70

ThetextbelowisapartofNMEA0183datareceivedfromtheGPSreceiverinoneofourtests

inGothenburg.

$GPRMC,101427.000,A,5741.1742,N,01158.7346,E,0.02,29.49,060810,,*35

$GPGGA,101428.000,5741.1742,N,01158.7346,E,1,10,0.8,46.9,M,40.1,M,,0000*69

$GPGSA,A,3,17,15,27,26,22,28,18,09,11,12,,,1.5,0.8,1.3*36

$GPGSV,3,1,12,27,72,271,41,15,55,191,38,09,55,275,40,17,38,102,42*7A

$GPGSV,3,2,12,26,33,159,38,28,31,059,28,18,27,276,19,22,21,313,33*72

$GPGSV,3,3,12,12,11,223,30,11,09,042,24,24,01,326,,08,01,094,*74

-

8/13/2019 149550

22/71

12

1.7.4 GPSData

Error

Thereareseveralerrors,whicheffectandreduceaccuracyofGPS.Errorsaregroupedintofive

subgroups.Thesearebrieflyexplainedunderbelow:

1. IonosphericPropagationError

Ionosphere is the upper layer of the atmosphere. It consists of gases, which are ionized by

solar radiation. Ionized gases affect the speed of the GPS signal because signal cannot propagate

withfreespacepropagationspeedwhenitpassesthroughtheionosphere.Thisspeedchangecauses

modulationdelaysanderrorsinpseudorangemeasurement.

2. TroposphericPropagationError

Troposphereisthelowerlayeroftheatmosphere,whichiscomposedofwatervaporanddry

gases.Conditionintropospherelengthenspropagationpath,whichcausestroposphericpathdelay.

Tropospheric delay is not frequency dependent; thereby frequency dependent methods cannot

cancel tropospheric errors. Standard model of the atmosphere at the antenna is used to reduce

errorsthatarecausedbytroposphere[4].

3. MultipathEffectError

Objectsorsurfaces(ex:tallbuildings)aroundtheGPSreceivercanreflectoriginalGPSsignals.

Reflected signals can create new propagation paths and intersect with original GPS signals. This

reflections increase propagation time and makes distortion on the amplitude and the phase of

signal,therebycauseserrors.

4. EphemerisDataError

Each satellite conveys Ephemeris data, which gives information about the position of the

satellite.Differencebetweenthecomputedsatelliteorbitalpositionandtheactualsatelliteorbital

position is called Ephemeris data error. Ephemeris data error is generally small and can be

eliminatedbyDGPS.

5. ReceiverClockError

Areceiver'sbuiltinclock isnotasaccurateas theatomicclocksonboardtheGPSsatellites.

Therefore,itmayhaveveryslighttimingerrors[8].

1.8 CoordinateSystems

Inpositioning,inordertopointanobjectonareferenceframe,acoordinatesystemmustbe

introduced.Acoordinatesystemincludesasetofnumberstodefinethe locationontheframe.By

usingthesenumbers,anypointintheframecanbemarkeduniquely.

-

8/13/2019 149550

23/71

13

1.8.1 GeographicalCoordinate

System

The most common way of representing the locations on a spheroid is using geographical

coordinates.Ingeographicalcoordinates,therearethreeparametersdefiningtheexactlocationofa

pointontheEarth:latitude(),longitude()andaltitude(h).Formertwoareenoughtodetermine

the position on the surface of the Earth without the height information, which in most cases is

satisfactory.Latitude istheanglebetweentheequatorialplaneandthe line,which isdrawn from

the center of the Earth to the corresponding point on the surface, while longitude is the angle

betweenthis lineand theprimemeridian crosssection.Altitude is thenormaldistanceofapoint

fromthesurfaceoftheEarth.

1.8.2 Earth-CenteredEarth-FixedCoordinateSystem

EarthCenteredEarthFixed(ECEF)coordinatesystemisaCartesiancoordinatesystem,which

is widely used in the GPS applications since it is considered to be a convenient way of locating a

point on the Earth [9].Thenameof thissystem consists of two terms;EarthCenteredand Earth

Fixedprovidingthisconveniencetogether.EarthCenteredmeansthattheoriginofthiscoordinate

systemisthecenteroftheEarth,whichmakesanypointtobe locatedalmostuniformlythatway.

The latterprovides thecoordinates rotate togetherwith theEarth,which lets themstay constant

despiteofthisphysicalrotation.Inthiscoordinatesystem,threeparametersX,YandZareusedto

determinethe

position

on

the

Earth

where

Xand

Yaxes

appear

to

be

on

the

equatorial

plane

and

Z

axis liesperpendiculartothem. Accordingtothisbasisthecoordinateset,(0,0,0)representsthe

center of the Earth and (R, 0, 0) maps to the point where the prime meridian and the equator

intersect where R is the radius of the equator [10]. The relationship between the geodetic

coordinates and the ECEF coordinates is shown in Figure 0.3 and the mathematical conversions

betweeneachotherareshownbelow[11].

coscos

cossin

s i n

where;

1

-

8/13/2019 149550

24/71

14

Figure0.3 ECEFandGeodeticcoordinates

1.8.3 MapProjection

TheprocessofrepresentingthesurfaceoftheEarthinatwodimensionalplaneiscalledmap

projection. There are many projection methods in use and in all these methods the surface is

deformed with certain rules and parameters. In this thesis work Mercator projection is used to

positiontheGPSreceiveronatwodimensionalplaneandtheoptimizationalgorithmsaredoneon

thisplane.

Mercator projectionMercatorprojection isaconformalcylindricalprojectionpresentedbyGerardusMercator in1569,which draws a set of horizontal lines representing the parallels and equally spaced vertical lines

corresponding to the meridians. Principle of the scaling in Mercator projection is shown in Figure

0.4.ConformalityprovidespreservingtheangleatanypointoftheEarthonthemap.Thisisoneof

-

8/13/2019 149550

25/71

15

the

reasons

that

Mercator

projection

is

widely

used

in

navigation

systems.

However,

while

preserving the accurate angle, the equal distances between the vertical lines cause the shape

distortedbecausethedistancebetweenthemeridiansactuallyvariesdependingonthe latitudeof

the point. The varying spaces between the horizontal lines provide the angles to be fixed with

respecttotheactualangleofadirectionwhilenothelpingthedistortedsizeortheshapeofthearea

tobecorrected[12].

Figure0.4 Mercatorprojection

Themathematicalexpressionofthex,ycoordinatesofthemapisdeterminedby[13]

and

ln tan sec ,0andarethe longitude,thereferencemeridianandthe latituderespectively.Thefirst

equation provides equal distances between the vertical lines since x coordinates are calculated

regardless to the latitude information. The conformality is provided in the second equation by

scalingthedistancesalongthemeridianswithrespecttothelatitudes.

1.8.4 EllipticParameters

of

the

Earth

for

GPS

Applications

The standard model used to determine the latitude, longitude and the altitude of a GPS

receiverisexplainedinadetailedwayinWorldGeodeticSystem1984(WGS84)byNationalImagery

and Mapping Agency (NIMA) which is known with the name National Geospatial Intelligence

Agency (NGA) currently [6]. The Earth is described as an ellipsoid in this model, which offers the

-

8/13/2019 149550

26/71

16

crosssections

parallel

to

the

equator

being

circular

while

the

ones

normal

to

the

equator

being

ellipsoid.Thesemimajoraxisoftheellipsoid,which isnormaltotheequatorandcenteredatthe

centeroftheEarthisequaltotheradiusoftheequatorandistaken6,378.137km.Thesemiminor

axisofthisellipsoid,thesocalledpolarradiusoftheEarth,is6,356.7523142kmaccordingtoWGS

84.

1.9 InternalVehicleSensors

In vehiclepositioning or relative distance measurement, usingGPS is usually not enough to

getaccurate results [14].Asmentioned in1.7.4,GPS receiverssuffer frommanyerrorscausedby

differentsources.

In

order

to

reduce

the

errors

in

the

calculations,

other

inputs

are

introduced

to

the

system and combined with a sensor fusion algorithm. These inputs are the information received

from the internal sensors thoseexpected tohavea positiveeffecton the results.Thesensorsare

alreadysendinginformationtotherelevantpartsofthesysteminthevehiclethroughCANbusand

usingthisconnection;thenecessary information is forwardedtooursensor fusionsystemaswell.

Thesensorsthoseareutilizedinthesystemarelistedanddescribedinthischapter.

1. VehicleSpeedoverGround

Vehiclespeedover ground is reliablesensor information indicating the instantspeedof the

vehicle.Itisusefulforestimatingthepositionofthevehicleorthedistancetoanobjectforafurther

timeinstance.

2. YawRate

Yaw rate is the information received from asensor,which is able todetermine the angular

velocity of the vehicle in degrees/second or radians/second. There are different kinds of sensors

capable of sensing angular velocity using different hardware and software. Yaw rate sensors are

usuallyconsideredtobetoonoisytherebynotveryreliablewhendrivinginlowspeeds.Inthatcase,

steering angle information becomes important to make a better estimation of the actual turning

angle. We also applied a lowpassfilter to reduce the noise from the yaw rate information (see

Figure0.5).

-

8/13/2019 149550

27/71

17

Figure0.5 Lowpassfilterappliedtoyawrate

3. ReversedGear

Reversedgearistheindicatorforthegearposition,whichisimportanttodecidewhetherthe

vehicleismovingforwardorbackward.

Table3 Propertiesofsensordata

Sensor information Data type Min Max Resolution Unit

Vehicle speed float 0 320 0.01 km/h

Yaw rate float -75 74.9998 0.03663 /sec

Gear level boolean 0 1

1.10SensorFusionSystems

A sensor fusion system is a system that combines information from different sources to

achievetheleastnoisyresultpossible.Thereasonbehindthenecessityofsensorfusionsystemsin

-

8/13/2019 149550

28/71

18

manyapplications

is

the

fact

that

each

sensor

has

its

own

strengths

and

weaknesses,

therefore

one

sensorisusuallynotreliableitself.

There are different methods and algorithms such as Kalman Filtering, Bayesian Networks,

DempsterShafer Theory etc. used to implement a sensor fusion system for different applications

[15]. The strengths and weaknesses of each input are introduced to these algorithms and the

reliability of each input is determined according to the working conditions and the output is

optimizedbythisway.Inthisthesiswork,aKalmanFilterisusedforimprovingpositionanddistance

measurements.

1.10.1InertialNavigation

System

Inertialnavigationsystemisanavigationthatcomputesthefurtherpositionofanobjectfrom

thegiven initialpositionusingmotionsensorssuchasspeedandrotationaccordingtothe lawsof

physics[16].Everynewpositioniscalculatedfromthepreviouslydeterminedpositionbyaprocess

known as dead reckoning. It is used in a wide range of applications including aircraft, spacecraft,

submarine,ship,mobilelandvehicleandhumannavigation.

Duetothenatureofthissystemthenewposition isalwaysdependentonthepreviousone,

thereforetheerrorsincludedinthepreviouscalculationsarealsopreservedinthenewones.

1.10.2KalmanFilter

Oneofthemostwellknownmethods fordata/sensor fusionalgorithms istheKalmanfilter,

whichisnamedafterRudolfE.Kalmanwhoproposedalineardatafilteringalgorithminhisfamous

paper (Kalman 1960) in 1960 [17]. The problem to which the Kalman filter finds a solution is

estimatingtheoptimumstateofalineardynamicequationcorruptedbywhitenoiseusingrelevant

measurementsalsocontainingwhitenoise[18].Itisconsideredtobeoneofthebiggestdiscoveries

in statistical estimation theory and has been studied widely for various applications since its

discovery.

In principle, the Kalman filter has two distinguished steps consisting of prediction and

correction

[17].

In

prediction,

the

states

of

the

dynamics

equation

are

estimated

with

a

prediction

noiseusingpredefineddynamicmodeloftheprocessusuallybeingalawofphysics.Thecorrection

stephasthemeasurementsofthesestateswithameasurementnoise.Predictionandmeasurement

noisesarebothnormallydistributedwhitenoisesandoneshouldnote that theyare independent

-

8/13/2019 149550

29/71

19

fromeach

other.

The

Kalman

filter

improves

the

predicted

state

using

the

measurement

with

a

weightedgain,whichiscalculatedaccordingtothenoisecovariancevalues[19].

Figure0.6 VisualizationoftheKalmangain

The Kalman filter algorithm

TheKalmanfilterdoesnotrequirekeepingthehistoryofobservationsorestimationssinceit

isarecursivealgorithm,whichcalculatesthecurrentestimationonlyfromthelatestestimatedstate

and the current observation. As mentioned in the introduction of the Kalman filter 1.10.2, the

algorithm canbedivided into twopartswhere in the firstone, the socalledprediction step, the

stateisestimatedfromtheprevioustimeinstanceusingalinearpredictionequation;therebytheapriori estimate of the state at the current time instance is computed. The apriori estimate isimprovedwithanoisymeasurementfeedbackinthenextstepthatisknownasthecorrectionstep.

Thespecific

formulas

for

these

steps

are

presented

below

[17].

PrioristateestimatePrioripredictionerrorcovarianceResidual

ResidualcovarianceKalmangainPosterioristateestimatePosterioripredictionerrorcovariance

where;

Aisthestatetransitionmodel,

Bisthecontrolinputmodel,

uisthecontrolvector,

Prediction

Correction

-

8/13/2019 149550

30/71

20

Qis

the

process

error

covariance,

zisthemeasurement,

Histhemeasurementtransitionmodel,

Risthemeasurementerrorcovariance,

Iistheunitmatrix.

-

8/13/2019 149550

31/71

21

MATHEMATICALMODEL

OF

ALGORITHMS

AND

CONFIGURATION

In this section, the implementation details of our work will be presented with the

mathematical equations defined and the algorithm flowcharts. Furthermore, solutions to certain

problemssuchassynchronizationanddynamicsignalqualitydetectionarepresented.

1.11OverviewoftheAlgorithm

The algorithms that we developed during this thesis work can be divided into three main

parts: informationextraction,datasynchronizationandsensorfusionsystem.Afterthe information

was simultaneously logged from the CAN bus and the GPS receivers in the two vehicles, first the

necessarydatasuchasspeed,yawrate, latitude, longitude,SNRvaluesandetc.wereextractedby

theMATLABcodeswedeveloped.However,neither inbetweenthecarsnor inthe individualcars

amongtheGPSandthevehiclesensors,theinformationwassynchronized;therefore,thenextstep

wassynchronizingalltheinformation.Thedetailedexplanationofthewaywefollowedinthispartis

given in 1.11.1. Once the data is synchronized, two sensor fusion algorithms can work

simultaneously for the two cars and they can share the necessary information with each other

duringtheprocessshownbelow.

-

8/13/2019 149550

32/71

22

1.11.1SynchronizationofGPSandInternalSensors

Inrealtimeapplications,dataorsignalsynchronizationisoneofthemostcriticalaspectsthat

shouldbedesignedand implementedcarefully. Inourcase,althoughthetestsweredoneoffline,

thesensorfusionsystemrequiressynchronousinformationfromdifferentinputsinordertoprocess

the output correctly. Moreover, the outputs of the sensor fusion systems running in individual

vehiclesaresupposedtobesynchronouswitheachothertoo.

Figure0.1 Overviewofthesensorfusionsystem

velocity

direction

velocity

x,

y

x,y

direction

,

+

x,y

x,y

R

GPS

gear

gear

speed

speed

Q

Q

filtered

yawrate

filtered

yawrate

yaw

rate

yaw

rate

KalmanFilter

LPF

50

Hz

50

Hz

1Hz

KalmanFilter

LPF

50

Hz

GPS

R

50

Hz

1Hz

50

Hz

50

Hz

-

8/13/2019 149550

33/71

23

Thetimestamp

information

of

the

GPS

messages

was

very

sensitive,

however

there

was

no

exact match between the absolute time of the GPS and CAN messages. In order to calculate the

exacttimedifferencebetweenthem,wetookadvantageofthecrosscorrelationbetweenthespeed

signalsreceivedfrombothinputs.Thepositionofthemaximumcorrelationpointwithrespecttothe

referencepointgaveustheexacttimedifferencebetweenGPSandCAN;therefore,theinputsofthe

sensorfusionsystemweresynchronizedbyconsideringthisdifference.

AfterGPSandCANsignalsweresynchronized,thevehiclescouldbesynchronizedeasilyusing

thetimestampsofthesignalsfromtworeceiversthankstotheprecisetimeinformationintheGPS

messages.

1.11.2ParametersoftheKalmanFilter

In this section, the actual parameters that were mapped into the formal Kalman filter

algorithmdescribedin1.10.2andtheinitializationofthestateswillbeexplained.

States and the transition models of the Kalman filterThestatematrixconsistsofonlythepositionofthevehicle inourproject;therefore,thematrixes

becomescalarvalues.ThestateandthemeasurementtransitionmodelsAandHarebothequalto

1.

where

is20ms inoursystemand isthevelocityofthevehicleattheprecisemoment.Thedetailedexplanation of the derived formulas determining the velocity of the vehicle is given below in this

section.

Theprioripredictionerrorcovariancebecomes

since 1.

As explicitly shown in the previous formula, the process error covariance Q does not

necessarilystayconstantthroughoutthealgorithm;contrarily it isupdatedateverytimesamplek

byasubfunctionaccordingtothecharacteristicsoftheinternalvehiclesignalsthosetakepartinthe

prioriestimationstep.

-

8/13/2019 149550

34/71

24

Once

the

priori

estimates

are

calculated,

the

correction

step

starts

with

calculating

the

residual between the measured and the priori estimated position. One should notice thatH was

takentobe1earlier.Thereforetheresidualandtheresidualcovarianceare

and

Again, the measurement error covariance R is updated for each new GPS signal by a sub

function,whichtakesseveralpropertiessuchasnumberofsatellitesusedinthepositioncalculation

andtheSignaltoNoiseRatio(SNR)ofthesignalsreceivedfromthesesatellitesintoaccount.

TheKalman

gain

is

then

calculated

by

the

following

equation;

Theposterioristateandthepredictionerrorcovarianceestimationsbecome

and

1

Initialization of the statesThe Kalman filter recursively updates the states using the above mentioned formulas.

However,

the

states

should

be

initialized

before

the

first

iteration

in

order

to

be

updated

in

the

recursivealgorithm.

Theeasiestwayofinitializingthestatesisprobablysettingallto0sincetheywillbeupdated

laterintheprocessanyway.However,wechosetoinitializethepositionwiththefirstreceivedGPS

coordinates. On the other hand, the prediction error covarianceP was set to 5 in the beginning,

whichbasicallymeansthatthefirstpositionisassumedtobeincludinganerrorwithavarianceof5

intheCartesiancoordinates.

-

8/13/2019 149550

35/71

25

NOYES

NO

NOYES

YES

Start

Read

GPSandSensor

Data

direction=NOTSET

Initializex,Q,P

Do

k=1to

lengthof

data

direction

==SET?

isGPS

available?

Isreadytoset

direction?

direction=SET

ComputeS,K,x,P

Compute

,Q

=xQ=MAX

Compute

Computey,Ry=0

R=MAX

Figure0.2 TheKalmanfilteralgorithm

-

8/13/2019 149550

36/71

26

Inertial navigation formulas for priori position estimationTheprioripositionestimateofthevehiclewasgivenasthefollowingaboveinthissection.

where

is the velocity of the vehicle. However, how the velocity is defined on a specific axis of theCartesiancoordinatesystemhasnotbeenintroducedyet.Obviouslyconsideringatwodimensional

Cartesiancoordinatesystem,thevelocityonthexoryaxisdependsonthedirectionofthevehicles

movement.In1.9,theinternalvehiclesensorsusedinthisthesisworkwerebrieflyexplainedandin

thissection,thespecificformulasusingtheinformationfromthesesensorswillbegiven.

Referringbackto1.9,yawratesignalgivesinformationontheangularvelocityofthevehicle,

which means how many degrees the heading direction of the vehicle will change in a second.

Accordingly, a 0 degrees/second of yaw rate means that the vehicle is heading straight, a 10

degrees/second and 5 degrees/second indicate that the vehicle will make an anticlockwise 10

degreesandaclockwise5degreeschangeinitsheadingdirectioninonesecondrespectively.Ifthe

current heading direction of the vehicle is known, it is always possible to determine the heading

usingtheyawrateinformationcontinuouslywiththefollowingequation.

Once the direction of the vehicle is computed, the velocity on each axis on the coordinate

systemcanbecalculatedbycombiningthedirectionwiththespeedinformation.

cos sin

Animportantpointinthevelocitycalculationsisthatitisafunctionofthedirectionandthe

direction is updated recursively by adding the yaw rate at each time, which causes the errors

included in the yaw rate to be added to the direction in a cumulative manner. After a while, the

direction gets highly corrupted because of this motive. In order to reduce this effect, we

implemented a sub function, which periodically keeps track of the two latest positions when the

observationsareavailableandcomputesthedirectionofthemovementusingasimplegeometrical

formulashownbelow.

t a n

-

8/13/2019 149550

37/71

27

1.11.3Detectionof

Signal

Qualities

for

GPS

and

Internal

Sensors

In1.10.2,itwasmentionedthatthelastdecisionontheoutputoftheKalmanfilterismadeby

the Kalman gain factor, which depends on the noise covariance of the prediction and the

observation.Therefore,thekeypoint is introducing thecovarianceof theseprocessescorrectlyto

theKalmanfilter.

Wealreadycitedin1.11.2thattheerrorcovariancevariesbytimeinbothsteps.Thenecessity

behindthisvariation isthe factthattheconditionsaffectingthenoisevarianceoftheseprocesses

actuallychangebytime.Forinstance,theobservationinoursystemisthepositioncalculatedbyGPS

receiverandaccuracyofthispositionmaychangeaccordingtothequalityofthesignalreceivedor

tothenumberofsatellitesthatwereusedincalculationsatthatmoment.Additionallythereliability

ofthesignalsreceivedfromthevehiclesensorsmayalsoalterbytheconditionsatthatmoment.

Starting with the quality of the prediction, which is inversely proportional to the noise

varianceofthesensorsused inoursystem,whicharespeedandyawrate,aftercarefulanalysisof

the logged information from these sensors, we deduced that speed could be considered very

accurateallthetime,howeveryawratewasquitenoisywhendrivingatlowspeedsupto20km/h.

Sincethepredictionofthepositionisprocessingthecombinedinformationfrombothsensors,the

noisyyawratedataaffectedtheresults.Therefore,thecovarianceofthepredictionprocessQwasa

functionofthespeedofthevehicle.

Ontheotherhand,itwasmorecomplicatedtodecideontheaccuracyoftheGPSpositiondue

to the fact that thereweremany thingsaffecting thecalculationssuchasdifferentnoisesources,

numberofvisiblesatellitesandSNRofthesignalsreceivedfromeachsatellite.

Wediscussedin1.7thattheremustbeatleastthreevisiblesatellitestobeabletodetermine

thepositionofaGPSreceiveronthesurfaceoftheEarth,andatleastfoursatellitestomanagethat

with theheight informationaswell.Basedon these facts,an idea forestimating theGPS location

accuracy is counting the number of satellite signal SNRs that are higher than a certain threshold.

Using different thresholds to notice the number of satellite signals above those levels was one

methodforunderstandingobservationerrorcovariance.

Anothermethodwefollowedtodeterminetheobservationerrorcovariancewascalculating

theaverageSNRofallsatellite signals thatwereused in thepositioncalculationsby the receiver.

Sincethefinalresultwasaffectedbyallthesignalsreceivedfromthosesatellites,itwaspracticalto

estimate the accuracy of the position using this average SNR. We implemented and tested both

-

8/13/2019 149550

38/71

28

methodsduring

our

work

and

although

the

results

were

pretty

similar,

we

came

to

aconclusion

that

usingaverageSNRprovidedslightlybetterresults.

However, we discovered that in certain cases, although the SNR values of the signals were

relativelyhigh,theaccuracyoftheGPSpositioncouldbeconsiderablypoorwhenthevehiclewas

driving around tall buildings or passing under obstacles such as bridges. Our guess is multipath

effectscausedtheresultstobecorruptedwithoutanysignificantdecreaseintheSNR.

1.11.4RelativeDistanceMeasurement

Thefinalworktobedoneafterdeterminingthepositionsofthevehicleswasmeasurementof

therelative

distance

between

them.

As

we

mentioned

in

1.8.3,

Mercator

projection

was

used

for

positioningtheGPSreceiveronatwodimensionalplaneandtheoptimizationalgorithmsweredone

onthisplane.

The difference between the absolute positions on the universal plane only defines the

distance in northsouth and eastwest direction. However, in todays safety systems, each vehicle

needstodeterminethepositionsofthesurroundingvehicleswithrespecttoitsownheadingangle.

Therefore,eachvehicleneedstodefineanewcoordinatesystem,whichisshowninFigure0.3,sets

the vehiclesheadingangle as theprimary horizontalaxis indicatedwithx. Since thevehiclemay

constantlychangeitsheadingangle,thecoordinatesystemisupdatedateverystepofthealgorithm

and

the

surrounding

vehicles

are

positioned

according

to

this

dynamic

coordinate

frame

continuously.

The calculations that we made for the relative measurement at a certain time is given as

follows:

t a ny

s i n

c o s

-

8/13/2019 149550

39/71

29

Figure0.3 Relativedistancemeasurement

-

8/13/2019 149550

40/71

30

RESULTSIn order to understand the accuracy, challenges and dependencies of a real time relative

distance measurement system; we did several tests in different areas (urban, suburban) and

environmentalconditions(weather,trafficconditions,etc.).Althoughresultsfromonlytwotestsare

covered comprehensively due to practical reasons,we nevertheless added a table of RMSE and

standarddeviationoftheerrorsforeachtestcaseattheendofthischapter.

1.12Testcase1ThisisoneoftheteststhatweredonearoundChalmersJohannebergcampusarea.Belowis

thedescriptionofthisparticulartestthatisstudied.

Figure0.1

Test

track

1

Urbanarea Mediumtraffic Pursuit(3040meters) Bothcarshavevaryingspeeds(1540km/h) Weatherconditions:cloudyandrainy

-

8/13/2019 149550

41/71

31

Figure0.2 Vehiclespeed

Figure0.3 Lateraldistance

-

8/13/2019 149550

42/71

32

Figure0.4 Longitudinaldistance

Figure0.5 Targetangle

-

8/13/2019 149550

43/71

33

Figure0.6 Lateralerrorhistogram

Figure0.7 Longitudinalerrorhistogram

-

8/13/2019 149550

44/71

34

Figure0.8 Targetangleerrorhistogram

1.13Testcase2

The second test that will be covered in this section was done in a closed traffic path in

countryside.Thedescriptionofthetestisgivenbelow.

Countryside

Entryclosedtotraffic

Pursuit(2.4sec)

Bothcarshavevaryingspeeds(6080km/h)

Weatherconditions:clear

Path:mostlystraightloop

-

8/13/2019 149550

45/71

35

Figure0.9

Test

track

2

-

8/13/2019 149550

46/71

36

Figure0.10 Vehiclespeed

Figure0.11 Lateraldistance

-

8/13/2019 149550

47/71

37

Figure0.12 Longitudinaldistance

Figure0.13 Targetangle

-

8/13/2019 149550

48/71

38

Figure0.14 Absolutedistanceerror

Figure0.15 Lateralerrorhistogram

-

8/13/2019 149550

49/71

39

Figure0.16 Longitudinalerrorhistogram

Figure0.17 Targetangleerrorhistogram

-

8/13/2019 149550

50/71

40

Table4RMSE

and

Standard

deviation

of

errors

Case Driving

ConditionsRMSElat(m)

Standard

Deviationlat

(m)

RMSElon(m)

Standard

Deviationlon

(m)

Case1UrbanVarying

Speed1.509937 1.463356 2.228135 1.801362

Case2Countryside

Varyingspeed1.369821 1.517508 2.030176 1.814933

Case3Urban

VaryingSpeed1.483793 1.225087 2.231224 1.803487

Case4Countryside

Constantspeed1.019622 0.879626 1.711952 1.317442

Case5Countryside

Varyingspeed1.365131 1.304811 2.314364 2.007950

Case6Countryside

Varyingspeed2.726428 2.671556 2.460274 2.222996

Case7Countryside

Constantspeed1.042331 0.892837 1.830348 1.426627

Case8Countryside

Varyingspeed1.631389 1.489401 2.376652 2.114173

Case9 UrbanVaryingSpeed

1.529207 1.439083 2.271184 1.940336

Case10Countryside

Varyingspeed1.462437 1.259776 2.189806 1.910531

Theresultsareanalyzedanddiscussedaccordingtothefiguresandthetableabove,however

one should consider that error analyses are done with the assumption of perfect accuracy of the

radarcamerainformationasmentionedbeforein1.5,whichisnottrueinreality.

Wehavementionedbeforethatduetothe lackoftrue informationontheabsolutevehicle

positions;wewereonlyabletodotestswhentargetvehiclewasinthefieldofradarcameraview.

However,thiswasnottheonlyproblemcausedbynonexistenceofthetrue information.Another

thingwasthedifficultyofdeterminingtheerrorcovarianceoftheGPSreceiverpositions.Sincewe

had no information on true positions, we could only use a limited number of experimental

informationatknownlocationsandonlyinimmobilesituations.

-

8/13/2019 149550

51/71

41

RMSerrors

and

standard

deviation

of

the

errors

show

that

sensor

fusion

system

with

GPS

and

vehicle sensors could be a good way for relative distance measurement with around 1.5 and 2.2

meter average lateral and longitudinal RMSE respectively. By using more suitable models in the

sensorfusionsystemandrefiningthestatesofthefilter,theerrorscouldbeevenreduced.

First thing to be noticed from the lateral and longitudinal error histograms (see Figure 0.6,

Figure 0.7, Figure 0.15, Figure 0.16); their patterns do not have normal distribution. Main reason

behindthisistheinaccuratemodelsusedinthefusionsystem,whicharenotoptimalfornonlinear

systems.Thenonlinearityofthemotionandmeasurementmodelscausedtheerrordistributionto

reshapeandhavedifferentcharacteristics.AnExtendedKalmanFilter,UnscentedKalmanFilteror

ParticleFiltercouldprovidemuchbetterresults.

Another interesting point is that the mean of the longitudinal errors is not zero, which we

think was caused by the assumption of constant velocity during one cycle of the fusion system.

However, inreality,thespeedofthevehicleshadrapidchangesatsomepointsofthetestdrives,

whichactually ledtovaryingspeedsduringa fusioncycle.Thisbehaviorchanged themeanofthe

errorstononzerovalues.

As one of our goals was to understand the noise characteristics of GPS and errors

encounteredbythereceiver,wespentquitealotoftimetofindacorrelationbetweenSNRvalues

of the GPS signals and the positioning errors. However, we could neither end up with certain

thresholds nor could notice distinct behaviors in that sense. In some tests, while the SNR values

were relatively high, the accuracy could still be poor. Interestingly, the opposite scenario was

possibletoo.

-

8/13/2019 149550

52/71

42

Figure0.18 GPSclockerror

Probably amore marked foundingwas thedifference between two identicalGPS receivers.

TheycouldoutputverydifferentSNRvaluesatthesametime,atthesameplace,whichmadethe

situationevenmorecomplicated.Ontheotherhand,onecriticalGPSreceivererrorthatwenoticed

wastheclockerror.Inatestdrive,welocatedtwosameGPSproductsononevehicleandwantedto

analyzethebehaviorsofthem.Unexpectedly,therewas1seconddifference inthetimestampsof

tworeceiversinoneparticulartest(seeFigure0.18).Consideringthefactthatweheavilyreliedon

the timestamp information of GPS, it is possible that we might have encountered the same

problems when they were positioned in different vehicles, which was impossible to notice in the

currentdesign.

Insummary,theresultsarenotasgoodasitcouldbebecauseofthesimplerdesignchoices,

butevenintheseconditionstheycouldbeconsideredencouraging.Bettersuitedmodelsandbetter

understandingoftheGPSerrordistributioncouldimprovetheresultssignificantly.

-

8/13/2019 149550

53/71

43

CONCLUSIONAND

FUTURE

WORK

Consideringthe importanceofthesafetysystems inroadtraffic,collisionavoidancesystems

aremost likelygoingtobeimprovedcontinuously.Environmentalawarenesswillalwaysbeoneof

the key figures in these systems, therefore relative distance measurements will be an important

featureandmuchmorestudiesanddevelopmentsshouldbeexpected.

The results obtained from our studies showed that it is practical to share GPS and sensor

information between the vehicles to accomplish relative positioning. However; although relative

position estimations have reasonable accuracy, we are not sure how much of the error was

eliminatedusing

the

differential

equation

defined

in

1.1,

since

we

do

not

know

the

extent

of

the

errorintheabsolutepositionsduetothelackoftruepositioninformation.

Firstthingthatmightberefinedto improverelativepositions isthevehicledynamicsmodel

that is used in sensor fusion algorithm. There are already more accurate models used in cars for

differentapplicationssuchasstabilitycontrol,etc.Themodelusedinoursystemisignoringallthe

factorsthataffecttherawmovementsuchasweightandroadslip.

Secondly,thesensor fusionalgorithmweused inthecalculations isactuallynottheoptimal

solution for nonlinear vehicle motion model and measurement model. The results could be

improvednotablywithamoresuitablealgorithm.

Weused

radar

camera

information

as

reference

since

we

did

not

have

another

option,

but

it

could actually be introduced to the sensor fusion system as well as with other potentially useful

sensors,sothatthecombinedresultscouldbeimprovedevenmore.

Finally,many more testsandanalysesshouldbedone toget a betterunderstanding of the

noisesources,dependenciesoftheproblemandincreasetheaccuracyofresults.

-

8/13/2019 149550

54/71

44

BIBLIOGRAPHY

1.IEEE.IEEE802.11OfficialTimelines.[Online]

http://grouper.ieee.org/groups/802/11/Reports/802.11_Timelines.htm.

2.VehicleApplicationsofControllerAreaNetwork.Johanson,KarlHenrik,Trngren,Martin

andNielsen,Lars.

3.GmbH,VectorInformatic.VectorUserManual.2006.

4.MohinderS.Grewal,LawrenceR.Weill,AngusP.Andrews.GlobalPositioningSystems,

InertialNavigation,andIntegration.2001.

5.GpsInformation NMEA.[Online]http://gpsinformation.org/dale/nmea.htm#nmea.

6.NationalImageryandMappingAgency,DepartmentofDefence.WorldGeodeticSystem

1984(WGS84).2000.

7.TheGeodeticSurveySectionofSurveyandMappingOffice.[Online]

www.geodetic.gov.hk/smo/gsi/data/ppt/NMEAandRTCM.ppt.

8.StateWaterResourcesControlBoard.[Online]

http://www.swrcb.ca.gov/water_issues/programs/swamp/docs/cwt/guidance/6120.pdf.

9.Stovall,SherrylH.BasicInertialNavigation.s.l.:NavigationandDataLinkSection System

Integration

Branch,

1997.

10.ElliottD.Kaplan,ChristopherJ.Hegarty.UnderstandingGPS PrinciplesandApplications

SecondEdition.2006.

11.UnderstandingCoordinateReferenceSystems,DatumsandTransformations.Janssen,V.

2009.

12.PennstateCollegeofEarthandMineralSciences.NatureofGeometricInformation.

[Online]https://www.eeducation.psu.edu/natureofgeoinfo/c2_p29.html.

13.Snyder,JohnP.MapProjections AWorkingManual.1987.

14.TohidArdeshiri,SogolKharrazi,JonasSjberg,JonasBrgman,MathiasLidberg.Sensor

Fusionfor

Vehicle

Positioning

in

Intersection

Active

Safety

Applications.

2006.

15.MartinE.Liggins,DavidL.Hall,JamesLlinas.HandbookofMultisensorDataFusion,

TheoryandPractice,SecondEdition.2009.

16.Woodman,OliverJ.Anintroductiontoinertialnavigation.2007.

17.GregWelch,GaryBishop.AnIntroductiontotheKalmanFilter.2001.

-

8/13/2019 149550

55/71

45

18.Mohinder

S.

Grewal,

Angus

P.

Andrews.

Kalman

Filtering:

Theory

and

Practice

Using

MATLABSecondEdition.2001.

19.JamesL.Crawley,YvesDemazeau.PrinciplesandTechniquesforSensorDataFusion.

20.http://www.swrcb.ca.gov/water_issues/programs/swamp/docs/cwt/guidance/6120.pdf.

[Online]

-

8/13/2019 149550

56/71

46

APPENDIXA.

SOURCE

CODES

A.1CANDataExtractorScript

% Thi s scr i pt extr acts t he rel evant dat a f r om vehi cl e CAN bus% i ncl udi ng i nt ernal sensors and radar / camer a f used dat a.

cl ose al l cl ear al l cl c

% Get t he l ocal t i me bef or e extr acti on st ar t st0 = c l ock;

% Set f i l e fol der and f i l e name to readf i l eFol der = ' . . \ \ LogFi l es\ \ ' ; f i l eName = ' Aug_20_CAN2_Case02. asc' ; f ul l Fi l eName = [ f i l eFol der f i l eName] ;

% Read t he f i l eCANFi l e = f open( f ul l Fi l eName, ' r t ' ) ;

% Request ed si gnal smessageI D1 = ' 71B' ; f uncHandl e1 = @ext r act _r adar_71B;

messageI D2 = ' 321' ; f uncHandl e2 = @ext r act _hs_can_s peed;

% messageI D3 = ' 83' ; % f uncHandl e3 = @extr act _hs_can_gear ;

% messageI D4 = ' 94' ; % f uncHandl e4 = @extr act _hs_can_st eer i ng;

messageI D5 = ' 155' ; f uncHandl e5 = @ext r act _hs_can_yaw;

messageI D6 = ' 600' ; f uncHandl e6 = @ext r act _r adar_yaw;

messageI D7 = ' 71C' ; f uncHandl e7 = @ext r act _r adar_71C;

messageI D8 = ' 160' ; f uncHandl e8 = @ext r act _hs_can_r evgear;

% messageI D9 = ' 321' ; % f uncHandl e9 = @ext r act_ hs_can_accel erat i on;

% I ni t i al i ze i ndexes f or each vector

-

8/13/2019 149550

57/71

47

i ndex1 = 1; i ndex2 = 1; % i ndex3 = 1; % i ndex4 = 1; i ndex5 = 1; i ndex6 = 1; i ndex7 = 1; i ndex8 = 1; % i ndex9 = 1;

% Maxi mum DLC val uemaxDLC = 8;

% Li ne count er i n t he ent i r e CAN l ogl i neCounter = 0;

i ni t i al Capaci t y = 1000; capaci t yExt ensi on = 1000;

capaci t y1 = i ni t i al Capaci t y; capaci t y2 = i ni t i al Capaci t y; % capaci t y3 = i ni t i al Capaci t y; % capaci t y4 = i ni t i al Capaci t y; capaci t y5 = i ni t i al Capaci t y; capaci t y6 = i ni t i al Capaci t y; capaci t y7 = i ni t i al Capaci t y; capaci t y8 = i ni t i al Capaci t y; % capaci t y9 = i ni t i al Capaci t y;

Can. r adar71B = i ni t i al i zeRadar 71B( capaci t y1) ;

Can. hsSpeedOverGr ound = zer os( 1, capaci t y2) ; % Can. hsGear Lever Posi t i on = zeros( 1, capaci t y3) ;

% Can. hsSt eeri ngAngl eSi gn = zeros( 1, capaci t y4) ; % Can. hsSt eeri ngAngl e = zeros( 1, capaci t y4) ;

Can. hsYaw = zeros( 1, capaci t y5) ; Can. r adarYaw = zeros( 1, capaci t y6) ; Can. r adar71C = i ni t i al i zeRadar 71C( capaci t y7) ; Can. hsRevGear = zer os( 1, capaci t y8) ; % Can. hsAccel erati on = zeros( 1, capaci t y9) ;

whi l e ~f eof ( CANFi l e)

% Read t he next l i ne f r om t he f i l et hi sLi ne = f get l ( CANFi l e) ;

% % I ncr ement t he l i ne count er% l i neCounter = l i neCount er + 1; % di sp(spri ntf ( ' L i ne: %u' , l i neCounter) ) ;

-

8/13/2019 149550

58/71

48

[ t empToken, t empSt r i ng] = st r t ok( t hi sLi ne); t empTi me = st r 2doubl e( t empToken) ;

i f t empTi me >= 0[ t empToken, t empSt r i ng] = st r t ok( t empSt r i ng) ;

t empChannel = st r 2num( t empToken) ;

i f ~i sempty( t empChannel ) && i shex( st r t ok( t empSt r i ng) )

[ t empMessageI D, t empStr i ng] = st r t ok(t empSt r i ng);

% Get dat a bytes f r om t he cur r ent l i neDataByt es = get DataByt es( t hi sLi ne) ;

% I s i t Message 1?i f st r cmp( t empMessageI D, messageI D1)

i f i ndex1 == capaci t y1Can. r adar71B = extendRadar71B( Can. r adar71B, . . .

capaci t yExtensi on) ; capaci t y1 = capaci t y1 + capaci t yExt ensi on;

end[ Can. r adar71B. t ar getRangeAccel erat i on( i ndex1) , . . . Can. r adar71B. t arget RangeRate( i ndex1) , . . . Can. r adar71B. det ect i onSensor( i ndex1) , . . . Can. r adar71B. det ect i onSt atus( i ndex1) , . . . Can. r adar71B. t arget Range( i ndex1), . . . Can. r adar71B. t ar getMoti onCl ass(i ndex1) , . . . Can. r adar 71B. t ar get I d( i ndex1) , . . . Can. r adar71B. t ar getWi dt h( i ndex1) , . . .

Can. r adar71B. t arget Moveabl eSt atus( i ndex1), . . . Can. r adar71B. t ar getAngl e(i ndex1) ] . . . = f uncHandl e1( DataByt es) ;

i ndex1 = i ndex1 + 1;

% I s i t Message 2?el sei f st r cmp( t empMessageI D, messageI D2)

i f i ndex2 == capaci t y2Can. hsSpeedOver Gr ound = [Can. hsSpeedOver Gr ound . . .

zer os( 1, capaci t yExtensi on) ] ; capaci t y2 = capaci t y2 + capaci t yExt ensi on;

endCan. hsSpeedOver Gr ound( i ndex2) = f uncHandl e2( Dat aByt es) ; i ndex2 = i ndex2 + 1;

% % I s i t Message 3?% el sei f st r cmp(t empMessageI D, messageI D3) % i f i ndex3 == capaci t y3% Can. hsGearLever Posi t i on = [ Can. hsGear Lever Posi t i on . . . % zer os( 1, capaci t yExtensi on) ] ; % capaci t y3 = capaci t y3 + capaci t yExt ensi on; % end% Can. hsGear LeverPosi t i on( i ndex3) = f uncHandl e3( DataByt es) ;

-

8/13/2019 149550

59/71

49

% i ndex3 = i ndex3 + 1;

% I s i t Message 4?% el sei f st r cmp(t empMessageI D, messageI D4) % i f i ndex4 == capaci t y4% Can. hsSt eer i ngAngl eSi gn = [ Can. hsSt eer i ngAngl eSi gn . . . % zer os( 1, capaci t yExtensi on) ] ; % Can. hsSt eer i ngAngl e = [ Can. hsSt eeri ngAngl e . . . % zer os( 1, capaci t yExtensi on) ] ; % capaci t y4 = capaci t y4 + capaci t yExt ensi on; % end% [ Can. hsSt eer i ngAngl eSi gn( i ndex4) , . . .% Can. hsSt eer i ngAngl e( i ndex4) ] = f uncHandl e4( DataByt es) ; % i ndex4 = i ndex4 + 1;

% I s i t Message 5?el sei f st r cmp( t empMessageI D, messageI D5) i f i ndex5 == capaci t y5

Can. hsYaw = [ Can. hsYaw . . . zer os( 1, capaci t yExtensi on) ] ;

capaci t y5 = capaci t y5 + capaci t yExt ensi on; endCan. hsYaw( i ndex5) = f uncHandl e5( Dat aByt es) ; i ndex5 = i ndex5 + 1;

% I s i t Message 6?el sei f st r cmp( t empMessageI D, messageI D6)

i f i ndex6 == capaci t y6Can. r adarYaw = [ Can. r adarYaw . . .

zer os( 1, capaci t yExtensi on) ] ; capaci t y6 = capaci t y6 + capaci t yExt ensi on;

endCan. r adarYaw( i ndex6) = f uncHandl e6( DataByt es) ; i ndex6 = i ndex6 + 1;

% I s i t Message 7?el sei f st r cmp( t empMessageI D, messageI D7)

i f i ndex7 == capaci t y7Can. r adar71C = extendRadar71C( Can. r adar71C, . . .

capaci t yExtensi on) ; capaci t y7 = capaci t y7 + capaci t yExt ensi on;

end[ Can. r adar71C. t ar getRangeAccel erat i on( i ndex7) , . . . Can. r adar71C. t arget RangeRate( i ndex7) , . . . Can. r adar71C. det ect i onSensor( i ndex7) , . . . Can. r adar71C. det ect i onSt atus( i ndex7) , . . .

Can. r adar71C. t arget Range( i ndex7), . . . Can. r adar71C. t ar getMoti onCl ass(i ndex7) , . . . Can. r adar 71C. t ar get I d( i ndex7) , . . . Can. r adar71C. t ar getWi dt h( i ndex7) , . . . Can. r adar71C. t arget Moveabl eSt atus( i ndex7), . . . Can. r adar71C. t ar getAngl e(i ndex7) ] . . . = f uncHandl e7( DataByt es) ;

i ndex7 = i ndex7 + 1;

-

8/13/2019 149550

60/71

50

% I s i t Message 8?el sei f st r cmp( t empMessageI D, messageI D8)

i f i ndex8 == capaci t y8Can. hsRevGear = [ Can. hsRevGear . . .

zer os( 1, capaci t yExtensi on) ] ; capaci t y8 = capaci t y8 + capaci t yExt ensi on;

endCan. hsRevGear ( i ndex8) = f uncHandl e8( DataByt es) ; i ndex8 = i ndex8 + 1;