NN_Ch07

8/11/2019 NN_Ch07

Ming-Feng Yeh

CHAPTER 7

Supervised Hebbian Learning


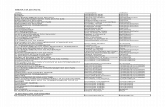

Objectives

The Hebb rule, proposed by Donald Hebb in 1949, was one of the first neural network learning laws.

A possible mechanism for synaptic modification in the brain.

Use linear algebra concepts to explain why Hebbian learning works.

The Hebb rule can be used to train neural networks for pattern recognition.


Hebb's Postulate

Hebbian learning (The Organization of Behavior)

"When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased."

[Figure: cells A and B]


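Linear Associator

[Figure: linear associator network. Input $\mathbf{p}$ ($R \times 1$), weight matrix $\mathbf{W}$ ($S \times R$), net input $\mathbf{n}$ ($S \times 1$), output $\mathbf{a}$ ($S \times 1$).]

$$\mathbf{a} = \mathbf{W}\mathbf{p}, \qquad a_i = \sum_{j=1}^{R} w_{ij}\, p_j$$

The linear associator is an example of a type of neural network called an associative memory.

The task of an associator is to learn $Q$ pairs of prototype input/output vectors: $\{\mathbf{p}_1, \mathbf{t}_1\}, \{\mathbf{p}_2, \mathbf{t}_2\}, \ldots, \{\mathbf{p}_Q, \mathbf{t}_Q\}$.

If $\mathbf{p} = \mathbf{p}_q$, then $\mathbf{a} = \mathbf{t}_q$, for $q = 1, 2, \ldots, Q$. If the input changes slightly, $\mathbf{p} = \mathbf{p}_q + \boldsymbol{\delta}$, then the output should change only slightly, $\mathbf{a} = \mathbf{t}_q + \boldsymbol{\varepsilon}$.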


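Hebb Learning Rule

If two neurons on either side of a synapse are activated simultaneously, the strength of the synapse will increase.

The connection (synapse) between input $p_j$ and output $a_i$ is the weight $w_{ij}$.

Unsupervised learning rule:

$$w_{ij}^{new} = w_{ij}^{old} + \alpha\, f_i(a_{iq})\, g_j(p_{jq}) \quad\Rightarrow\quad w_{ij}^{new} = w_{ij}^{old} + \alpha\, a_{iq}\, p_{jq}$$

Here $a_{iq}$ and $p_{jq}$ are the $i$th output element and the $j$th input element for the $q$th pattern, and $\alpha$ is the learning rate.

Not only do we increase the weight when $p_j$ and $a_i$ are both positive, but we also increase the weight when they are both negative.

Supervised learning rule:

$$w_{ij}^{new} = w_{ij}^{old} + t_{iq}\, p_{jq} \quad\Leftrightarrow\quad \mathbf{W}^{new} = \mathbf{W}^{old} + \mathbf{t}_q \mathbf{p}_q^T \qquad (\alpha = 1)$$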
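As a minimal sketch of the simplified unsupervised update $w_{ij}^{new} = w_{ij}^{old} + \alpha\, a_{iq}\, p_{jq}$, the whole weight matrix can be updated with one outer product. The function name `hebb_update` and the sample vectors are illustrative assumptions, not part of the slides; passing a target vector in place of `a` gives the supervised form.

```python
import numpy as np

def hebb_update(W, a, p, alpha=1.0):
    """One Hebb step: w_ij <- w_ij + alpha * a_i * p_j for every i, j."""
    return W + alpha * np.outer(a, p)

# Illustrative values (assumptions): 3 inputs, 2 outputs.
W = np.zeros((2, 3))
p = np.array([1.0, -1.0, 1.0])
a = np.array([1.0, -1.0])

W = hebb_update(W, a, p)
print(W)
# w[1, 1] pairs a negative output with a negative input, so it increases;
# w[0, 1] pairs a positive output with a negative input, so it decreases.
```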


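Supervised Hebb Rule

Assume that the weight matrix is initialized to zero and each of the $Q$ input/output pairs is applied once to the supervised Hebb rule (batch operation).

$$\mathbf{W} = \mathbf{t}_1\mathbf{p}_1^T + \mathbf{t}_2\mathbf{p}_2^T + \cdots + \mathbf{t}_Q\mathbf{p}_Q^T = \sum_{q=1}^{Q} \mathbf{t}_q\mathbf{p}_q^T$$

$$\mathbf{W} = \begin{bmatrix} \mathbf{t}_1 & \mathbf{t}_2 & \cdots & \mathbf{t}_Q \end{bmatrix} \begin{bmatrix} \mathbf{p}_1^T \\ \mathbf{p}_2^T \\ \vdots \\ \mathbf{p}_Q^T \end{bmatrix} = \mathbf{T}\mathbf{P}^T$$

where $\mathbf{T} = \begin{bmatrix} \mathbf{t}_1 & \mathbf{t}_2 & \cdots & \mathbf{t}_Q \end{bmatrix}$ and $\mathbf{P} = \begin{bmatrix} \mathbf{p}_1 & \mathbf{p}_2 & \cdots & \mathbf{p}_Q \end{bmatrix}$.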
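The equivalence between applying the supervised Hebb rule once per pair and the batch product $\mathbf{W} = \mathbf{T}\mathbf{P}^T$ can be checked numerically. The sketch below uses made-up prototype matrices (an assumption for illustration only), with the prototype inputs as columns of `P` and the targets as columns of `T`.

```python
import numpy as np

# Hypothetical prototype pairs: columns of P are inputs, columns of T are targets.
P = np.array([[1.0, 1.0],
              [-1.0, 1.0],
              [1.0, -1.0]])     # R = 3 inputs, Q = 2 patterns
T = np.array([[1.0, -1.0],
              [0.0, 2.0]])      # S = 2 outputs, Q = 2 patterns

# Incremental form: start from zero and add one outer product per pair.
W_inc = np.zeros((T.shape[0], P.shape[0]))
for q in range(P.shape[1]):
    W_inc += np.outer(T[:, q], P[:, q])

# Batch form: a single matrix product.
W_batch = T @ P.T

print(np.allclose(W_inc, W_batch))
```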


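Performance Analysis

Assume that the $\mathbf{p}_q$ vectors are orthonormal (orthogonal and unit length). Then

$$\mathbf{p}_q^T\mathbf{p}_k = \begin{cases} 1, & q = k \\ 0, & q \neq k \end{cases}$$

If $\mathbf{p}_k$ is input to the network, then the network output can be computed as

$$\mathbf{a} = \mathbf{W}\mathbf{p}_k = \left( \sum_{q=1}^{Q} \mathbf{t}_q\mathbf{p}_q^T \right) \mathbf{p}_k = \sum_{q=1}^{Q} \mathbf{t}_q \left( \mathbf{p}_q^T\mathbf{p}_k \right) = \mathbf{t}_k$$

If the input prototype vectors are orthonormal, the Hebb rule will produce the correct output for each input.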


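Performance Analysis

Assume that each $\mathbf{p}_q$ vector is unit length, but they are not orthogonal. Then

$$\mathbf{a} = \mathbf{W}\mathbf{p}_k = \sum_{q=1}^{Q} \mathbf{t}_q \left( \mathbf{p}_q^T\mathbf{p}_k \right) = \mathbf{t}_k + \underbrace{\sum_{q \neq k} \mathbf{t}_q \left( \mathbf{p}_q^T\mathbf{p}_k \right)}_{\text{error}}$$

The magnitude of the error will depend on the amount of correlation between the prototype input patterns.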


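Orthonormal Case

$$\mathbf{p}_1 = \begin{bmatrix} 0.5 \\ -0.5 \\ 0.5 \\ -0.5 \end{bmatrix},\ \mathbf{t}_1 = \begin{bmatrix} 1 \\ -1 \end{bmatrix}, \qquad \mathbf{p}_2 = \begin{bmatrix} 0.5 \\ 0.5 \\ -0.5 \\ -0.5 \end{bmatrix},\ \mathbf{t}_2 = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$$

$$\mathbf{W} = \mathbf{T}\mathbf{P}^T = \begin{bmatrix} 1 & 1 \\ -1 & 1 \end{bmatrix} \begin{bmatrix} 0.5 & -0.5 & 0.5 & -0.5 \\ 0.5 & 0.5 & -0.5 & -0.5 \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 & -1 \\ 0 & 1 & -1 & 0 \end{bmatrix}$$

$$\mathbf{W}\mathbf{p}_1 = \begin{bmatrix} 1 \\ -1 \end{bmatrix}, \qquad \mathbf{W}\mathbf{p}_2 = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$$

Success!!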
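The orthonormal example can be reproduced in a few lines. This sketch assumes the prototype vectors are $\mathbf{p}_1 = [0.5, -0.5, 0.5, -0.5]^T$, $\mathbf{t}_1 = [1, -1]^T$ and $\mathbf{p}_2 = [0.5, 0.5, -0.5, -0.5]^T$, $\mathbf{t}_2 = [1, 1]^T$, stored as columns of `P` and `T`.

```python
import numpy as np

# Columns are the prototype inputs p1, p2 and targets t1, t2 (assumed values).
P = np.array([[0.5, 0.5],
              [-0.5, 0.5],
              [0.5, -0.5],
              [-0.5, -0.5]])
T = np.array([[1.0, 1.0],
              [-1.0, 1.0]])

# Orthonormal inputs: the Gram matrix P^T P is the identity.
print(np.allclose(P.T @ P, np.eye(2)))

W = T @ P.T          # supervised Hebb rule, batch form
A = W @ P            # network outputs for both prototypes, column by column

print(W)
print(np.allclose(A, T))   # every prototype is recalled exactly
```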


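Not Orthogonal Case

$$\mathbf{p}_1 = \begin{bmatrix} 0.5774 \\ -0.5774 \\ -0.5774 \end{bmatrix},\ t_1 = -1, \qquad \mathbf{p}_2 = \begin{bmatrix} 0.5774 \\ 0.5774 \\ -0.5774 \end{bmatrix},\ t_2 = 1$$

$$\mathbf{W} = \mathbf{T}\mathbf{P}^T = \begin{bmatrix} -1 & 1 \end{bmatrix} \begin{bmatrix} 0.5774 & -0.5774 & -0.5774 \\ 0.5774 & 0.5774 & -0.5774 \end{bmatrix} = \begin{bmatrix} 0 & 1.1548 & 0 \end{bmatrix}$$

$$\mathbf{W}\mathbf{p}_1 = -0.6668, \qquad \mathbf{W}\mathbf{p}_2 = 0.6668$$

The outputs are close, but do not quite match the target outputs.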
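To see where the mismatch comes from, the sketch below recomputes this case and separates each output into its target plus the cross-talk term $\sum_{q \neq k} \mathbf{t}_q(\mathbf{p}_q^T\mathbf{p}_k)$. It assumes the prototypes are $[1, -1, -1]^T$ and $[1, 1, -1]^T$ normalized to unit length, with targets $-1$ and $1$.

```python
import numpy as np

# Unit-length but non-orthogonal prototypes (assumed values), stored as columns.
P = np.array([[1.0, 1.0],
              [-1.0, 1.0],
              [-1.0, -1.0]]) / np.sqrt(3.0)
T = np.array([[-1.0, 1.0]])

W = T @ P.T                  # supervised Hebb rule
A = W @ P                    # outputs for p1 and p2

# Gram matrix: ones on the diagonal, correlations p_q^T p_k off the diagonal.
G = P.T @ P
error = T @ (G - np.eye(2))  # cross-talk contributed by the other prototype

print(W)
print(A)
print(error)
print(np.allclose(A, T + error))
```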


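Solved Problem P7.2

[Figure: prototype patterns $\mathbf{p}_1$ and $\mathbf{p}_2$]

$$\mathbf{p}_1 = \begin{bmatrix} 1 & 1 & -1 & 1 & -1 & -1 \end{bmatrix}^T, \qquad \mathbf{p}_2 = \begin{bmatrix} -1 & 1 & 1 & 1 & 1 & -1 \end{bmatrix}^T, \qquad \mathbf{T} = \mathbf{P} = \begin{bmatrix} \mathbf{p}_1 & \mathbf{p}_2 \end{bmatrix}$$

i. $\mathbf{p}_1^T\mathbf{p}_2 = 0$, so the patterns are orthogonal. They are not orthonormal, since $\mathbf{p}_1^T\mathbf{p}_1 = \mathbf{p}_2^T\mathbf{p}_2 = 6$.

ii. The Hebb rule gives

$$\mathbf{W} = \mathbf{T}\mathbf{P}^T = \begin{bmatrix} 2 & 0 & -2 & 0 & -2 & 0 \\ 0 & 2 & 0 & 2 & 0 & -2 \\ -2 & 0 & 2 & 0 & 2 & 0 \\ 0 & 2 & 0 & 2 & 0 & -2 \\ -2 & 0 & 2 & 0 & 2 & 0 \\ 0 & -2 & 0 & -2 & 0 & 2 \end{bmatrix}$$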


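Solutions of Problem P7.2

iii. [Figure: test pattern $\mathbf{p}_t$]

$$\mathbf{p}_t = \begin{bmatrix} 1 & 1 & 1 & 1 & 1 & -1 \end{bmatrix}^T$$

$$\mathbf{a} = \mathrm{hardlims}(\mathbf{W}\mathbf{p}_t) = \mathrm{hardlims}\left( \begin{bmatrix} -2 \\ 6 \\ 2 \\ 6 \\ 2 \\ -6 \end{bmatrix} \right) = \begin{bmatrix} -1 \\ 1 \\ 1 \\ 1 \\ 1 \\ -1 \end{bmatrix} = \mathbf{p}_2$$

Hamming distance from $\mathbf{p}_t$ to $\mathbf{p}_1$: 2. Hamming distance from $\mathbf{p}_t$ to $\mathbf{p}_2$: 1. The network recalls the closer prototype.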
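A short sketch of this autoassociator follows. It assumes the prototype vectors $\mathbf{p}_1 = [1, 1, -1, 1, -1, -1]^T$ and $\mathbf{p}_2 = [-1, 1, 1, 1, 1, -1]^T$, the test pattern $\mathbf{p}_t = [1, 1, 1, 1, 1, -1]^T$, and a `hardlims` helper that maps zero to $+1$ (a convention chosen here, not stated in the slides).

```python
import numpy as np

def hardlims(n):
    """Symmetric hard limit: +1 where n >= 0, otherwise -1."""
    return np.where(n >= 0, 1, -1)

# Assumed bipolar prototype patterns and test pattern.
p1 = np.array([1, 1, -1, 1, -1, -1])
p2 = np.array([-1, 1, 1, 1, 1, -1])
pt = np.array([1, 1, 1, 1, 1, -1])

# Autoassociator: targets equal inputs, so W = p1 p1^T + p2 p2^T.
W = np.outer(p1, p1) + np.outer(p2, p2)

n = W @ pt
a = hardlims(n)

# Number of positions where the test pattern differs from each prototype.
hamming = [int(np.sum(pt != p)) for p in (p1, p2)]

print(n)
print(a, np.array_equal(a, p2))
print(hamming)
```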


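Pseudoinverse Rule

The matrix $\mathbf{P}$ can have an inverse only if it is square. Normally the $\mathbf{p}_q$ vectors (the columns of $\mathbf{P}$) will be independent, but $R$ (the dimension of $\mathbf{p}_q$, the number of rows) will be larger than $Q$ (the number of $\mathbf{p}_q$ vectors, the number of columns), so $\mathbf{P}$ has no inverse.

The weight matrix $\mathbf{W}$ that minimizes the performance index

$$F(\mathbf{W}) = \sum_{q=1}^{Q} \lVert \mathbf{t}_q - \mathbf{W}\mathbf{p}_q \rVert^2$$

is given by the pseudoinverse rule

$$\mathbf{W} = \mathbf{T}\mathbf{P}^+$$

where $\mathbf{P}^+$ is the Moore-Penrose pseudoinverse.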


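Moore-Penrose Pseudoinverse

The pseudoinverse of a real matrix $\mathbf{P}$ is the unique matrix $\mathbf{P}^+$ that satisfies

$$\mathbf{P}\mathbf{P}^+\mathbf{P} = \mathbf{P}$$

$$\mathbf{P}^+\mathbf{P}\mathbf{P}^+ = \mathbf{P}^+$$

$$\mathbf{P}^+\mathbf{P} = (\mathbf{P}^+\mathbf{P})^T$$

$$\mathbf{P}\mathbf{P}^+ = (\mathbf{P}\mathbf{P}^+)^T$$

When $R$ (the number of rows of $\mathbf{P}$) is greater than $Q$ (the number of columns of $\mathbf{P}$) and the columns of $\mathbf{P}$ are independent, the pseudoinverse can be computed by

$$\mathbf{P}^+ = (\mathbf{P}^T\mathbf{P})^{-1}\mathbf{P}^T$$

Note that we do NOT need to normalize the input vectors when using the pseudoinverse rule.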
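The four Moore-Penrose conditions and the closed form $(\mathbf{P}^T\mathbf{P})^{-1}\mathbf{P}^T$ can be checked numerically. The matrix below is a made-up example (an assumption) with more rows than columns and independent columns; `np.linalg.pinv` is used only as a cross-check.

```python
import numpy as np

# Hypothetical P with R = 4 rows, Q = 2 independent columns.
P = np.array([[1.0, 2.0],
              [0.0, 1.0],
              [1.0, 0.0],
              [2.0, 1.0]])

# Closed form, valid when R > Q and the columns are independent.
P_plus = np.linalg.inv(P.T @ P) @ P.T

conditions = [
    np.allclose(P @ P_plus @ P, P),            # P P+ P = P
    np.allclose(P_plus @ P @ P_plus, P_plus),  # P+ P P+ = P+
    np.allclose(P_plus @ P, (P_plus @ P).T),   # P+ P is symmetric
    np.allclose(P @ P_plus, (P @ P_plus).T),   # P P+ is symmetric
]
print(conditions)

# The closed form agrees with NumPy's SVD-based pseudoinverse.
print(np.allclose(P_plus, np.linalg.pinv(P)))
```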


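Example of Pseudoinverse Rule

$$\mathbf{p}_1 = \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix},\ t_1 = -1, \qquad \mathbf{p}_2 = \begin{bmatrix} 1 \\ 1 \\ -1 \end{bmatrix},\ t_2 = 1$$

$$\mathbf{P}^T = \begin{bmatrix} 1 & -1 & -1 \\ 1 & 1 & -1 \end{bmatrix}$$

$$\mathbf{P}^+ = (\mathbf{P}^T\mathbf{P})^{-1}\mathbf{P}^T = \begin{bmatrix} 3 & 1 \\ 1 & 3 \end{bmatrix}^{-1} \begin{bmatrix} 1 & -1 & -1 \\ 1 & 1 & -1 \end{bmatrix} = \begin{bmatrix} 0.25 & -0.5 & -0.25 \\ 0.25 & 0.5 & -0.25 \end{bmatrix}$$

$$\mathbf{W} = \mathbf{T}\mathbf{P}^+ = \begin{bmatrix} -1 & 1 \end{bmatrix} \begin{bmatrix} 0.25 & -0.5 & -0.25 \\ 0.25 & 0.5 & -0.25 \end{bmatrix} = \begin{bmatrix} 0 & 1 & 0 \end{bmatrix}$$

$$\mathbf{W}\mathbf{p}_1 = \begin{bmatrix} 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} = -1 = t_1, \qquad \mathbf{W}\mathbf{p}_2 = \begin{bmatrix} 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} 1 \\ 1 \\ -1 \end{bmatrix} = 1 = t_2$$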
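The example can be reproduced as follows, assuming the unnormalized prototypes $\mathbf{p}_1 = [1, -1, -1]^T$ and $\mathbf{p}_2 = [1, 1, -1]^T$ with targets $-1$ and $1$. For contrast, the sketch also computes the plain Hebb weights for the same unnormalized inputs, which overshoot the targets.

```python
import numpy as np

# Assumed prototypes as columns of P, targets as columns of T.
P = np.array([[1.0, 1.0],
              [-1.0, 1.0],
              [-1.0, -1.0]])
T = np.array([[-1.0, 1.0]])

# Pseudoinverse rule: W = T P+, with P+ = (P^T P)^-1 P^T.
P_plus = np.linalg.inv(P.T @ P) @ P.T
W_pinv = T @ P_plus

# Plain Hebb rule on the same (unnormalized) inputs, for comparison.
W_hebb = T @ P.T

print(P_plus)
print(W_pinv, W_pinv @ P)   # outputs match the targets
print(W_hebb, W_hebb @ P)   # outputs are scaled away from the targets
```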


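Autoassociative Memory

The linear associator using the Hebb rule is a type of associative memory ($\mathbf{t}_q \neq \mathbf{p}_q$). In an autoassociative memory the desired output vector is equal to the input vector ($\mathbf{t}_q = \mathbf{p}_q$).

An autoassociative memory can be used to store a set of patterns and then to recall these patterns, even when corrupted patterns are provided as input.

[Figure: three prototype patterns $(\mathbf{p}_1, \mathbf{t}_1)$, $(\mathbf{p}_2, \mathbf{t}_2)$, $(\mathbf{p}_3, \mathbf{t}_3)$ and the autoassociative network. Input $\mathbf{p}$ ($30 \times 1$), weight matrix $\mathbf{W}$ ($30 \times 30$), net input $\mathbf{n}$ ($30 \times 1$), output $\mathbf{a}$ ($30 \times 1$).]

$$\mathbf{W} = \mathbf{p}_1\mathbf{p}_1^T + \mathbf{p}_2\mathbf{p}_2^T + \mathbf{p}_3\mathbf{p}_3^T$$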


Corrupted & Noisy Versions

[Figure: Recovery of 50% Occluded Patterns]

[Figure: Recovery of Noisy Patterns]

[Figure: Recovery of 67% Occluded Patterns]


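Variations of Hebbian Learning

Many learning rules have some relationship to the Hebb rule.

The weight matrix of the Hebb rule can have very large elements if there are many prototype patterns in the training set.

Basic Hebb rule:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \mathbf{t}_q\mathbf{p}_q^T$$

Filtered learning: adding a decay term, so that the learning rule behaves like a smoothing filter, remembering the most recent inputs more clearly.

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \alpha\,\mathbf{t}_q\mathbf{p}_q^T - \gamma\,\mathbf{W}^{old} = (1 - \gamma)\,\mathbf{W}^{old} + \alpha\,\mathbf{t}_q\mathbf{p}_q^T, \qquad 0 < \gamma < 1$$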
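The effect of the decay term can be illustrated by presenting the same pair many times. In this sketch, which uses a made-up input/target pair and the hypothetical helper `filtered_hebb`, the basic Hebb weights grow without bound while the filtered weights settle near $(\alpha/\gamma)\,\mathbf{t}\mathbf{p}^T$.

```python
import numpy as np

def filtered_hebb(W, t, p, alpha=1.0, gamma=0.1):
    """Hebb step with decay: W <- (1 - gamma) * W + alpha * t p^T."""
    return (1.0 - gamma) * W + alpha * np.outer(t, p)

# Illustrative pair (assumption), presented repeatedly.
p = np.array([1.0, -1.0])
t = np.array([1.0])

W_basic = np.zeros((1, 2))
W_filt = np.zeros((1, 2))
for _ in range(200):
    W_basic = W_basic + np.outer(t, p)                    # basic Hebb rule
    W_filt = filtered_hebb(W_filt, t, p, alpha=1.0, gamma=0.1)

print(W_basic)   # grows linearly with the number of presentations
print(W_filt)    # approaches (alpha / gamma) * t p^T
```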


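Variations of Hebbian Learning

Delta rule: replacing the desired output with the difference between the desired output and the actual output. It adjusts the weights so as to minimize the mean square error.

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \alpha\,(\mathbf{t}_q - \mathbf{a}_q)\,\mathbf{p}_q^T$$

The delta rule can update the weights after each new input pattern is presented.

Basic Hebb rule:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \mathbf{t}_q\mathbf{p}_q^T$$

Unsupervised Hebb rule:

$$\mathbf{W}^{new} = \mathbf{W}^{old} + \alpha\,\mathbf{a}_q\mathbf{p}_q^T$$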
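A minimal sketch of the delta rule, updating after each pattern presentation, is shown below. The prototypes $[1, -1, -1]^T$ and $[1, 1, -1]^T$ with targets $-1$ and $1$, the learning rate of 0.2, and the epoch count are all assumptions chosen for illustration; starting from zero weights, the iteration ends up at weights that reproduce both targets.

```python
import numpy as np

# Assumed prototypes (columns of P) and targets (columns of T).
P = np.array([[1.0, 1.0],
              [-1.0, 1.0],
              [-1.0, -1.0]])
T = np.array([[-1.0, 1.0]])

alpha = 0.2                 # learning rate (assumption)
W = np.zeros((1, 3))

for epoch in range(100):
    for q in range(P.shape[1]):
        p = P[:, q]
        t = T[:, q]
        a = W @ p                                # actual output for this pattern
        W = W + alpha * np.outer(t - a, p)       # delta rule update

print(W)
print(W @ P)   # outputs after training
```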


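Solved Problem P7.6

[Figure: single-neuron network with bias. Input $\mathbf{p}$ ($2 \times 1$), weight matrix $\mathbf{W}$ ($1 \times 2$), bias $b$ ($1 \times 1$), net input $n$ ($1 \times 1$), output $a$ ($1 \times 1$).]

$$\mathbf{p}_1 = \begin{bmatrix} 1 & 1 \end{bmatrix}^T, \qquad \mathbf{p}_2 = \begin{bmatrix} 2 & 2 \end{bmatrix}^T$$

[Figure: $\mathbf{p}_1$ and $\mathbf{p}_2$ in the input plane with a boundary $\mathbf{W}\mathbf{p} = 0$ through the origin.]

i. Why is a bias required to solve this problem?

The decision boundary for the perceptron network is $\mathbf{W}\mathbf{p} + b = 0$. If there is no bias, then the boundary becomes $\mathbf{W}\mathbf{p} = 0$, which is a line that must pass through the origin. No decision boundary that passes through the origin could separate these two vectors.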


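Solved Problem P7.6

ii. Use the pseudoinverse rule to design a network with bias to solve this problem.

Treat the bias as another weight, with an input of 1.

$$\mathbf{p}_1' = \begin{bmatrix} 1 & 1 & 1 \end{bmatrix}^T, \qquad \mathbf{p}_2' = \begin{bmatrix} 2 & 2 & 1 \end{bmatrix}^T, \qquad t_1 = 1, \quad t_2 = -1$$

$$\mathbf{P} = \begin{bmatrix} 1 & 2 \\ 1 & 2 \\ 1 & 1 \end{bmatrix}, \qquad \mathbf{T} = \begin{bmatrix} 1 & -1 \end{bmatrix}$$

$$\mathbf{P}^+ = (\mathbf{P}^T\mathbf{P})^{-1}\mathbf{P}^T = \begin{bmatrix} -0.5 & -0.5 & 2 \\ 0.5 & 0.5 & -1 \end{bmatrix}$$

$$\mathbf{W}' = \mathbf{T}\mathbf{P}^+ = \begin{bmatrix} -1 & -1 & 3 \end{bmatrix}, \qquad \mathbf{W} = \begin{bmatrix} -1 & -1 \end{bmatrix}, \quad b = 3$$

[Figure: $\mathbf{p}_1$ and $\mathbf{p}_2$ separated by the decision boundary $\mathbf{W}\mathbf{p} + b = 0$.]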
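The bias-as-extra-weight construction can be sketched as follows, assuming $\mathbf{p}_1 = [1, 1]^T$, $\mathbf{p}_2 = [2, 2]^T$ with targets $1$ and $-1$. A row of ones is appended to the input matrix, the pseudoinverse rule is applied to the augmented matrix, and the last column of the result is read off as the bias. The `hardlims` helper and the variable names are illustrative assumptions.

```python
import numpy as np

def hardlims(n):
    """Symmetric hard limit: +1 where n >= 0, otherwise -1."""
    return np.where(n >= 0, 1, -1)

# Assumed prototypes (columns of P) and targets.
P = np.array([[1.0, 2.0],
              [1.0, 2.0]])
T = np.array([[1.0, -1.0]])

# Augment each input with a constant 1 so the bias becomes one more weight.
P_aug = np.vstack([P, np.ones((1, P.shape[1]))])

W_aug = T @ np.linalg.pinv(P_aug)      # pseudoinverse rule on augmented inputs
W, b = W_aug[:, :-1], W_aug[:, -1]     # split into weights and bias

print(W, b)
print(hardlims(W @ P + b))             # classification of p1 and p2
```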


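Solved Problem P7.7

Up to now, we have represented patterns as vectors by using $1$ and $-1$ to represent dark and light pixels, respectively. What if we were to use $1$ and $0$ instead? How should the Hebb rule be changed?

Bipolar $\{-1, 1\}$ representation: $\{\mathbf{p}_1, \mathbf{t}_1\}, \{\mathbf{p}_2, \mathbf{t}_2\}, \ldots, \{\mathbf{p}_Q, \mathbf{t}_Q\}$

Binary $\{0, 1\}$ representation: $\{\mathbf{p}_1', \mathbf{t}_1'\}, \{\mathbf{p}_2', \mathbf{t}_2'\}, \ldots, \{\mathbf{p}_Q', \mathbf{t}_Q'\}$

$$\mathbf{p}_q' = \tfrac{1}{2}\mathbf{p}_q + \tfrac{1}{2}\mathbf{1}, \qquad \mathbf{p}_q = 2\mathbf{p}_q' - \mathbf{1}$$

where $\mathbf{1}$ is a vector of ones.

We want the binary network to produce the same net input as the bipolar network:

$$\mathbf{W}'\mathbf{p}_q' + \mathbf{b} = \mathbf{W}\mathbf{p}_q$$

$$\mathbf{W}'\left( \tfrac{1}{2}\mathbf{p}_q + \tfrac{1}{2}\mathbf{1} \right) + \mathbf{b} = \tfrac{1}{2}\mathbf{W}'\mathbf{p}_q + \tfrac{1}{2}\mathbf{W}'\mathbf{1} + \mathbf{b} = \mathbf{W}\mathbf{p}_q$$

$$\mathbf{W}' = 2\mathbf{W}, \qquad \mathbf{b} = -\mathbf{W}\mathbf{1}$$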


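Binary Associative Network

[Figure: binary associative network. Input $\mathbf{p}'$ ($R \times 1$), weight matrix $\mathbf{W}'$ ($S \times R$), bias $\mathbf{b}$ ($S \times 1$), net input $\mathbf{n}$ ($S \times 1$), output $\mathbf{a}$ ($S \times 1$).]

$$\mathbf{n} = \mathbf{W}'\mathbf{p}' + \mathbf{b}, \qquad \mathbf{a} = \mathrm{hardlim}(\mathbf{W}'\mathbf{p}' + \mathbf{b})$$

$$\mathbf{W}' = 2\mathbf{W}, \qquad \mathbf{b} = -\mathbf{W}\mathbf{1}$$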
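The conversion $\mathbf{W}' = 2\mathbf{W}$, $\mathbf{b} = -\mathbf{W}\mathbf{1}$ can be checked by comparing the net inputs of the bipolar and binary networks on the same pattern. The bipolar prototypes and test input below are illustrative assumptions, and both hard-limit helpers map zero to their upper value by convention.

```python
import numpy as np

def hardlims(n):
    """Symmetric hard limit: +1 where n >= 0, otherwise -1."""
    return np.where(n >= 0, 1, -1)

def hardlim(n):
    """Hard limit: 1 where n >= 0, otherwise 0."""
    return np.where(n >= 0, 1, 0)

# Illustrative bipolar prototypes (assumption) and their Hebb autoassociator.
p1 = np.array([1, 1, -1, 1, -1, -1])
p2 = np.array([-1, 1, 1, 1, 1, -1])
W = np.outer(p1, p1) + np.outer(p2, p2)

# Convert the bipolar design to the binary representation.
ones = np.ones(W.shape[1], dtype=int)
W_bin = 2 * W
b = -W @ ones

p = np.array([1, 1, 1, 1, 1, -1])   # bipolar test input (assumption)
p_bin = (p + 1) // 2                # the same pattern written in {0, 1}

n_bipolar = W @ p
n_binary = W_bin @ p_bin + b        # identical net input by construction

a_bipolar = hardlims(n_bipolar)
a_binary = hardlim(n_binary)

print(n_bipolar, n_binary)
print(a_bipolar, a_binary)
```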