HadoopFileFormats_2016

-

Upload

jakub-wszolek -

Category

Documents

-

view

125 -

download

0

Transcript of HadoopFileFormats_2016

© 2013 Acxiom Corporation. All Rights Reserved. © 2013 Acxiom Corporation. All Rights Reserved.

Large-Scale ETL Processing -Hadoop File Formats

Jakub Wszolek ([email protected])Future3 - 2016

© 2013 Acxiom Corporation. All Rights Reserved.

ETL with Hadoop

• Querying and reporting on data swiftly requires a sophisticated storage format

• Systems flexible enough to load any delimited source

• Systems that are able to detect layout changes• System that can catch potential data issues• BI platform• Processes and services automation

2

© 2013 Acxiom Corporation. All Rights Reserved.

Hadoop analytics architecture

3

© 2013 Acxiom Corporation. All Rights Reserved.

Hadoop file formats• Key factor in BigData processing and query

performance, optimization• Schema evolution• Compression and splitability

• Optimized storage space utilization

• Data Processing:-Write performance-Partial Read-Full Read

4

© 2013 Acxiom Corporation. All Rights Reserved.

Available File Formats• Text/CSV (STORED AS TEXTFILE)• JSON• Sequence file (STORED AS SEQUENCEFILE)-Binary key/value pair format

• RC File -Record columnar format

• Avro/Trevni• Parquet• ORC-Optimized record columnar format

5

All the formats have general compression:• ZLIB (GZip) – tight compression (slower)• Snappy – some compression (faster)

© 2013 Acxiom Corporation. All Rights Reserved.

Row/Column oriented

6

© 2013 Acxiom Corporation. All Rights Reserved.

Sequence file

7

• Flat file consisting of binary key/value pairs• Extensively used in MapReduce as input/output

formats• Each record is a <key,value> pair • Key and Value needs to be a class of org.apache.hadoop.io.Text

• KEY = record name/filename+uniqe ID• VALUE = content as UTF-8 encoded String

• Hive has to read a full row and decompress it even if only one column is being requested.

© 2013 Acxiom Corporation. All Rights Reserved.

RCFile• RCFile (Record Columnar File) is a data

placement structure designed for MapReduce-based data warehouse systems

• RCFile stores table data in a flat file consisting of binary key/value pairs.

• RCFile stores the metadata of a row-split as the key part of a record, and all the data of a row split as the value part.

• hive --rcfilecat [--start=start_offset] [--length=len] [--verbose] fileName

8

© 2013 Acxiom Corporation. All Rights Reserved.

RCFile

9

© 2013 Acxiom Corporation. All Rights Reserved.

ORCFile• Column-oriented

• Lightweight indexes stored within the file-Ability to skip row groups that don’t pass predicate

filtering

• Includes basic statistics (min, max, sum, count) on columns

• Larger block size of 250 MB by default optimizes for large sequential reads on HDFS for more throughput and fewer files to reduce load on the namenode.

10

© 2013 Acxiom Corporation. All Rights Reserved.

ORCFile

11

© 2013 Acxiom Corporation. All Rights Reserved.

ORCFile Usage

12

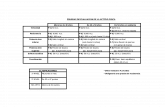

CREATE EXTERNAL TABLE testing_campaign (advertiser_id STRING, order_id STRING, order STRING, start_date STRING, end_date STRING, creative_library_enabled STRING, billing_invoice_code STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.orc.OrcSerde'STORED AS orc TABLEPROPERTIES (“orc.compress” = “ZLIB”)INPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcOutPutFormat' LOCATION “/user/test/…”

key default comments

orc.compress ZLIB NONE, ZLIB, Snappy

orc.compress.size 256 KB Number of bytes in each compression chunk

orc.stripe.size 64 MB Each ORC stripe is processed in one Map task

hive.exec.orc.default.block.size 250 MB Define the default file system block size for ORC files.

© 2013 Acxiom Corporation. All Rights Reserved.

Parquet• Column-oriented

• Parquet came out of a collaboration between Twitter and Cloudera in 2013

• Querying “wide” tables with many columns or performing aggregation operations like AVG() for the values of a single column

• Schema evolution-Can add columns at the end

13

© 2013 Acxiom Corporation. All Rights Reserved.

File size compression

14

© 2013 Acxiom Corporation. All Rights Reserved.

What is AVRO?• Data serialization framework

• Language-neutral data serialization system (SerDeexample)- Data serialization is a mechanism to translate data in computer

environment (like memory buffer, data structures or object state) into binary or textual form that can be transported over network or stored in some persistent storage media.

• Develop by Doug Cutting, the father of Hadoop (2009)• Avro is a preferred tool to serialize data in Hadoop

• Avro uses JSON format to declare the data structures. Presently, it supports languages such as Java, C, C++, C#, Python, and Ruby.

© 2013 Acxiom Corporation. All Rights Reserved.

Features of Avro

• Avro creates binary structured format that is

both compressible and splittable- used as the input to Hadoop MapReduce jobs.

• Avro provides rich data structures- record that contains an array, an enumerated type, and a sub record.

• Avro creates a self-describing file named Avro Data File

- stores data along with its schema in the metadata section

© 2013 Acxiom Corporation. All Rights Reserved.

SerDe (JSON/Parquet/AVRO…) –serialization example

CREATE TABLE testing_campaign

PARTITIONED BY (ingestion_dt STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.avro.AvroSerDe’

STORED as INPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerInputFormat'OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerOutputFormat’

LOCATION '/user/...'TBLPROPERTIES ('avro.schema.url'='hdfs://cloudera.loc/user/.../schema.avsc');

© 2013 Acxiom Corporation. All Rights Reserved.

Schema in AVRO{"type" : "record","name" : "TestingData","doc" : "Schema generated by jwszol","fields" : [ {"name" : "name","type" : [ "null", "string" ],"doc" : "Type inferred from 'jwszol'"

}, {"name" : "value","type" : ["null", "string" ],"doc" : "Type inferred from '100'"

}, {"name" : "id","type" : [ "null", "string" ],"doc" : "Type inferred from '1'"

}, {"name" : "size","type" : [ "null", "string" ],"doc" : "Type inferred from '123'"

} ]}

© 2013 Acxiom Corporation. All Rights Reserved.

AVRO file

© 2013 Acxiom Corporation. All Rights Reserved.

Tested use cases• Simple conversion : Text delimited to AVRO –

PASSED• Field was missing in the data file – PASSED• Fields being rearranged in the data file – PASSED• Field name changes in data file (continue to map it

into the old field name) – PASSED• Define optional and required fields – PASSED• Basic transformation on the data (reformat of time

stamp) - PASSED• Missing whole column in text file - PASSED

© 2013 Acxiom Corporation. All Rights Reserved.

Python code

Simple schema example:

Serialization:

© 2013 Acxiom Corporation. All Rights Reserved.

API• Java supported:

https://avro.apache.org/docs/1.7.7/gettingstartedjava.html

• Python supported: https://avro.apache.org/docs/1.7.6/gettingstartedpython.html

• AVRO tools (CDH5 embedded): https://mvnrepository.com/artifact/org.apache.avro/avro-tools/1.7.4

© 2013 Acxiom Corporation. All Rights Reserved.

What’s the best choice?

• For writing

- Is the data format compatible with your processing and querying tools?

-Does your schema evolve over time?-Saving data types-Speed concerns (AVRO/Parquet/ORCFile needs

additional parsing to format the data – increase the overall time)

23

© 2013 Acxiom Corporation. All Rights Reserved.

Writing results

24

0

10

20

30

40

50

60

70

Text Avro Parquet Sequence ORC

Tim

e in

sec

onds

1* - hive 1.1 Cloudera (5.4)

0

100

200

300

400

500

600

700

Text Avro Parquet Sequence ORC

Tim

e in

sec

onds

2* - Hive 1.1 Cloudera (5.4)

1* - 10 milions rows, 10 columns2* - 4 milions rows, 1000 columns

© 2013 Acxiom Corporation. All Rights Reserved.

What’s the best choice?

• For reading

-Compression – regardless the format increases query speed time

-Column specific queries: group of columns (Parquet/ORC)

-Parquet and ORC optimize read performance at the expense of write performance

25

© 2013 Acxiom Corporation. All Rights Reserved.

Reading results

26

0

10

20

30

40

50

60

70

Query 1 (0 conditions) Query 2 (5 conditions) Query 3 (10 conditions)

time

in s

econ

s

1* - hive 1.1 Cloudera (5.4)

Text

Avro

Parquet

Sequence

ORC

0

50

100

150

200

250

Query 1 (0 conditions) Query 2 (5 conditions) Query 3 (10 conditions) Query 4 (20 conditions)

Tim

e in

sec

onds

2* - hive 1.1 Cloudera (5.4)

Text

Avro

Parquet

Sequence

ORC

1* - 10 milions rows, 10 columns2* - 4 milions rows, 1000 columns

© 2013 Acxiom Corporation. All Rights Reserved.

Reading results (Impala)

27

0

1

2

3

4

5

6

7

8

Query 1 (0 conditions) Query 2 (5 conditions) Query 3 (10 conditions)

Tim

e in

sec

onds

1* - Impala CDH 5.4

Text

Avro

Parquet

Sequence

0

5

10

15

20

25

30

Query 1 (0 conditions) Query 2 (5 conditions) Query 3 (10 conditions) Query 4 (20 conditions)

Tim

e in

sec

onds

2* - Impala CDH 5.4

Text

Avro

Parquet

Sequence

1* - 10 milions rows, 10 columns2* - 4 milions rows, 1000 columns

© 2013 Acxiom Corporation. All Rights Reserved.

ACXIOM - Marketing Analytics Environment

• Bring together online and offline data to get a complete, 360-degree view of your customer

• Actionable recommendations on how to adjust your digital marketing to reach your goals

• Measure and analyze your marketing spend across all channels

• Data loads (Aug – Dec 2015) – 268 980,402,366 records

28

http://www.acxiom.com/marketing-analytics-environment/

© 2013 Acxiom Corporation. All Rights Reserved.

References• http://www.acxiom.com/marketing-analytics-environment/

• https://www.datanami.com/2014/09/01/five-steps-to-running-etl-on-hadoop-for-web-companies/

• http://slideplayer.com/slide/6666437/

• https://cwiki.apache.org/confluence/display/Hive/RCFile

• http://axbigdata.blogspot.com/2014/05/hive.html

• http://www.slideshare.net/Hadoop_Summit/innovations-in-apache-hadoop-mapreduce-pig-hive-for-improving-query-performance

• http://www.semantikoz.com/blog/wp-content/uploads/2014/02/Orc-File-Layout.png

• http://www.slideshare.net/StampedeCon/choosing-an-hdfs-data-storage-format-avro-vs-parquet-and-more-stampedecon-2015

• http://www.slideshare.net/Hadoop_Summit/w-1205p230-aradhakrishnan-v3

29

© 2013 Acxiom Corporation. All Rights Reserved. © 2013 Acxiom Corporation. All Rights Reserved.

Questions?

Thank you!

twitter.com/jwszol