Computer Vision, Human-Computer ......task such as background...


Sugano Laboratory [Computer Vision, HCI], Institute of Industrial Science, Center for Socio-Global Informatics

Interactive Visual Intelligence: http://ivi.iis.u-tokyo.ac.jp/

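Computer Vision, Human-Computer Interaction Ee410

Graduate School of Information Science and Technology, Department of Information and Communication Engineering

With computer vision at its core, our laboratory studies intelligent systems from a holistic perspective that includes interaction with users, ranging from fundamental methods for image recognition to system proposals for applications and user evaluations.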

[Figure labels: Error compensation; Training; Gaze target stimuli; Aggregation; Original estimation; Attention map]

2 Y. Miyauchi et al.

[Figure 1 labels: 3D models; Normal map rendering; Input; Latent appearance vector $z$; Images generated by SCGAN]

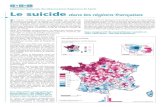

Fig. 1: The proposed shape-conditioned image generation network (SCGAN) outputs images of an arbitrary object with the same shape as the input normal map, while controlling the image appearances via latent appearance vectors.

been used to modify synthetic data to more realistic training images, and it has been shown that such data can improve the performance of learned estimators [27,28,2]. These methods use synthetic data as a condition on image generation so that output images remain visually similar to the input images and therefore keep their original ground-truth labels. In this sense, the aforementioned limitation of conditional image generation severely restricts the application of such training data synthesis approaches. If the method allows for more fine-grained control of object shapes, poses, and appearances, it can open a way for generating training data for, e.g., generic object recognition and pose estimation.

In this work, we propose SCGAN (Shape-Conditioned GAN), a GAN architecture for generating images conditioned by input 3D shapes. As illustrated in Fig. 1, the goal of our method is to provide a way to generate images of arbitrary objects with the same shape as the input normal map. The image appearance is explicitly modeled as a latent vector, which can be either randomly assigned or extracted from actual images. Since we cannot always expect paired training data of normal maps and images, the overall network is trained using the cycle consistency loss [39] between the original and back-reconstructed images. In addition, the proposed architecture employs an extra discriminator network to examine whether the generated appearance vector follows the assumed distribution. Unlike prior work using a similar idea for feature learning [7], this appearance discriminator allows us to not only control the image appearance, but also to improve the quality of generated images. We demonstrate the effectiveness of our method in comparison with baseline approaches through

Gaze Estimation and User Understanding

[Figure 2 labels: input 448x448; Conv & Max Pooling (96x110x110, 96x55x55, 256x13x13); Spatial weights (three 1x1 Conv+ReLU layers; 256x13x13, 256x13x13, 13x13); U, V, W; Average weight map; Fully connected (4096, 4096); Regression (2)]

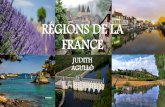

Figure 2: Spatial weights CNN for full-face appearance-based gaze estimation. The input image is passed through multiple convolutional layers to generate a feature tensor $U$. The proposed spatial weights mechanism takes $U$ as input to generate the weight map $W$, which is applied to $U$ using element-wise multiplication. The output feature tensor $V$ is fed into the following fully connected layers to output, depending on the task, the final 2D or 3D gaze estimate.

spatial weighting is two-fold. First, there could be some image regions that do not contribute to the gaze estimation task such as background regions, and activations from such regions have to be suppressed for better performance. Second, more importantly, compared to the eye region that is expected to always contribute to the gaze estimation performance, activations from other facial regions are expected to be subtle. The role of facial appearance also depends on various input-dependent conditions such as head pose, gaze direction and illumination, and these activations thus have to be properly enhanced according to the input image appearance. Although, theoretically, such differences can be learned by a normal network, we opted to introduce a mechanism that forces the network more explicitly to learn and understand that different regions of the face can have different importance for estimating gaze for a given test sample. To implement this stronger supervision, we used the concept of the three $1 \times 1$ convolutional layers plus rectified linear unit layers from [28] as a basis and adapted it to our full-face gaze estimation task. Specifically, instead of generating multiple heatmaps (one to localise each body joint) we only generated a single heatmap encoding the importance across the whole face image. We then performed an element-wise multiplication of this weight map with the feature map of the previous convolutional layer. An example weight map is shown in Figure 2, averaged from all samples from the MPIIGaze dataset.

4.1. Spatial Weights Mechanism

The proposed spatial weights mechanism includes three additional convolutional layers with filter size $1 \times 1$ followed by a rectified linear unit layer (see Figure 2). Given activation tensor $U$ of size $N \times H \times W$ as input from the convolutional layer, where $N$ is the number of feature channels and $H$ and $W$ are height and width of the output, the spatial weights mechanism generates a $H \times W$ spatial weight matrix $W$.

Weighted activation maps are obtained from element-wise multiplication of $W$ with the original activation $U$ with

$$V_c = W \odot U_c, \qquad (1)$$

where $U_c$ is the $c$-th channel of $U$, and $V_c$ corresponds to the weighted activation map of the same channel. These maps are stacked to form the weighted activation tensor $V$, and are fed into the next layer. Different from the spatial dropout [28], the spatial weights mechanism weights the information continuously and keeps the information from different regions. The same weights are applied to all feature channels, and thus the estimated weights directly correspond to the facial region in the input image.
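The forward pass described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the helper names (`conv1x1_relu`, `spatial_weights`), the hidden channel width of 4, and the toy tensor sizes are hypothetical and chosen for readability; the text only states that three $1 \times 1$ convolution plus ReLU layers produce a single $H \times W$ map that multiplies every channel of $U$.

```python
import numpy as np

def conv1x1_relu(x, weight, bias):
    """1x1 convolution followed by ReLU.

    x: (C_in, H, W), weight: (C_out, C_in), bias: (C_out,)
    A 1x1 convolution is a per-pixel linear map over channels.
    """
    y = np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]
    return np.maximum(y, 0.0)

def spatial_weights(U, params):
    """Return (V, W) with W of shape (H, W) and V_c = W * U_c for every channel c."""
    h = U
    for weight, bias in params:
        h = conv1x1_relu(h, weight, bias)
    W = h[0]                 # last layer has one output channel (assumed), so W is (H, W)
    V = W[None, :, :] * U    # the same map is broadcast over all N channels
    return V, W

# Toy sizes (assumptions for illustration only).
rng = np.random.default_rng(0)
N, H, Wd = 8, 5, 5
U = rng.standard_normal((N, H, Wd))
params = [
    (rng.normal(0.0, 0.1, (4, N)), np.full(4, 0.1)),
    (rng.normal(0.0, 0.1, (4, 4)), np.full(4, 0.1)),
    (rng.normal(0.0, 0.1, (1, 4)), np.full(1, 1.0)),
]
V, W = spatial_weights(U, params)
```

Because the final ReLU keeps the map non-negative and the map is shared across channels, each entry of `W` can be read as the importance of one spatial location of the feature tensor.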

During training, the filter weights of the first two convolutional layers are initialized randomly from a Gaussian distribution with 0 mean and 0.01 variance, and a constant bias of 0.1. The filter weights of the last convolutional layer are initialized randomly from a Gaussian distribution with 0 mean and 0.001 variance, and a constant bias of 1.
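As a rough sketch of this initialization, the snippet below draws the three sets of $1 \times 1$ filter weights with NumPy. Two things are assumptions rather than statements from the text: the value 0.01 for the first two layers is read as a variance (by analogy with the "0.001 variance" given for the last layer), and the hidden width of 256 channels and the helper name `init_conv1x1` are hypothetical. Note that NumPy's `normal` takes a standard deviation, so the variance has to be square-rooted.

```python
import numpy as np

def init_conv1x1(rng, c_out, c_in, variance, bias):
    """Gaussian filter weights with zero mean and the given variance, plus a constant bias."""
    std = np.sqrt(variance)  # Generator.normal expects a standard deviation
    weight = rng.normal(0.0, std, size=(c_out, c_in))
    return weight, np.full(c_out, bias)

rng = np.random.default_rng(0)
hidden = 256  # assumed channel width, for illustration only

w1, b1 = init_conv1x1(rng, hidden, hidden, variance=0.01, bias=0.1)
w2, b2 = init_conv1x1(rng, hidden, hidden, variance=0.01, bias=0.1)
w3, b3 = init_conv1x1(rng, 1, hidden, variance=0.001, bias=1.0)
```

With a bias of 1 and small weights on the last layer, the weight map starts out close to 1 everywhere, so the mechanism initially passes the activations through almost unchanged.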

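Gradients with respect to $U$ and $W$ are

$$\frac{\partial V}{\partial U} = \partial W, \qquad (2)$$

and

$$\frac{\partial V}{\partial W} = \frac{1}{N} \sum_{c}^{N} \partial U_c. \qquad (3)$$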

The gradient with respect to $W$ is normalised by the total number of the feature maps $N$, since the weight map $W$ affects all the feature maps in $U$ equally.
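One way to read these gradients in code is shown below. It is a sketch of an interpretation, not the authors' implementation: given an upstream gradient `dV` with the same shape as $V$, the gradient passed back to each channel of $U$ is scaled by $W$, and the gradient for $W$ sums the per-channel contributions and divides by $N$. The function name `spatial_weights_backward` is hypothetical, and the sketch treats $W$ as an independent input, ignoring that $W$ is itself computed from $U$ by the $1 \times 1$ layers.

```python
import numpy as np

def spatial_weights_backward(dV, U, W):
    """Backward pass for V_c = W * U_c.

    dV, U: (N, H, W); W: (H, W)
    Returns gradients for U and W; the latter is normalised by N.
    """
    n_channels = U.shape[0]
    dU = dV * W[None, :, :]                  # each channel is scaled by the shared map
    dW = (dV * U).sum(axis=0) / n_channels   # sum over channels, normalised by N
    return dU, dW

rng = np.random.default_rng(0)
N, H, Wd = 4, 3, 3
U = rng.standard_normal((N, H, Wd))
W = rng.random((H, Wd))
dV = rng.standard_normal((N, H, Wd))
dU, dW = spatial_weights_backward(dV, U, W)
```

Without the division by $N$, the update for the weight map would grow with the number of feature channels, which is the effect the normalisation is meant to avoid.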

4.2. Implementation Details

As the baseline CNN architecture we used AlexNet [14] that consists of five convolutional layers and two fully connected layers. We trained an additional linear regression


Scene Recognition with Computer Vision

Applications to Interactive Systems

Gaze-guided Image Classification for Reflecting Perceptual Class Ambiguity

Tatsuya Ishibashi, Yusuke Sugano, Yasuyuki Matsushita
Graduate School of Information Science and Technology, Osaka University

{ishibashi.tatsuya, sugano, yasumat}@ist.osaka-u.ac.jp

ABSTRACT

Despite advances in machine learning and deep neural networks, there is still a huge gap between machine and human image understanding. One of the causes is the annotation process used to label training images. In most image categorization tasks, there is a fundamental ambiguity between some image categories and the underlying class probability differs from very obvious cases to ambiguous ones. However, current machine learning systems and applications usually work with discrete annotation processes and the training labels do not reflect this ambiguity. To address this issue, we propose a new image annotation framework where labeling incorporates human gaze behavior. In this framework, gaze behavior is used to predict image labeling difficulty. The image classifier is then trained with sample weights defined by the predicted difficulty. We demonstrate our approach’s effectiveness on four-class image classification tasks.

CCS Concepts

•Human-centered computing → Human computer interaction (HCI); •Computing methodologies → Computer vision;

Author Keywords

Eye tracking; Machine learning; Computer vision

INTRODUCTION

Machine learning-based computer vision methods have been growing rapidly and the state-of-the-art algorithms even outperform humans at some image recognition tasks [9, 10]. However, their performance is still lower than humans’ when the training data is limited or the task is complex [1]. Further, the errors that machines make are often different from the ones humans make [12].

One approach to overcome this difficulty is to incorporate humans in the loop via human-computer interaction [4, 6, 7]. Some prior examples include using human brain activities to infer perceptual class ambiguities in image recognition and

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the owner/author(s).
UIST ’18 Adjunct, October 14–17, 2018, Berlin, Germany

© 2018 Copyright held by the owner/author(s). ACM ISBN 978-1-4503-5949-8/18/10. DOI: https://doi.org/10.1145/3266037.3266090

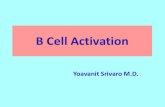

[Figure 1 labels: Gaze and Mouse Features; Gaze SVM; Perceptual Class Ambiguity; Image Features; Image SVM]

Figure 1. Overview of the proposed method. The first gaze SVM is trained using gaze and mouse features during image annotation and the second image SVM is trained so that it behaves similarly to the gaze SVM and reflects the perceptual class ambiguity for humans.

assigning difficulty-based sample weights to the training images [8, 15]. However, while gaze is also known to reflect internal states of humans, is much cheaper to measure than brain activity, and has been used as a cue to infer user properties related to visual perception [3, 14, 16, 17], there has not been much research on using gaze data for guiding machine learning processes.

This work proposes an approach for gaze-guided image classification that better reflects the class ambiguities in human perception. An overview is given in Fig. 1. First, we collect gaze and mouse interaction data when participants work on a visual search and annotation task. We train a support vector machine (SVM) [2] using features extracted from these gaze and mouse data and use its decision function to infer perceptual class ambiguities when assigning the target image classes. The ambiguity scores are used to assign sample weights for training a second SVM with image features. This results in an image classifier that behaves similarly to the gaze-based classifier.

GAZE-GUIDED IMAGE RECOGNITION

The basic idea of our method is that the behavior of the image annotator reflects the difficulty of assigning class labels. Gaze behavior on annotated images is more distinctive if the image clearly belongs to the target or non-target classes, while it becomes more indistinctive on ambiguous cases. Therefore, the decision function of an SVM classifier trained on gaze and mouse features can be used to estimate the underlying perceptual class ambiguity of the training images.
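The idea of turning the gaze classifier's decision function into sample weights can be sketched as follows. Everything specific here is an assumption made for illustration: the text does not give the mapping from decision values to weights, so `ambiguity_weights` simply clips the absolute decision value to a hypothetical range [0.1, 1]; the gaze decision values and image features are simulated; and the second classifier is a small linear SVM trained by subgradient descent on a sample-weighted hinge loss rather than the SVM implementation cited as [2].

```python
import numpy as np

def ambiguity_weights(decision_values, floor=0.1):
    """Map gaze-SVM decision values to sample weights in [floor, 1] (assumed mapping).

    Samples far from the decision boundary (unambiguous) get weight 1;
    samples near the boundary (ambiguous) get a small weight.
    """
    margin = np.abs(np.asarray(decision_values, dtype=float))
    return np.clip(margin, floor, 1.0)

def train_weighted_linear_svm(X, y, sample_weight, lam=0.01, lr=0.1, epochs=500):
    """Subgradient descent on lam/2 * ||w||^2 + mean(weight_i * hinge_i); y in {-1, +1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        active = y * (X @ w + b) < 1.0      # samples violating the margin
        coef = sample_weight * active * y   # weighted hinge subgradient scale
        w -= lr * (lam * w - coef @ X / n)
        b -= lr * (-coef.sum() / n)
    return w, b

# Simulated stand-ins for image features and gaze-SVM outputs (illustrative only).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(2.0, 1.0, (20, 2)), rng.normal(-2.0, 1.0, (20, 2))])
y = np.concatenate([np.ones(20), -np.ones(20)])
gaze_decision = y * rng.uniform(0.0, 2.0, size=40)

weights = ambiguity_weights(gaze_decision)
w, b = train_weighted_linear_svm(X, y, weights)
accuracy = np.mean(np.sign(X @ w + b) == y)
```

In practice, an off-the-shelf SVM that accepts per-sample weights could replace the hand-written trainer; for example, scikit-learn's `SVC.fit` takes a `sample_weight` argument.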

Our method uses gaze data recorded during a visual search and annotation task on an image dataset with pre-defined image

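Shape-constrained image generation

Semantic segmentation

Collective gaze estimation on public displays

Image recognition using gaze information

Information visualization for interactive sound recognition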

[Figure labels: panels (a) Face & facial landmark detection, (b) Full-face gaze estimation, (c) Clustering, (d) Target cluster selection, (e), (f), (g); other labels: Training images; Test image; Face & facial landmarks; Gaze locations p; Feature extraction; 4096-dimensional CNN feature f; Clustering result; Positive/negative labels; Eye contact detector]

Eye contact detection in everyday living spaces

Gaze estimation with large-scale deep learning
