
ON OPTIMIZATION OF THE MEASUREMENT MATRIX FOR COMPRESSIVE SENSING

Vahid Abolghasemi$^{1}$, Saideh Ferdowsi$^{1}$, Bahador Makkiabadi$^{1,2}$, and Saeid Sanei$^{1}$

$^{1}$ Centre of Digital Signal Processing, School of Engineering, Cardiff University, Cardiff, CF24 3AA, UK

$^{2}$ Electrical Engineering Department, Islamic Azad University, Ashtian Branch, Ashtian, Iran

{abolghasemiv, ferdowsis, makkiabadib, saneis}@cf.ac.uk

ABSTRACT

In this paper the problem of Compressive Sensing (CS) is addressed. The focus is on estimating a proper measurement matrix for compressive sampling of signals. It is now well known that a small mutual coherence between the measurement matrix and the representing matrix is a requirement for successful CS. Therefore, designing measurement matrices with smaller coherence is desired. In this paper a gradient descent method is proposed to optimize the measurement matrix. The proposed algorithm is designed to minimize the mutual coherence, which is described in terms of the absolute off-diagonal elements of the corresponding Gram matrix. The optimization is mainly applied to random Gaussian matrices, which are common in CS. An extended approach is also presented for signals that are sparse with respect to redundant dictionaries. Our experiments yield promising results and show higher reconstruction quality for the proposed method compared to both the unoptimized case and previous methods.

1. INTRODUCTION

Compressive Sensing (CS) [1] [2] is one of the recent fields of interest in the signal and image processing communities. It has been utilized in many different applications, from biomedical signal and image processing [3] to communication [4] and astronomy [5]. The core idea in CS is a novel sampling technique which, under certain conditions, can lead to a smaller rate compared to the conventional Shannon sampling rate. The key requirement for successful CS is compressibility, or more precisely sparsity, of the input signal. A sparse signal has a small number of active (nonzero) components compared to its total length. This property can either exist in the sampling domain of the signal or with respect to another basis such as Fourier, wavelet, curvelet or any other basis. A CS scenario mainly consists of two crucial parts: encoding (sampling) and decoding (recovery). We formally explain both parts in this section, but the focus in this work is on the first part, where we try to improve the sensing process, which consequently affects the reconstruction performance.

Let $x \in \mathbb{R}^n$ be a sparse signal, meaning that it has at most $s \ll n$ nonzero elements. We now want to find $p$ linear measurements termed as $y = \Phi x$, where $s < p < n$, and $\Phi \in \mathbb{R}^{p \times n}$ is the measurement matrix. Obviously, $p = s$ is the best possible choice. However, since we are not aware of the locations and the values of the nonzero elements when reconstructing $x$ from $y$, a larger $p$ is needed to guarantee the recovery of $x$ [6]. In addition, the structure of $\Phi$ is not arbitrary, and should be chosen according to some specific rules.

For instance, it has been proved [6] that for a $\Phi$ with i.i.d. Gaussian entries with zero mean and variance $1/p$, the bound $p \geq C \cdot s \log(n/p)$ is achievable and can guarantee the exact reconstruction of $x$ with overwhelming probability$^{1}$. The same condition can be applied to binary matrices with independent entries taking values $\pm 1/\sqrt{p}$. As another example, we mention Fourier ensembles, which are obtained by selecting $p$ renormalized rows from the $n \times n$ discrete Fourier transform, with the bound $p \geq C \cdot s (\log n)^6$.
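The sensing step described above can be illustrated with a minimal NumPy sketch. The function names (`gaussian_matrix`, `binary_matrix`, `sparse_signal`) and the sizes used are illustrative assumptions, not part of the original work.

```python
import numpy as np

def gaussian_matrix(p, n, rng):
    """p x n matrix with i.i.d. zero-mean Gaussian entries of variance 1/p."""
    return rng.normal(0.0, 1.0 / np.sqrt(p), size=(p, n))

def binary_matrix(p, n, rng):
    """p x n matrix with independent entries equal to +1/sqrt(p) or -1/sqrt(p)."""
    signs = rng.choice([-1.0, 1.0], size=(p, n))
    return signs / np.sqrt(p)

def sparse_signal(n, s, rng):
    """Length-n signal with s nonzero entries at random locations."""
    x = np.zeros(n)
    support = rng.choice(n, size=s, replace=False)
    x[support] = rng.standard_normal(s)
    return x

# Illustrative sizes only (assumed for this sketch).
rng = np.random.default_rng(0)
n, p, s = 120, 40, 8
x = sparse_signal(n, s, rng)
Phi = gaussian_matrix(p, n, rng)
y = Phi @ x  # p linear measurements of the sparse signal
```

Both constructions are non-adaptive: the matrix is drawn without looking at the signal.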

Now, assume that $x$ is not sparse in its present form (e.g. time domain), but that it can be sparsely represented in another basis (e.g. Fourier, wavelet, etc.). Mathematically speaking, we denote the sparse version of $x$ by $\alpha$, satisfying $x = \Psi\alpha$, where $\Psi \in \mathbb{R}^{n \times m}$ is called the representing (or sparsifying) dictionary (or basis). $\Psi$ is called a complete dictionary if $n = m$; otherwise $n < m$ and we call it overcomplete (or redundant). For consistency in notation we always consider complete dictionaries throughout the paper unless stated otherwise. The extension to overcomplete dictionaries is easy though. In order to achieve successful CS, we must choose a $\Phi$ having the least possible coherence with $\Psi$. In other words, $D := \Phi\Psi$ is desired to have columns with small correlations [7]. This property is termed mutual coherence, and is usually denoted by $\mu$. More details about $\mu$ will be discussed later in this paper. The mutual coherence also affects the bound for $p$ such that $p \geq C \cdot \mu^2 \cdot s (\log n)^6$ [6]. Interestingly, it is shown that random ensembles are largely incoherent with any fixed basis [7]. This is a useful property which allows us to non-adaptively choose a random $\Phi$ for any type of signal. More details and proofs in this context can be found in [6] and the references therein.

$^{1}$ $C$ is a computable constant.

18th European Signal Processing Conference (EUSIPCO-2010), Aalborg, Denmark, August 23-27, 2010

EURASIP, 2010, ISSN 2076-1465

The second crucial job in CS is reconstruction, which can be described as solving the well-known underdetermined problem with a sparsity constraint,

$$\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad y = D\alpha \tag{1}$$

where $\|\cdot\|_k$ denotes the $\ell_k$-norm in general, and here the $\ell_0$-norm gives the size of the support of $\alpha$, which is actually the level of sparsity. Although this problem is sometimes described in other forms, the main issue is to find the sparsest possible $\alpha$ satisfying $y = D\alpha$. Unfortunately, problem (1) is nonconvex in general, and the solution needs a combinatorial search among all possible sparse $\alpha$, which is not feasible. However, there are some greedy methods that try to solve (1) iteratively. The family of Matching Pursuit (MP) [8] methods, such as Orthogonal Matching Pursuit (OMP) and stagewise OMP [9], and Iterative Hard Thresholding (IHT) [10] mainly attempt to solve (1) by selecting the vectors of $D$ which are most correlated with $y$. Thanks to their greedy nature, these algorithms are fast. In contrast, some optimization-based methods have been proposed, mainly achievable by linear programming and called Basis Pursuit (BP) [11], which attempt to recover $\alpha$ by converting (1) into a convex problem that relaxes the $\ell_0$-norm to an $\ell_1$-norm problem:

$$\min_{\alpha} \|\alpha\|_1 \quad \text{s.t.} \quad y = D\alpha \tag{2}$$

Although random matrices are suitable choices for $\Phi$, it has been recently shown that optimizing $\Phi$ with the aim of reducing the mutual coherence can improve the performance [12-15]. Elad [12] attempts to iteratively decrease the average mutual coherence using a shrinkage operation followed by a Singular Value Decomposition (SVD) step. Duarte-Carvajalino et al. [13] take advantage of an eigenvalue decomposition process followed by a KSVD-based algorithm [13] (see also [16]) to optimize $\Phi$ and train $\Psi$, respectively. Overall, the results of the current methods show enhancement in terms of both reconstruction error and compression rate. That motivates us to work further on optimizing the measurement matrix to obtain better results, and also to attempt to make the optimization process more efficient, especially for large-scale signals.
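As an illustration of the greedy recovery family mentioned above, the following is a minimal sketch of Orthogonal Matching Pursuit in NumPy. It is a textbook-style version written under simple assumptions (a known sparsity level `s`, no zero columns in `D`) and is not the implementation used in the experiments; the function name `omp` is hypothetical.

```python
import numpy as np

def omp(D, y, s):
    """Greedy s-sparse approximation of alpha in y = D @ alpha.

    Assumes s >= 1 and that D has no all-zero columns.
    """
    m = D.shape[1]
    col_norms = np.linalg.norm(D, axis=0)
    residual = np.asarray(y, dtype=float).copy()
    support = []
    coef = np.zeros(0)
    for _ in range(s):
        # Select the column most correlated with the current residual.
        corr = np.abs(D.T @ residual) / col_norms
        corr[np.array(support, dtype=int)] = 0.0  # do not reselect columns
        support.append(int(np.argmax(corr)))
        # Least-squares fit of y on all columns selected so far.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    alpha = np.zeros(m)
    alpha[support] = coef
    return alpha

# Usage on a small random problem (sizes are illustrative assumptions).
rng = np.random.default_rng(0)
D = rng.standard_normal((40, 120))
alpha_true = np.zeros(120)
alpha_true[[3, 50, 97]] = [1.5, -2.0, 0.7]
alpha_hat = omp(D, D @ alpha_true, s=3)
```

The least-squares refit at every step is what distinguishes OMP from plain Matching Pursuit: the residual stays orthogonal to all columns chosen so far.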

In this paper we propose a gradient-based optimization approach to decrease the mutual coherence between the measurement matrix and the representing matrix. This can be achieved by minimizing the absolute off-diagonal elements of the corresponding Gram matrix $G = \tilde{D}^T \tilde{D}$, where $\tilde{D}$ is the column-normalized version of $D$, and $(\cdot)^T$ denotes transposition. Our idea is to approximate $G$ with an identity matrix using a gradient descent method.

In the next section we formally express the sensing problem and the required mathematics related to optimization of the measurement matrix. Then, in section 3, the gradient-based optimization method is described in detail. In section 4, simulations are given to examine the proposed method from a practical perspective. Finally, the paper is concluded in section 5.

2. PROBLEM FORMULATION

Similar to the notation in the previous section, we consider the signal $x$ to be sparse with cardinality $s$ over the dictionary $\Psi \in \mathbb{R}^{n \times m}$. Considering the noiseless case, we are going to take $p < n$ linear measurements, which according to CS rules can be done by multiplying by a $\Phi$ with random i.i.d. Gaussian entries such that $y = \Phi\Psi\alpha$$^{2}$. However, $p$ cannot fall below the bounds mentioned in the previous section, which highly depend on the coherence between $\Phi$ and $\Psi$ and on the level of sparsity $s$. One suitable way to measure the coherence between $\Phi$ and $\Psi$ is to look at the columns of $D$ instead. As $D = \Phi\Psi$, the mutual coherence (which is desired to be minimized) can be defined as the maximum absolute value of the normalized inner product between all pairs of distinct columns in $D$, which can be described as follows [11],

$$\mu(D) = \max_{i \neq j,\; 1 \leq i,j \leq m} \frac{|d_i^T d_j|}{\|d_i\| \cdot \|d_j\|} \tag{3}$$

$^{2}$ Note that in cases where $x$ is sparse in the current domain, we ignore $\Psi$ and consider $x = \alpha$ and $\Phi = D$ without loss of generality.

Another suitable way to describe $\mu$, especially for the purpose of this paper, is to compute the Gram matrix $G = \tilde{D}^T \tilde{D}$, where $\tilde{D}$ is the column-normalized version of $D$. We then define $\mu_{mx}$ (which is equal to $\mu(D)$) as the maximum absolute off-diagonal element in $G$,

$$\mu_{mx} = \max_{i \neq j,\; 1 \leq i,j \leq m} |g_{ij}|. \tag{4}$$

Moreover, the average of the absolute off-diagonal elements in $G$ is another useful measure, defined as

$$\mu_{av} = \frac{\sum_{i \neq j} |g_{ij}|}{m(m-1)}. \tag{5}$$
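The two coherence measures in (4) and (5) can be computed directly from the Gram matrix. The NumPy sketch below is one straightforward way to do so; the function names are illustrative assumptions.

```python
import numpy as np

def gram_matrix(D):
    """Gram matrix of the column-normalized version of D."""
    D_tilde = D / np.linalg.norm(D, axis=0, keepdims=True)
    return D_tilde.T @ D_tilde

def coherence_measures(D):
    """Return (mu_mx, mu_av) as defined in (4) and (5)."""
    G = gram_matrix(D)
    m = G.shape[0]
    off_diag = np.abs(G[~np.eye(m, dtype=bool)])  # all entries with i != j
    mu_mx = off_diag.max()
    mu_av = off_diag.sum() / (m * (m - 1))
    return mu_mx, mu_av

# Usage on a random Gaussian matrix (size chosen for illustration only).
rng = np.random.default_rng(0)
mu_mx, mu_av = coherence_measures(rng.standard_normal((100, 200)))
```

Because the columns are normalized first, every diagonal entry of $G$ equals one and only the off-diagonal entries carry information about coherence.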

Mainly, there are two important reasons why we are interested in matrices with small coherence, and these motivate us to optimize $\Phi$ with the aim of decreasing the coherence. Suppose that the following inequality holds:

[...] $\eta > 0$ is the stepsize, which can be fixed or variable. Consequently, the full description of the update equation in each iteration is expressed as,

$$D_{(i+1)} = D_{(i)} - \beta D_{(i)}\left(D_{(i)}^T D_{(i)} - I\right) \tag{11}$$

where $i$ is the iteration index and $\beta$ is the new stepsize after merging the scalar 4 in (10) with $\eta$. The proposed algorithm starts with an initial random $D_{(0)}$ and then iteratively updates $D$. In addition, a normalization step for the columns of $D$, denoted by $d_j$ with $j = 1, 2, \ldots, n$, is required at each iteration:

$$d_j^{(i+1)} \leftarrow d_j^{(i)} / \|d_j^{(i)}\|_2 \tag{12}$$

After $K$ iterations, when the convergence condition(s) is (are) met, $\hat{D} = D_{(K)}$ is given as the solution for (8). Finally, if $x$ is sparse in its current domain, $\Phi = \hat{D}$ is the required measurement matrix and the algorithm is terminated. However, if $x$ is sparse over $\Psi_{n \times n}$, then an inverse or pseudoinverse is required to obtain the measurement matrix $\Phi = \hat{D}\Psi^{-1}$. Note that gradient descent methods generally do not guarantee a global minimum, but can normally provide a local minimum. The pseudocode of the proposed algorithm is given in Algorithm 1.

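In order to extend the above algorithm to the case of signals that are sparse over a dictionary $\Psi_{n \times m}$, with $n < m$, we only need to consider $\Psi$ in converting (7) to (8). Since $G = D^T D = \Psi^T \Phi^T \Phi \Psi$, we modify the minimization problem and obtain,

$$\hat{\Phi} = \arg\min_{\Phi} \left\|\Psi^T \Phi^T \Phi \Psi - I\right\|_F^2 \tag{13}$$

The gradient of the error is then computed,

$$\frac{\partial E}{\partial \phi_{ij}} = 4\,\Phi\Psi\left(\Psi^T \Phi^T \Phi \Psi - I\right)\Psi^T \tag{14}$$

and the update equation, which directly updates $\Phi$, is expressed as:

$$\Phi_{(i+1)} = \Phi_{(i)} - \beta\,\Phi_{(i)}\Psi\left(\Psi^T \Phi_{(i)}^T \Phi_{(i)} \Psi - I\right)\Psi^T. \tag{15}$$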
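The following NumPy sketch writes out the error in (13), the gradient in (14) and one update step of (15), and is accompanied by a finite-difference comparison that can be used as a sanity check of the gradient expression. The function names and the test sizes are assumptions made for illustration.

```python
import numpy as np

def coherence_error(Phi, Psi):
    """E = || Psi^T Phi^T Phi Psi - I ||_F^2, the objective in (13)."""
    D = Phi @ Psi
    R = D.T @ D - np.eye(Psi.shape[1])
    return np.sum(R ** 2)

def coherence_gradient(Phi, Psi):
    """Gradient of E with respect to Phi, following (14)."""
    D = Phi @ Psi
    R = D.T @ D - np.eye(Psi.shape[1])
    return 4.0 * D @ R @ Psi.T

def update_phi(Phi, Psi, beta):
    """One iteration of (15); the factor 4 is absorbed into beta."""
    return Phi - beta * coherence_gradient(Phi, Psi) / 4.0

def numerical_gradient(Phi, Psi, h=1e-5):
    """Central finite differences of E, used only for checking (14)."""
    grad = np.zeros_like(Phi)
    for idx in np.ndindex(*Phi.shape):
        step = np.zeros_like(Phi)
        step[idx] = h
        grad[idx] = (coherence_error(Phi + step, Psi)
                     - coherence_error(Phi - step, Psi)) / (2.0 * h)
    return grad

# Small overcomplete example (n < m); sizes are illustrative assumptions.
rng = np.random.default_rng(0)
Phi = 0.3 * rng.standard_normal((3, 4))
Psi = rng.standard_normal((4, 6))
analytic = coherence_gradient(Phi, Psi)
numeric = numerical_gradient(Phi, Psi)
```

Comparing `analytic` and `numeric` with `np.allclose` is a cheap way to catch a misplaced transpose before running the full optimization.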

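Algorithm 1: Gradient-descent optimization.

Input: Sparse representation basis $\Psi_{n \times n}$ (if necessary), stepsize $\beta$, maximum number of iterations $K$.

Output: Measurement matrix $\Phi_{p \times n}$.

begin
    Initialize $D$ to a random matrix.
    for $k = 1$ to $K$ do
        for $j = 1$ to $n$ do
            $d_j \leftarrow d_j / \|d_j\|_2$
        end
        $D \leftarrow D - \beta D (D^T D - I)$
    end
    if $\Psi_{n \times n}$ has been given as input then
        $\Phi \leftarrow D\Psi^{-1}$
    else
        $\Phi \leftarrow D$
    end
end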
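A direct transcription of Algorithm 1 into NumPy might look like the sketch below. The function name, the Gaussian initialization, the fixed iteration count in place of a convergence test, and the use of `np.linalg.pinv` for the inverse are assumptions of this sketch rather than details confirmed by the paper.

```python
import numpy as np

def optimize_measurement_matrix(p, n, beta, K, Psi=None, rng=None):
    """Gradient-descent optimization following Algorithm 1.

    Psi is the optional n x n sparsifying basis; when it is omitted the
    signal is assumed sparse in its own domain and D itself is returned.
    """
    rng = np.random.default_rng() if rng is None else rng
    D = rng.standard_normal((p, n))  # random initialization (assumed Gaussian)
    identity = np.eye(n)
    for _ in range(K):
        # Column normalization, step (12).
        D = D / np.linalg.norm(D, axis=0, keepdims=True)
        # Gradient update, step (11).
        D = D - beta * D @ (D.T @ D - identity)
    if Psi is None:
        return D
    # Inverse or pseudoinverse of the basis, as described in the text.
    return D @ np.linalg.pinv(Psi)

# Usage with the parameter values quoted in the first experiment.
Phi_opt = optimize_measurement_matrix(100, 200, beta=0.02, K=60,
                                      rng=np.random.default_rng(0))
```

The whole-matrix update keeps each iteration to a few matrix products, which is the main practical appeal of the gradient formulation.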

4. SIMULATION RESULTS

In this section we illustrate some results of our experiments to show the ability of the proposed method to optimize the measurement matrix, and consequently its effect on the reconstruction process. The results are encouraging and show that optimizing the measurement matrix can increase the performance in the CS framework.

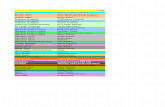

In the first experiment we built a random dictionary $\Psi$ ($200 \times 400$) and a random $\Phi$ ($100 \times 200$), both with i.i.d. Gaussian elements. We then ran the proposed algorithm with $\beta = 0.02$ and 60 iterations to optimize $\Phi$. We also applied Elad's algorithm [12] to the same $\Phi$ and $\Psi$. The parameters we used for Elad's method were: the shrinkage parameter $\gamma = 0.5$, a fixed threshold $t = 0.25$ and 60 iterations. Figure 1(a) shows the distribution of the absolute off-diagonal elements of $G$ for this experiment. As seen from the figure, after applying the proposed method the distribution becomes denser, with more coefficients close to zero. This change indicates a smaller coherence between $\Phi$ and $\Psi$, which is also confirmed by having $\mu_{av} = 0.0075$ and $\mu_{mx} = 0.3887$ in this experiment, compared to $\mu_{av} = 0.0148$ and $\mu_{mx} = 0.4995$ for the unoptimized $\Phi$. Elad's method improves the coherence almost similarly: $\mu_{av} = 0.0187$ and $\mu_{mx} = 0.4255$. However, the large $\mu_{av}$ in Elad's method, also reported in [13], is due to an undesired peak around 0.15 in Figure 1(a), which may weaken the RIP conditions [13]. This drawback has been well mitigated in the proposed method, as can be noticed from Figure 1(a). The same results are also seen for a random i.i.d. Gaussian $\Phi$ ($100 \times 200$) and a Discrete Cosine Transform (DCT) $\Psi$ ($200 \times 200$), shown in Figure 1(b).

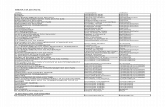

Figure 1: Distribution of off-diagonal elements of $G$ (horizontal axis: Amplitude; vertical axis: Distribution; curves: No optimization, Proposed method, Elad's method). (a) $\Phi$: Random, and $\Psi$: Random. (b) $\Phi$: Random, and $\Psi$: DCT.

In the next experiment we generated a set of 10000 sparse signals of length $n = 120$, with nonzero samples at random locations and with random amplitudes. We chose DCT bases for $\Psi$, with the size of $120 \times 120$, i.e. $n = m = 120$. In this experiment we used $\beta = 0.01$ and 150 iterations. For Elad's method we used $\gamma = 0.95$, a relative threshold $t = 20\%$ and 1000 iterations:

First, we fixed the sparsity level to $s = 8$ and varied $p$ from 20 to 80 in taking the measurements $y = \Phi_{p \times n}\Psi_{n \times n}\alpha$. We then applied three reconstruction methods, IHT, OMP, and BP, to all 10000 signals. This experiment was repeated for each $p \in [20, 80]$, and then the relative error rate between the reconstructed signals and the original signals was computed. The result of this experiment is shown in Figure 2. It is seen that optimization of $\Phi$ has a considerable influence in reducing the reconstruction error. In addition, it is observed that the proposed method works better than the method in [12]. It is also seen that the error rate is almost the same for the proposed method and Elad's method when BP is used for reconstruction (Figure 2(c)).

Second, in order to evaluate the performance of the proposed method against changes in cardinality, we fixed $p = 30$ and varied the sparsity level from 1 to 20, and then reconstructed $x$ by applying IHT and OMP to all 10000 sparse signals. The computed relative error rate for this experiment is shown in Figure 3. Again we see an improvement compared to the unoptimized case.
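For readers who want to reproduce the flavour of this experiment, the sketch below gives a basic Iterative Hard Thresholding loop together with a relative error measure. Several choices here are assumptions and not taken from the paper: the step size $1/\|A\|_2^2$, the fixed iteration count, the zero initialization, and the definition of the error as $\|x - \hat{x}\|_2 / \|x\|_2$.

```python
import numpy as np

def hard_threshold(v, s):
    """Keep the s largest-magnitude entries of v (s >= 1), zero the rest."""
    out = np.zeros_like(v)
    keep = np.argsort(np.abs(v))[-s:]
    out[keep] = v[keep]
    return out

def iht(A, y, s, n_iter=200):
    """Basic IHT: gradient step on ||y - A x||^2, then hard thresholding."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # assumed conservative step size
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        x = hard_threshold(x + step * (A.T @ (y - A @ x)), s)
    return x

def relative_error(x, x_hat):
    """Relative l2 error between the original and the reconstruction."""
    return np.linalg.norm(x - x_hat) / np.linalg.norm(x)

# One trial with a signal that is sparse in its own domain (illustrative).
rng = np.random.default_rng(0)
n, p, s = 120, 60, 8
x = np.zeros(n)
x[rng.choice(n, size=s, replace=False)] = rng.standard_normal(s)
A = rng.standard_normal((p, n)) / np.sqrt(p)
y = A @ x
x_hat = iht(A, y, s)
err = relative_error(x, x_hat)
```

Averaging `err` over many random signals for each value of `p` or `s` would give curves of the kind summarized in Figures 2 and 3; swapping `A` for an optimized matrix allows the comparison described in the text.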

Figure 2: Relative error rate vs. the number of measurements $p$, using (a) IHT, (b) OMP, and (c) BP for reconstruction (curves: No optimization, Elad's method, Proposed method).

Figure 3: Relative error rate vs. the sparsity level $s$, using (a) IHT and (b) OMP for reconstruction (curves: No optimization, Elad's method, Proposed method).

In the last experiment, we studied the effects of the proposed optimization on sampling and reconstruction of real images. Due to the huge number of pixels, it is generally impossible to process the whole image directly. Hence, we applied the multi-scale strategy proposed in [19] and [20], considering the wavelet transform as the representing dictionary to sparsify the input image. We used the Symmlet-8 wavelet with the coarsest scale at 4 and the finest scale at 5, and followed the same procedure as in [19]. As an illustrative example, the Mondrian image of size $256 \times 256$ was compressed using unoptimized and optimized measurement matrices. Following the same procedure as in [19] to keep the approximation coefficients, the whole image was then compressed to 870 samples. Then, the image was reconstructed using the HALS CS method [19, 21]. As can be seen from Figure 4, the reconstruction error, computed as $\varepsilon = \|x - \hat{x}\|_2 / \|x\|_2$, where $\hat{x}$ is the reconstructed image, is smaller when we compress the image using the proposed optimized measurement matrix. We also recorded the running time of this experiment, where a desktop computer with a 3.00 GHz Core 2 Duo CPU and 2 GB of RAM was used. It is seen from Figure 4 that adding the optimization step increases the running time, as expected. However, the lower computation time of the proposed method compared to Elad's is noticeable.

Figure 4: Reconstruction of the Mondrian image using the HALS CS method. (a) Original image. Reconstruction with (b) no optimization ($\varepsilon = 0.168$, $t = 2.13$ sec.), (c) proposed optimization ($\varepsilon = 0.157$, $t = 19.37$ sec.), and (d) Elad's optimization ($\varepsilon = 0.163$, $t = 444.3$ sec.) of the measurement matrix.

5. DISCUSSION AND CONCLUSION

In this paper the problem of compressive sensing is investigated. A new gradient-based approach is proposed in which the aim is to optimize the measurement matrix in order to decrease the mutual coherence. An extension to the proposed algorithm is also presented which is suitable for signals that are sparse with respect to overcomplete dictionaries. The results of our simulations on both real and synthetic signals are promising and confirm that optimization of the measurement matrix increases the performance, measured in terms of reconstruction error and the number of measurements taken. In practice, however, the proposed method is still not applicable to very large-scale problems. The lower complexity of the proposed method, compared to the previous methods, indicates that such methods may become appropriate for very large-scale problems. This has not been reported in the literature yet and needs to be investigated.

REFERENCES

[1] D. Donoho. Compressed sensing. Information Theory, IEEE Transactions on, 52(4):1289-1306, April 2006.

[2] E. J. Candes, J. Romberg, and T. Tao. Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Transactions on Information Theory, 52(2):489-509, February 2006.

[3] M. Lustig, D. Donoho, J.M. Santos, and J.M. Pauly. Compressed sensing MRI. Signal Processing Magazine, IEEE, 25(2):72-82, March 2008.

[4] S.F. Cotter and B.D. Rao. Sparse channel estimation via matching pursuit with application to equalization. Communications, IEEE Transactions on, 50(3):374-377, Mar. 2002.

[5] J. Bobin, J.-L. Starck, and R. Ottensamer. Compressed sensing in astronomy. Selected Topics in Signal Processing, IEEE Journal of, 2(5):718-726, Oct. 2008.

[6] E. J. Candes, J. K. Romberg, and T. Tao. Stable signal recovery from incomplete and inaccurate measurements. Communications on Pure and Applied Mathematics, 59(8):1207-1223, 2006.

[7] E. J. Candes and M. B. Wakin. An introduction to compressive sampling. IEEE Signal Processing Magazine, 25(2):21-30, March 2008.

[8] J. A. Tropp and A. C. Gilbert. Signal recovery from random measurements via orthogonal matching pursuit. Information Theory, IEEE Transactions on, 53(12):4655-4666, Dec. 2007.

[9] D. Donoho, Y. Tsaig, I. Drori, and J.-L. Starck. Sparse solution of underdetermined linear equations by stagewise orthogonal matching pursuit. Technical report, 2006.

[10] T. Blumensath and M. Davies. Iterative thresholding for sparse approximations. Journal of Fourier Analysis and Applications, 2004.

[11] D. Donoho and P. B. Stark. Uncertainty principles and signal recovery. SIAM Journal on Applied Mathematics, 49(3):906-931, 1989.

[12] M. Elad. Optimized projections for compressed sensing. Signal Processing, IEEE Transactions on, 55(12):5695-5702, Dec. 2007.

[13] J.M. Duarte-Carvajalino and G. Sapiro. Learning to sense sparse signals: Simultaneous sensing matrix and sparsifying dictionary optimization. Image Processing, IEEE Transactions on, 18(7):1395-1408, July 2009.

[14] P. Vinuelas-Peris and A. Artes-Rodriguez. Sensing matrix optimization in distributed compressed sensing. In Statistical Signal Processing, 2009. SSP '09. IEEE/SP 15th Workshop on, pages 638-641, Aug. 2009.

[15] V. Abolghasemi, S. Sanei, S. Ferdowsi, F. Ghaderi, and A. Belcher. Segmented compressive sensing. In Statistical Signal Processing, 2009. SSP '09. IEEE/SP 15th Workshop on, pages 630-633, Aug. 2009.

[16] M. Aharon, M. Elad, and A. Bruckstein. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. Signal Processing, IEEE Transactions on, 54(11):4311-4322, 2006.

[17] J.A. Tropp. Greed is good: algorithmic results for sparse approximation. Information Theory, IEEE Transactions on, 50(10):2231-2242, Oct. 2004.

[18] D. L. Donoho and M. Elad. Optimally sparse representation in general (nonorthogonal) dictionaries via $\ell_1$ minimization. PNAS, 100(5):2197-2202, March 2003.

[19] A. Cichocki, R. Zdunek, A. H. Phan, and S. Amari. Nonnegative Matrix and Tensor Factorizations: Applications to Exploratory Multi-way Data Analysis and Blind Source Separation. John Wiley, 2009.

[20] Y. Tsaig and D. L. Donoho. Extensions of compressed sensing. Signal Processing, 86(3):549-571, 2006. Sparse Approximations in Signal and Image Processing.

[21] S. Sanei, A.H. Phan, Jen-Lung Lo, V. Abolghasemi, and A. Cichocki. A compressive sensing approach for progressive transmission of images. In Digital Signal Processing, 2009 16th International Conference on, pages 1-5, Jul. 5-7 2009.