
International Journal of Emerging Technology and Advanced Engineering

Website: www.ijetae.com (ISSN 2250-2459, Volume 2, Issue 6, June 2012)


Colour Based Image Segmentation Using L*A*B* Colour Space Based On Genetic Algorithm

Mr. Vivek Singh Rathore¹, Mr. Messala Sudhir Kumar², Mr. Ashwini Verma³

¹M.Tech Scholar, 4th Sem., LNCT, Indore (M.P.)

²Associate Professor, CEC Bilaspur (C.G.)

³Associate Professor, LNCT, Indore (M.P.)

Abstract: Colour-based image segmentation aims to overcome the problems encountered when segmenting an object against a complex scene background by using the colour information of the image. After pre-processing, the image is transformed from the RGB colour space to the L*a*b* colour space. The three channels of the L*a*b* colour space are then separated, and a single channel is selected depending on the colour under consideration. Next, genetic-algorithm-based colour segmentation is performed on the single-channel image, after which further processing is applied to obtain the particular object of interest. The expected results presented in this paper indicate that the proposed method is effective in segmenting images with complex backgrounds, and these results are used to propose a new colour image segmentation method. The proposed method searches for the principal colours, defined as the intersections of the homogeneous blocks of the given image. Rather than using noisy individual pixels, which may contain many outliers, the proposed method uses the linear representation of the homogeneous blocks of the image. The paper includes a comprehensive mathematical discussion of the proposed method and expected results to show the efficiency of the proposed algorithm.

Keywords: Image segmentation, RGB colour space, L*a*b* colour space, separate channels, genetic algorithm.

I. INTRODUCTION

Image segmentation may be defined as a technique that partitions a given image into a finite number of non-overlapping regions with respect to some characteristic, such as gray-value distribution or texture distribution. Dividing an image into homogeneous regions remains a challenge, especially when the image is made up of complex textures. Traditional methods for image segmentation have approached the problem either from localisation in class space, using region information, or from localisation in position, using edge or boundary information [1]-[5]. The regions resulting from image segmentation should follow these rules:

They should be uniform and homogeneous with respect to some characteristic.

Their interiors should be simple and without many small holes.

Adjacent regions should have significantly different values with respect to the characteristic on which they are uniform.

The boundaries of each segment should be simple, not ragged, and spatially accurate.

Various colour spaces have been defined to describe the colours, or gamut, that particular electronic equipment can interpret, analyze or display. Because demand for colour images has grown relative to gray-scale images, the choice of colour space representation can be used to enhance the performance of processes such as segmentation [6]-[7].

II. IMAGE SEGMENTATION

In this paper, image segmentation is defined as an optimal segmentation obtained in a purely bottom-up fashion that provides the information necessary to initialise and constrain high-level segmentation methods. Although the details of primary segmentation methods depend on the application domain, we require that they do not depend on a priori knowledge about the objects present in a particular scene or on image-specific parameter adjustments. These requirements are realistic because we do not seek a perfect segmentation result but rather the best possible support for more intelligent methods to be applied afterwards. Unfortunately, there is as yet no theory that defines the quality of a segmentation. We therefore have to rely on heuristic constraints that the primary segmentation should meet:

The segmentation should provide regions that are homogeneous with respect to one or more properties, i.e. the variation of measurements within the regions should be considerably less than the variation at borders.

The positions of the borders should coincide with local maxima, ridges and saddle points of the local gradient of the measurements.

Areas that perceptually form only one region should not be split into several parts. In particular, this applies to smooth shading and texture.


Small details, if clearly distinguished by their shape or contrast, should not be merged with their neighbouring regions [10].

III. IMAGE PRE-PROCESSING

Image pre-processing is a form of signal processing for which the input is an image, such as a photograph, and the output may be either an image or a set of characteristics or parameters related to the image. Most image pre-processing techniques involve treating the image as a two-dimensional signal and applying standard signal-processing techniques to it. Segmentation refers to the process of partitioning a digital image into multiple segments (sets of pixels, also known as superpixels). The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images [9].

The two types of methods used for image processing are analog image processing and digital image processing.

Analog (visual) techniques of image processing can be used for hard copies such as printouts and photographs. Association is another important tool in image processing through visual techniques, so analysts apply a combination of personal knowledge and collateral data to image processing.

Digital processing techniques help in the manipulation of digital images by using computers, for example when handling raw data from imaging sensors on satellite platforms. The three general phases that all types of data have to undergo when using digital techniques are pre-processing, enhancement and display, and information extraction.

A. Purpose of Image Processing

The purpose of image processing is divided into five groups:

Visualization: observe objects that are not visible.

Image sharpening and restoration: create a better image.

Image retrieval: seek the image of interest.

Measurement of pattern: measure various objects in an image.

Image recognition: distinguish the objects in an image.

Pre-processing consists of those operations that prepare data for subsequent analysis by attempting to correct for systematic errors; the digital images are subjected to several such corrections. During pre-processing, the original images are also adjusted so that their dimensions are more suitable for processing, which also helps to make the processing faster.

IV. LAB COLOUR SPACE

A Lab colour space is a colour-opponent space with dimension L for lightness and a and b for the colour-opponent dimensions, based on nonlinearly compressed CIE XYZ colour space coordinates. The intention of Lab colour spaces is to create a space that can be computed via simple formulas from the XYZ space but is more perceptually uniform than XYZ. Perceptually uniform means that a change of the same amount in a colour value should produce a change of about the same visual importance. When colours are stored in limited-precision values, this can improve the reproduction of tones. Lab spaces are relative to the white point of the XYZ data they were converted from, so Lab values do not define absolute colours unless the white point is also specified [11]. The goal is to identify different colours in the image by analyzing the L*a*b* colour space. The image was acquired using the Image Acquisition Toolbox.

Step 1: Acquire Image

Read the image, which is a colour image rather than a gray-scale image.

Step 2: Calculate Sample Colours in L*a*b* Colour Space for Each Region

The L*a*b* colour space is derived from the CIE XYZ tristimulus values. The L*a*b* space consists of a luminosity layer 'L*', a chromaticity layer 'a*' indicating where the colour falls along the red-green axis, and a chromaticity layer 'b*' indicating where the colour falls along the blue-yellow axis. The approach is to choose a small sample region for each colour and to calculate each sample region's average colour in 'a*b*' space.
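As a minimal sketch of Step 2, assuming the image has already been converted to L*a*b* and is stored as a list of rows of (L*, a*, b*) tuples, the average 'a*b*' colour of a sample region can be computed as follows. Rectangular sample regions and the name `region_mean_ab` are hypothetical simplifications for illustration; the text does not specify the region shape.

```python
def region_mean_ab(lab_image, region):
    """Average (a*, b*) colour of a rectangular sample region.

    lab_image: list of rows, each a list of (L*, a*, b*) tuples.
    region: (row0, row1, col0, col1) with half-open bounds (hypothetical format).
    """
    r0, r1, c0, c1 = region
    samples = [lab_image[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    if not samples:
        raise ValueError("sample region is empty")
    # Only the chromaticity layers are averaged; L* is ignored.
    a_mean = sum(s[1] for s in samples) / len(samples)
    b_mean = sum(s[2] for s in samples) / len(samples)
    return a_mean, b_mean


lab = [[(50, 10, -20), (50, 30, 0)],
       [(60, 20, -10), (40, 0, 10)]]
print(region_mean_ab(lab, (0, 2, 0, 2)))  # (15.0, -5.0)
```

Because only a* and b* are averaged, the resulting colour marker is insensitive to lightness variations inside the sample region.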

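Step 3: Classify Each Pixel Using the Nearest Neighbour Rule

Each colour marker now has an 'a*' and a 'b*' value. Each pixel is classified by calculating the Euclidean distance in 'a*b*' space between that pixel and each colour marker. The smallest distance tells you which colour marker the pixel most closely matches.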
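The nearest neighbour rule can be sketched as below, assuming the same list-of-rows layout of (L*, a*, b*) tuples and a list of (a*, b*) colour markers such as those produced in Step 2. The function name and the marker values are hypothetical; ties between equally distant markers are resolved to the lowest marker index, which is an assumption.

```python
import math


def classify_pixels(lab_image, markers):
    """Label each pixel with the index of the closest colour marker,
    using Euclidean distance in the a*b* plane (L* is ignored)."""
    labels = []
    for row in lab_image:
        label_row = []
        for _, a, b in row:
            dists = [math.hypot(a - ma, b - mb) for ma, mb in markers]
            label_row.append(dists.index(min(dists)))  # ties go to the lowest index
        labels.append(label_row)
    return labels


markers = [(40, 40), (-40, 20), (0, 0)]  # hypothetical (a*, b*) marker values
image = [[(50, 38, 35), (50, -30, 25)],
         [(50, 2, -1), (70, 45, 50)]]
print(classify_pixels(image, markers))  # [[0, 1], [2, 0]]
```

The returned nested list plays the role of the label matrix used in the following step.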


Step 4: Display Results of Nearest Neighbour Classification

The label matrix contains a colour label for each pixel in the fabric image. Use the label matrix to separate objects in the original fabric image by colour.
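Separating objects by colour with the label matrix can be sketched as building one masked copy of the RGB image per label, in which every pixel carrying a different label is set to black. The function name and the use of black as the fill value are assumptions made for illustration.

```python
def separate_by_label(rgb_image, labels, n_labels):
    """Return one image per colour label, keeping only the pixels with that
    label and setting all other pixels to black."""
    black = (0, 0, 0)
    return [
        [[pix if lab == k else black for pix, lab in zip(img_row, lab_row)]
         for img_row, lab_row in zip(rgb_image, labels)]
        for k in range(n_labels)
    ]


img = [[(255, 0, 0), (0, 255, 0)],
       [(250, 5, 5), (0, 0, 255)]]
labels = [[0, 1],
          [0, 2]]
red_only = separate_by_label(img, labels, 3)[0]
print(red_only)  # [[(255, 0, 0), (0, 0, 0)], [(250, 5, 5), (0, 0, 0)]]
```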

Step 5: Display 'a*' and 'b*' Values of the Labelled Colours

How well the nearest neighbour classification separated the different colour populations can be seen by plotting the 'a*' and 'b*' values of the pixels that were classified into separate colours. For display purposes, label each point with its colour label.

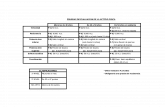

Figure 1. Scheme of the segmentation method (final stage: segmented image)

V. DIFFERENT CHANNELS IN LAB COLOUR SPACE

The three coordinates of CIELAB represent the lightness of the colour (L* = 0 yields black and L* = 100 indicates diffuse white; specular white may be higher), its position between red/magenta and green (a*: negative values indicate green, while positive values indicate magenta) and its position between yellow and blue (b*: negative values indicate blue and positive values indicate yellow). The L* coordinate ranges from 0 to 100.

The possible range of the a* and b* coordinates is independent of the colour space being converted from, since the conversion uses X and Y, which are computed from RGB. Because the red/green and yellow/blue opponent channels are computed as differences of lightness transformations of cone responses, CIELAB is a chromatic value colour space.

The nonlinear relations for L*, a* and b* are intended to mimic the nonlinear response of the eye. Furthermore, uniform changes of components in the L*a*b* colour space aim to correspond to uniform changes in perceived colour, so the relative perceptual difference between any two colours in L*a*b* can be approximated by treating each colour as a point in a three-dimensional space and taking the Euclidean distance between the two points.
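The Euclidean distance between two L*a*b* points is the CIE76 colour difference, usually written ΔE*ab. A minimal sketch, with a hypothetical function name:

```python
import math


def delta_e76(lab1, lab2):
    """CIE76 colour difference: Euclidean distance between two (L*, a*, b*) points."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))


print(delta_e76((50, 0, 0), (50, 3, 4)))  # 5.0
```

Later formulas such as CIE94 and CIEDE2000 refine this distance because L*a*b* is only approximately perceptually uniform.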

The L*a*b* colour space includes all perceivable colours, which means that its gamut exceeds those of the RGB and CMYK colour models. One of the most important attributes of the L*a*b* model is device independence: colours are defined independently of how they were created or the device on which they are displayed. The L*a*b* colour space is used, for example, in Adobe Photoshop when graphics for print have to be converted from RGB to CMYK.

Figure 2. The L*a*b* model

[Figure 1 stages: image pre-processing; RGB colour space to L*a*b* colour space; channel separation representing the different colours; colour-based segmentation based on genetic algorithm]


Figure 3. The CIE 1976 (L*, a*, b*) colour space

The next step in the proposed method is to convert the pre-processed images from the RGB colour space to the L*a*b* colour space. The L*a*b* colour space is selected for this work because it is a perceptually uniform space.

Figure 4. Pre-processed image

The distance between two points in the L*a*b* colour space is consistent with the difference perceived by the human visual system. Since the L*a*b* model is a three-dimensional model, it can only be represented properly in a three-dimensional space [8]-[9]. Digital images are converted from the RGB space to the L*a*b* colour space using the following formulas [8]:

$$L^* = 116\, f(Y/Y_n) - 16$$

$$a^* = 500\,\left[f(X/X_n) - f(Y/Y_n)\right]$$

$$b^* = 200\,\left[f(Y/Y_n) - f(Z/Z_n)\right]$$

Here X, Y, Z, Xn, Yn and Zn are coordinates in the CIE XYZ colour space. Digital images are converted from the RGB space to the CIE XYZ colour space using the following formula:

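$$\begin{pmatrix} X \\ Y \\ Z \end{pmatrix} = \begin{pmatrix} 0.608 & 0.174 & 0.201 \\ 0.299 & 0.587 & 0.114 \\ 0.000 & 0.066 & 1.117 \end{pmatrix} \begin{pmatrix} R \\ G \\ B \end{pmatrix}$$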


Xn, Yn and Zn are the corresponding values for the reference white.

The function f is defined as:

$$f(x) = \begin{cases} x^{1/3}, & x > 0.008856 \\ 7.787\,x + 16/116, & x \le 0.008856 \end{cases}$$
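The conversion formulas above can be combined into the following sketch. It assumes that 8-bit RGB values are simply scaled to [0, 1] and fed to the matrix directly (no gamma linearisation), and it takes the reference white (Xn, Yn, Zn) to be the XYZ value of RGB = (1, 1, 1); both are assumptions, since the text does not specify them. The function names are hypothetical.

```python
# RGB -> XYZ matrix exactly as given in the text (rows give X, Y, Z).
M = ((0.608, 0.174, 0.201),
     (0.299, 0.587, 0.114),
     (0.000, 0.066, 1.117))


def f(t):
    """Piecewise function from the text: cube root above 0.008856, linear below."""
    return t ** (1.0 / 3.0) if t > 0.008856 else 7.787 * t + 16.0 / 116.0


def rgb_to_xyz(rgb):
    """Multiply an (R, G, B) triple in [0, 1] by the matrix M."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in M)


# Assumption: reference white = XYZ of RGB (1, 1, 1), approximately (0.983, 1.0, 1.183).
XN, YN, ZN = rgb_to_xyz((1.0, 1.0, 1.0))


def rgb_to_lab(rgb255):
    """Convert an 8-bit (R, G, B) triple to (L*, a*, b*)."""
    x, y, z = rgb_to_xyz(tuple(c / 255.0 for c in rgb255))
    fx, fy, fz = f(x / XN), f(y / YN), f(z / ZN)
    return 116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)


print(rgb_to_lab((255, 255, 255)))  # white: L* = 100, a* = b* = 0
print(rgb_to_lab((255, 0, 0)))      # red: positive a*
```

With this choice of white point, pure white maps to L* = 100 and pure black to L* = 0, with a* = b* = 0 in both cases, matching the ranges described in Section V.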


$$Z_j(r,g,b),\ j = 1, 2, \ldots, K, \quad \text{if } \lVert X_i(r,g,b) - Z_j(r,g,b) \rVert < \lVert X_i(r,g,b) - Z_p(r,g,b) \rVert, \quad p = 1, 2, \ldots, K,\ p \neq j.$$
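The assignment rule above, under which a pixel Xi(r,g,b) goes with the centre Zj(r,g,b) that is closer than every other centre Zp(r,g,b), can be sketched as follows. The function name is hypothetical, and ties (which the strict inequality leaves undefined) are resolved by taking the lowest index, an assumption. `math.dist` requires Python 3.8 or later.

```python
import math


def assign_to_centres(pixels, centres):
    """Return, for each pixel X_i(r, g, b), the index j of the nearest centre Z_j(r, g, b)."""
    return [min(range(len(centres)), key=lambda j: math.dist(x, centres[j]))
            for x in pixels]


pixels = [(250, 10, 10), (10, 240, 20), (200, 30, 0)]
centres = [(255, 0, 0), (0, 255, 0)]
print(assign_to_centres(pixels, centres))  # [0, 1, 0]
```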

B. Proposed Method

We propose a new segmentation algorithm whose result is determined by the values produced by the clustering. Consider a colour image f of size m × n. The proposed algorithm is:

1. Repeat steps 2 to 8 for K = 2 to K = Kmax.

2. Initialize the P chromosomes of the population.

3. Compute the fitness function fi for i = 1, ..., P, using the fitness equation.

4. Preserve the best (fittest) chromosome seen up to that generation.

5. Apply selection to the population.

6. Apply crossover and mutation to the selected population.

7. Repeat steps 3 to 6 until the termination condition is reached.

8. Compute the clustering validity index for the fittest chromosome for the particular value of K.

9. Cluster the dataset using the most appropriate number of clusters, determined by comparing the validity indices of the proposed clusters for K = 2 to K = Kmax.
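The nine steps above can be sketched as a small genetic clustering routine. This is a hedged illustration, not the authors' implementation: it clusters the values of a single channel (as the abstract describes), and the fitness function (inverse of the total distance to the centres), roulette-wheel selection, one-point crossover, Gaussian mutation, a fixed generation count as the termination condition and the Davies-Bouldin validity index are all assumptions, since the text gives neither the fitness equation nor the validity index. All function names and parameter defaults are hypothetical; `pop_size` should be even.

```python
import random


def assign(pixels, centres):
    """Index of the nearest centre for each single-channel pixel value."""
    return [min(range(len(centres)), key=lambda j: abs(p - centres[j])) for p in pixels]


def fitness(pixels, centres):
    """Assumed fitness: inverse of (1 + total distance of pixels to their centres)."""
    labels = assign(pixels, centres)
    return 1.0 / (1.0 + sum(abs(p - centres[l]) for p, l in zip(pixels, labels)))


def davies_bouldin(pixels, centres):
    """Davies-Bouldin validity index (lower is better), used here as an assumption."""
    labels, k = assign(pixels, centres), len(centres)
    spread = []
    for j in range(k):
        members = [p for p, l in zip(pixels, labels) if l == j]
        spread.append(sum(abs(p - centres[j]) for p in members) / len(members) if members else 0.0)
    total = 0.0
    for i in range(k):
        ratios = [float("inf") if centres[i] == centres[j]
                  else (spread[i] + spread[j]) / abs(centres[i] - centres[j])
                  for j in range(k) if j != i]
        total += max(ratios)
    return total / k


def ga_cluster(pixels, k, pop_size=20, generations=40, p_mut=0.1, seed=0):
    """Steps 2-7: evolve chromosomes, each encoding K cluster centres."""
    rng = random.Random(seed)
    lo, hi = min(pixels), max(pixels)
    pop = [rng.sample(pixels, k) for _ in range(pop_size)]  # step 2
    best = max(pop, key=lambda c: fitness(pixels, c))
    for _ in range(generations):  # step 7: fixed generation count
        scores = [fitness(pixels, c) for c in pop]  # step 3
        gen_best = pop[scores.index(max(scores))]
        if max(scores) > fitness(pixels, best):  # step 4: keep the fittest so far
            best = list(gen_best)
        parents = rng.choices(pop, weights=scores, k=pop_size)  # step 5: roulette wheel
        children = []
        for a, b in zip(parents[::2], parents[1::2]):  # step 6: one-point crossover
            cut = rng.randrange(1, k) if k > 1 else 0
            children += [a[:cut] + b[cut:], b[:cut] + a[cut:]]
        for c in children:  # step 6: Gaussian mutation, clipped to the data range
            for j in range(k):
                if rng.random() < p_mut:
                    c[j] = min(hi, max(lo, c[j] + rng.gauss(0, 0.05 * (hi - lo) + 1e-9)))
        children[0] = list(best)  # elitism: carry the best chromosome forward
        pop = children
    return sorted(best)


def segment(pixels, k_max=4):
    """Steps 1, 8 and 9: try K = 2..k_max and keep the K with the best validity index."""
    best = None
    for k in range(2, k_max + 1):
        centres = ga_cluster(pixels, k)
        db = davies_bouldin(pixels, centres)
        if best is None or db < best[0]:
            best = (db, k, centres)
    _, k, centres = best
    return k, centres, assign(pixels, centres)


a_channel = [10] * 20 + [50] * 20 + [90] * 20  # hypothetical single-channel values
k, centres, labels = segment(a_channel)
print(k, centres)
```

Because the Davies-Bouldin index is lower for compact, well-separated clusters, step 9 keeps the K with the smallest value; any other clustering validity index could be substituted.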

VII. EXPECTED RESULT

The result of image segmentation is a set of segments that collectively cover the entire image, or a set of contours extracted from the image. Each pixel in a region is similar with respect to some characteristic or computed property, such as colour, intensity or texture. Adjacent regions are significantly different with respect to the same characteristic. When applied to a stack of images, as is typical in medical imaging, the contours obtained after image segmentation can be used to find how many objects a cluster contains and to count the regions in an image.

VIII. CONCLUSION

A new colour image segmentation method based on a genetic algorithm is proposed. The mathematics of the proposed method is discussed comprehensively, and expected results are presented. Compared with an available clustering method, the proposed method is expected to be more stable and faster.

It is also observed that the proposed method decreases the probability of entrapment in a local minimum. The usability of the proposed segmentation method is also greater than that of the available methods. Furthermore, while the proposed method gives more perceptually satisfactory segmentation results, it demands fewer processing resources. The method builds on the concept of segmentation based on the colour features of an image.

IX. FUTURE ENHANCEMENT

As future work, a new feature selection technique for face recognition can be proposed, in which the most suitable features are selected to enhance classification performance. Although the GA is considered one of the best optimization methods, defining an appropriate, global fitness function for feature selection has a strong impact on its performance. The problem associated with a simple GA fitness function is quick convergence to uninformative feature sets. The proposed technique, named Swap Training, uses a new fitness function.

REFERENCES

[1] Kearney, Colm and Patton, J. Andrew, "Survey on the image segmentation," Financial Review, 41: 29-48 (2000).

[2] H. D. Cheng, X. H. Jiang, Y. Sun, and J. Wang, "Color image segmentation: advances and prospects," Pattern Recognition, 34: 2259-2281 (2001).

[3] He, Xiaoling; Hodgson, W. Jeffrey, "Research Image Processing Technology hot issue," IEEE Transactions on Intelligent Transportation Systems, 3(4): Dec., 244-251 (2002).

[4] Xia Yong and Feng Dagan, "A General Image Segmentation Model and its Application," ICIG 2009, Fifth International Conference on Image and Graphics, pp. 227-231, 2009.

[5] Y. Boykov and V. Kolmogorov, "An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 9, pp. 1124-1137, 2004.

[6] Kwok, M.N.; Ha, P.Q. and Fang, G., "Effect of color space on color image segmentation," In: Image and Signal Processing, CISP '09, 2nd International Congress on, 17-19 Oct., Tianjin (2009).

[7] Erik Reinhard, Michael Ashikhmin, Bruce Gooch, et al., "Color Transfer between Images," IEEE Computer Graphics and Applications, USA, 2001.

[8] Chun Chen, Computer Image Processing Technology and Algorithms, Beijing: Tsinghua University Press, 2003.

[9] R. Fisher, K. Dawson-Howe, A. Fitzgibbon, C. Robertson, E. Trucco (2005). Dictionary of Computer Vision and Image Processing. John Wiley. ISBN 0-470-01526-8.

[10] Shankar Rao, Hossein Mobahi, Allen Yang, Shankar Sastry and Yi Ma, "Natural Image Segmentation with Adaptive Texture and Boundary Encoding," Proceedings of the Asian Conference on Computer Vision (ACCV) 2009, H. Zha, R.-i. Taniguchi, and S. Maybank (Eds.), Part I, LNCS 5994, pp. 135-146, Springer.


[11] Margulis, Dan (2006). Photoshop Lab Color: The Canyon Conundrum and Other Adventures in the Most Powerful Colorspace. Berkeley, Calif.; London: Peachpit; Pearson Education. ISBN 0321356780.

[12] COLORLAB: MATLAB toolbox for color science computation and accurate color reproduction. It includes CIE standard tristimulus colorimetry and transformations to a number of non-linear color appearance models (CIE Lab, CIE CAM, etc.).

[13] M. Srinivas, Lalit M. Patnaik, "Genetic Algorithms: A Survey."

[14] D. E. Goldberg, Genetic Algorithms in Search, Optimization and Machine Learning, Addison-Wesley, 1989.

[15] Hwei-Jen Lin, Fu-Wen Yang and Yang-Ta Kao, "An Efficient GA-based Clustering Technique," Tamkang Journal of Science and Engineering, Vol. 8, No. 2, 2005.