
IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS FOR VIDEO TECHNOLOGY, VOL. 20, NO. 9, SEPTEMBER 2010

Parallel and Pipeline Architectures for High-Throughput Computation of Multilevel 3-D DWT

Basant K. Mohanty, Member, IEEE, and Pramod K. Meher, Senior Member, IEEE

Abstract: In this paper, we present a throughput-scalable parallel and pipeline architecture for high-throughput computation of multilevel 3-D discrete wavelet transform (3-D DWT). The computation of 3-D DWT for each level of decomposition is split into three distinct stages, and all three stages are implemented in parallel by a processing unit consisting of an array of processing modules. The processing unit for the first-level decomposition of a video stream of frame-size (M × N) consists of Q/2 processing modules, where Q is the number of input samples available to the structure in each clock cycle. The processing unit for a higher level of decomposition requires 1/8 times the number of processing modules required by the processing unit for its preceding level. For J-level 3-D DWT of a video stream, each of the proposed structures involves J processing units in a cascaded pipeline. The proposed structures have a small output latency, and can perform multilevel 3-D DWT computation with 100% hardware utilization efficiency. The throughput rate of the proposed structures is Q/7 times higher than that of the best of the corresponding existing structures. Interestingly, the proposed structures involve a frame-buffer of O(MN), while the frame-buffer size of the existing structures is O(MNR). Besides, the on-chip storage and the frame-buffer size of the proposed structure are independent of the input-block size, which favors the derivation of highly concurrent parallel architectures for high-throughput implementation. The overall area-delay products of the proposed structures are significantly lower than those of the existing structures: although they involve slightly more multiplier-delay product and more adder-delay product, they involve significantly less frame-buffer and storage-word-delay product. The throughput rate of the proposed structure can easily be scaled, without increasing the on-chip storage and frame-memory, by using a larger number of processing modules, and it provides greater advantage over the existing designs for higher frame-rates and larger input block-sizes. The full-parallel implementation of the proposed scalable structure provides the best of its performance. When the very high throughput generated by such a parallel structure is not required, the structure could be operated by a slower clock, where speed could be traded for power by scaling down the operating voltage, and/or the processing modules could be implemented by slower but hardware-efficient arithmetic circuits.

Manuscript received January 17, 2009; revised September 2, 2009 and December 24, 2009. Date of publication July 26, 2010; date of current version September 9, 2010. This paper was recommended by Associate Editor S.-Y. Chien.

B. K. Mohanty is with the Dept. of Electronics and Communication Engineering, Jaypee Institute of Engineering and Technology, Guna 473226, Madhya Pradesh, India (e-mail: [email protected]).

P. K. Meher is with the Department of Embedded Systems, Institute for Infocomm Research, Singapore 138632 (e-mail: [email protected]).

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TCSVT.2010.2056950

Index Terms: 3-D DWT, discrete wavelet transform, parallel and pipeline architecture, very large scale integration (VLSI).

I. Introduction

The discrete wavelet transform (DWT) is widely used because its multiple time-frequency resolution gives it a remarkable advantage, in various applications, over unitary transforms such as the discrete Fourier transform (DFT), discrete cosine transform (DCT), and discrete sine transform (DST). DWT of different dimensions has emerged as a powerful tool for speech and image coding in recent years. The 3-D DWT is found to provide superior performance in video compression by eliminating the temporal redundancies within the video sequences for motion compensation [1]. Apart from that, 3-D DWT has been widely used for the compression of 3-D and 4-D medical images, volumetric image compression, and video watermarking [2]-[6]. Multidimensional DWTs are particularly computation-intensive and, therefore, need to be implemented in very large scale integrated (VLSI) systems for real-time applications.

The 2-D and 3-D DWTs can be realized by either a separable or a non-separable approach. The separable approach is the more popular of the two, because it involves less computation for the same throughput performance. The main building blocks of separable multidimensional DWT cores are lower-dimensional transform modules and one or more transposition units. Although the speed performance and hardware complexity of a multidimensional DWT architecture depend substantially on the implementation of its lower-dimensional modules, the area and time overhead of the transposition unit significantly affects the overall performance of the computing structure [7]-[9]. In order to avoid the transposition unit of 2-D DWT computation, some pipeline architectures have been proposed for the implementation of non-separable 2-D DWT [10]-[12]. Attempts have also been made to reduce the complexity of the transposition unit. Wu et al. [13] have proposed a line-based folded architecture to reduce the size of the transposition-buffer and the overall complexity of the separable 2-D DWT device. In this paper, we present another possible approach for separable implementation of multidimensional DWT, based on parallel processing with appropriate scheduling of computation, to achieve a significant reduction in storage requirement and area-delay product.

1051-8215/$26.00 © 2010 IEEE


As of now, only a few designs for VLSI implementation of 3-D DWT are found in the literature [16]-[20]. Weeks et al. have proposed two separate designs (3DW-I and 3DW-II) for computing 3-D DWT [16]-[18]. The 3DW-I design involves on-chip memory of O(MNR), which is impractical to implement in a chip, where (M × N) is the image size and R is the frame-rate of the given video. The 3DW-II structure performs block-by-block processing of 3-D data, and involves a very small on-chip memory of O(K). It, however, involves complex control circuitry and a frame-buffer of size MNR to feed the blocks of input data. The average computation time (ACT) of 3DW-II is also significantly higher than that of 3DW-I. Das et al. [19] have proposed a separable architecture for 3-D DWT, which involves less memory space and lower output latency compared with the earlier designs. Dai et al. [20] have applied the polyphase decomposition technique, and mapped the computation of 3-D DWT into a systolic architecture. They have used the conventional separable method efficiently in their structure to reduce the on-chip storage space. The systolic design of [20] requires four times the resources of [19], calculates the 3-D DWT nearly four times faster, and involves significantly less storage space. One major problem with the designs proposed in [19] and [20] is that they compute the multilevel 3-D DWT by a level-by-level approach (similar to the folded scheme proposed by Wu et al. [13]) using an external frame-buffer of size (MNR)/8.

The external frame-buffer is a major hardware component in the existing structures, since the frame-size for practical video transmission applications may vary from 176 × 144 (screen size of a mobile phone) to 1920 × 1080 [screen size of high-definition television (HDTV)], and the frame-rate can vary from 15 frames per second (f/s) in mobile phones to 60 f/s for HDTV applications. The on-chip storage and the frame-buffer contribute more than 90% of the total area of the existing structures. A significant amount of memory bandwidth and computation time is also wasted in accessing the external frame-buffer. It is also observed that the on-chip storage and frame-buffer size remain independent of the throughput rate. This motivates us to apply a concurrent design method, which has a two-fold advantage: the frame-buffer size can be reduced, and the on-chip memory of the 3-D structure can be used more efficiently to calculate multiple outputs per cycle, improving the overall performance of the chip. Since silicon devices are progressively scaling according to Moore's Law [21]-[23], more components are accommodated in integrated circuits, and at the same time silicon cost has been declining fast over the years. Hence, it may be considered an appropriate strategy to design parallel architectures where area can be traded either for time, or for power if faster computation is not required by the application. If high throughput is not required for a given application, then the clock frequency could be reduced and a lower operating voltage could be used to reduce the power consumption [24]. Keeping this in mind, we have proposed a parallel architecture for multilevel 3-D DWT. The key ideas used in our proposed approach are:

1) to process each decomposition level of 3-D DWT in a separate computing block of a cascaded pipeline structure, so that the levels of the multilevel DWT are computed concurrently, in order to reduce the size of the frame-buffer used for buffering the subband components and to maximize hardware utilization efficiency;

2) to fold the input rows for each level appropriately to meet the desired throughput rate and to achieve 100% hardware utilization efficiency (HUE) of the processing unit.

Using the above approach, we have reduced the frame-buffer size, and have obtained Q/7 times higher throughput compared with the best of the existing structures, using on-chip memory of the same order. It is shown that the proposed structure can calculate the DWT coefficients of an input video signal of size (M × N × R) in MNR/Q cycles. The proposed parallel implementation of 3-D DWT has an additional advantage: the size of the frame-buffer can be reduced, and larger input block sizes do not demand more on-chip memory or frame-buffer, which contribute most of the hardware in the existing designs.

The remainder of this paper is organized as follows. The mathematical formulation of the 3-D DWT computation is presented in Section II. The proposed architecture for 1-level 3-D DWT is presented in Section III. A pipeline architecture for multilevel 3-D DWT is presented in Section IV. The hardware complexity and performance of the proposed structure are discussed in Section V. Our conclusion is presented in Section VI.

II. Mathematical Formulation

The 3-D DWT coefficients of any decomposition level can be obtained from the scaling coefficients of its previous level according to the pyramid algorithm, given by

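$$lll_j(n_1, n_2, n_3) = \sum_{i_1=0}^{K_h-1} \sum_{i_2=0}^{K_h-1} \sum_{i_3=0}^{K_h-1} h_1(i_1)\, h_2(i_2)\, h_3(i_3)\; lll_{j-1}(2n_1 - i_1,\, 2n_2 - i_2,\, 2n_3 - i_3) \tag{1}$$

$$llh_j(n_1, n_2, n_3) = \sum_{i_1=0}^{K_h-1} \sum_{i_2=0}^{K_h-1} \sum_{i_3=0}^{K_g-1} h_1(i_1)\, h_2(i_2)\, g_3(i_3)\; lll_{j-1}(2n_1 - i_1,\, 2n_2 - i_2,\, 2n_3 - i_3) \tag{2}$$

$$\vdots$$

$$hhh_j(n_1, n_2, n_3) = \sum_{i_1=0}^{K_g-1} \sum_{i_2=0}^{K_g-1} \sum_{i_3=0}^{K_g-1} g_1(i_1)\, g_2(i_2)\, g_3(i_3)\; lll_{j-1}(2n_1 - i_1,\, 2n_2 - i_2,\, 2n_3 - i_3) \tag{3}$$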

for $n_1 = 0, 1, \ldots, (R/2) - 1$, $n_2 = 0, 1, \ldots, (M/2) - 1$, and $n_3 = 0, 1, \ldots, (N/2) - 1$, where $K_h$ and $K_g$ are, respectively, the lengths of the low-pass and high-pass filters, M and N are, respectively, the height and width of the image, and R is the frame-rate of the video stream. Assuming $K = K_h = K_g$, (1)-(3) can be represented in a generalized form

$$z(n_1, n_2, n_3) = \sum_{i_1=0}^{K-1} \sum_{i_2=0}^{K-1} \sum_{i_3=0}^{K-1} w_1(i_1)\, w_2(i_2)\, w_3(i_3)\; x(2n_1 - i_1,\, 2n_2 - i_2,\, 2n_3 - i_3) \tag{4}$$

where $z(\cdot)$ corresponds to $lll_j(\cdot), llh_j(\cdot), \ldots, hhh_j(\cdot)$, and $x(\cdot)$ corresponds to $lll_{j-1}(\cdot)$ in (1)-(3), while $w_1(i)$, $w_2(i)$, and $w_3(i)$, respectively, correspond to the filter coefficients ($h_1(i)$ or $g_1(i)$), ($h_2(i)$ or $g_2(i)$), and ($h_3(i)$ or $g_3(i)$).

Fig. 1. Proposed structure for computation of 1-level convolution-based 3-D DWT of frame-size (M × N). $z^1_l(n_1, n_2, q)_p$ and $z^1_h(n_1, n_2, q)_p$ represent the four subband components {$z^1_{lll}(n_1, n_2, q)_p$, $z^1_{hll}(n_1, n_2, q)_p$, $z^1_{lhl}(n_1, n_2, q)_p$, $z^1_{hhl}(n_1, n_2, q)_p$} and {$z^1_{llh}(n_1, n_2, q)_p$, $z^1_{hlh}(n_1, n_2, q)_p$, $z^1_{lhh}(n_1, n_2, q)_p$, $z^1_{hhh}(n_1, n_2, q)_p$}, respectively. $z(n_1, n_2, q)_p$ is defined as the qth data sample of the pth block of the $n_2$th row of the $n_1$th frame of the subband matrix, where $0 \le q \le (Q/2) - 1$, $0 \le p \le P - 1$, $0 \le n_2 \le (M/2) - 1$, $0 \le n_1 \le (R/2) - 1$, and N = PQ.

The computation of (4) can be decomposed into three distinct stages as

$$z(n_1, n_2, n_3) = \sum_{i=0}^{K-1} w_3(i)\, v(2n_1 - i,\, n_2,\, n_3) \tag{5}$$

$$v(n_1, n_2, n_3) = \sum_{i=0}^{K-1} w_2(i)\, u(n_1,\, 2n_2 - i,\, n_3) \tag{6}$$

$$u(n_1, n_2, n_3) = \sum_{i=0}^{K-1} w_1(i)\, x(n_1,\, n_2,\, 2n_3 - i). \tag{7}$$

$[u(n_1, n_2, n_3)]$ represents the low-pass and high-pass output matrices $[u_l(n_1, n_2, n_3)]$ and $[u_h(n_1, n_2, n_3)]$, respectively, corresponding to the 3-D input $[x(n_1, n_2, n_3)]$, while $[v(n_1, n_2, n_3)]$ of (6) represents the four subband output matrices $[v_{ll}(n_1, n_2, n_3)]$, $[v_{lh}(n_1, n_2, n_3)]$, $[v_{hl}(n_1, n_2, n_3)]$, and $[v_{hh}(n_1, n_2, n_3)]$, where ($v_{ll}(n_1, n_2, n_3)$, $v_{lh}(n_1, n_2, n_3)$) constitute the low-pass and high-pass outputs resulting from the intermediate output $u_l(n_1, n_2, n_3)$, and ($v_{hl}(n_1, n_2, n_3)$, $v_{hh}(n_1, n_2, n_3)$) constitute the low-pass and high-pass outputs resulting from the intermediate output $u_h(n_1, n_2, n_3)$. Similarly, $z(n_1, n_2, n_3)$ of (4) represents the eight oriented selective subband outputs $z_{lll}(n_1, n_2, n_3)$, $z_{llh}(n_1, n_2, n_3)$, $z_{lhl}(n_1, n_2, n_3)$, $z_{lhh}(n_1, n_2, n_3)$, $z_{hll}(n_1, n_2, n_3)$, $z_{hlh}(n_1, n_2, n_3)$, $z_{hhl}(n_1, n_2, n_3)$, and $z_{hhh}(n_1, n_2, n_3)$, corresponding to the low-pass and high-pass outputs of the four intermediate outputs {$v_{ll}(n_1, n_2, n_3)$, $v_{lh}(n_1, n_2, n_3)$, $v_{hl}(n_1, n_2, n_3)$, $v_{hh}(n_1, n_2, n_3)$}.

Using the decomposition scheme of (5)-(7), the computation of 3-D DWT can be performed in three distinct stages as follows.

1) In stage-1, low-pass and high-pass filtering is performed row-wise on each input frame (intra-frame) to produce the intermediate matrices $[U_l]$ and $[U_h]$ according to (7).

2) In stage-2, low-pass and high-pass filtering is performed column-wise on each of the intermediate coefficient matrices $[U_l]$ and $[U_h]$ to generate the four subband matrices $[V_{ll}]$, $[V_{lh}]$, $[V_{hl}]$, and $[V_{hh}]$ according to (6).

3) Finally, in stage-3, low-pass and high-pass filtering is performed on the inter-frame subbands to obtain the eight oriented selective subband matrices $[Z_{lll}]$, $[Z_{llh}]$, $[Z_{lhl}]$, $[Z_{lhh}]$, $[Z_{hll}]$, $[Z_{hlh}]$, $[Z_{hhl}]$, and $[Z_{hhh}]$ of size [M/2 × N/2 × R/2] according to (5).
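The three stages can be checked numerically against the direct triple sum of (4). The following is a minimal software sketch, not the hardware structure of the paper. It assumes cyclic indexing at the borders, uses the same low-pass filter `h` and high-pass filter `g` along all three dimensions, and keys each subband by the filters applied along ($n_1$, $n_2$, $n_3$). The function names and the key order are conventions of this sketch only.

```python
def filter_decimate_1d(seq, w):
    """y[n] = sum_i w[i] * seq[(2n - i) mod L], for n = 0 .. L/2 - 1."""
    L = len(seq)
    return [sum(w[i] * seq[(2 * n - i) % L] for i in range(len(w)))
            for n in range(L // 2)]

def stage1(x, w):
    """Row-wise filtering inside each frame (along n3), as in (7)."""
    return [[filter_decimate_1d(row, w) for row in frame] for frame in x]

def stage2(u, w):
    """Column-wise filtering inside each frame (along n2), as in (6)."""
    out = []
    for frame in u:
        cols = [filter_decimate_1d(list(col), w) for col in zip(*frame)]
        out.append([list(row) for row in zip(*cols)])
    return out

def stage3(v, w):
    """Inter-frame filtering (along n1), as in (5)."""
    R, M, N = len(v), len(v[0]), len(v[0][0])
    out = [[[0] * N for _ in range(M)] for _ in range(R // 2)]
    for m in range(M):
        for n in range(N):
            z = filter_decimate_1d([v[r][m][n] for r in range(R)], w)
            for r, val in enumerate(z):
                out[r][m][n] = val
    return out

def dwt3d_one_level(x, h, g):
    """One-level separable 3-D DWT of x[n1][n2][n3].

    Returns a dict of eight subbands. The key 'lhl' means low-pass along
    n1, high-pass along n2, low-pass along n3 (a convention of this sketch).
    """
    f = {"l": h, "h": g}
    z = {}
    for a in "lh":                      # filter along n3
        u = stage1(x, f[a])
        for b in "lh":                  # filter along n2
            v = stage2(u, f[b])
            for c in "lh":              # filter along n1
                z[c + b + a] = stage3(v, f[c])
    return z
```

Each stage halves one dimension, so an input of R frames of size M × N yields eight subbands of size R/2 × M/2 × N/2, and every coefficient equals the corresponding triple sum in (4).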

III. Proposed Architecture for 1-Level 3-D DWT

Based on the mathematical formulation of the previous section, we derive here a throughput-scalable structure for the implementation of 1-level 3-D DWT, as shown in Fig. 1. It consists of Q/2 regularly arranged processing modules, where Q samples are available to the structure per clock cycle, and N = PQ, such that P clock cycles are needed to feed each row of a frame of the 3-D input. The input data of each frame of size (M × N) is cyclically extended by (K − 2) rows and (K − 2) columns; the rows of the 3-D input data-matrix are then folded by a factor P, where P is assumed to be a power of 2, and fed block-by-block serially in P successive clocks to the input data distribution unit (IDU), such that one complete frame is fed in (M + K − 2)P successive clocks. Note that for P = 1, one complete row of data is fed to the IDU in each cycle, which corresponds to the fully parallel structure. Each input data-block $X_{n_1,n_2,p}$ comprises (Q + K − 2) consecutive samples of a given row, and the successive data-blocks, for $0 \le p \le P - 1$, overlap by (K − 2) samples, where $0 \le n_1 \le R - 1$ and $0 \le n_2 \le M - 1$. In every cycle, the IDU derives Q/2 input-vectors $I(n_1, n_2, q)_p$ such that each input-vector, for $0 \le q \le Q/2 - 1$, consists of K consecutive values of a particular input block, where $I(n_1, n_2, q)_p = x(n_1, n_2, pQ + 2q + K - 1),\ x(n_1, n_2, pQ + 2q + K - 2),\ \ldots,\ x(n_1, n_2, pQ + 2q + 1),\ x(n_1, n_2, pQ + 2q)$. Note that, for K = 4, two adjacent input-vectors of a particular input block overlap by two samples. The IDU feeds the Q/2 input-vectors in parallel to the Q/2 processing modules such that the (Q/2 − q)th module receives the input-vector $I(n_1, n_2, q)_p$ during the pth cycle of a period of P successive cycles.

Fig. 2. Structure of a processing module. Output-1 and output-2, respectively, represent the subband components {$Z^1_{lll}$, $Z^1_{hll}$, $Z^1_{lhl}$, $Z^1_{hhl}$} and {$Z^1_{llh}$, $Z^1_{hlh}$, $Z^1_{lhh}$, $Z^1_{hhh}$}.

Fig. 3. Internal structure of subcell-1. Output-1 and output-2, respectively, represent the low-pass and the high-pass components $u_l$ and $u_h$. $I(n_1, n_2, q)_p$: $x(n_1, n_2, pQ + 2q + 3)$, $x(n_1, n_2, pQ + 2q + 2)$, $x(n_1, n_2, pQ + 2q + 1)$, $x(n_1, n_2, pQ + 2q)$.
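The way the IDU folds a row into P overlapping blocks and slices each block into Q/2 input-vectors can be illustrated in software. This is a hedged sketch of the indexing only. The function name `input_vectors` is hypothetical, and the cyclic extension is modeled by appending the first K − 2 samples of the row to its end.

```python
def input_vectors(row, Q, K):
    """Return vectors[p][q] = [x(pQ+2q+K-1), ..., x(pQ+2q)] for one input row.

    row : list of N samples of one row of a frame, with N = P * Q
    Q   : number of input samples accepted per clock cycle (even)
    K   : filter length
    """
    N = len(row)
    assert N % Q == 0 and Q % 2 == 0
    P = N // Q
    # Cyclic extension of the row by (K - 2) columns (modeling assumption).
    ext = list(row) + list(row[:K - 2])
    vectors = []
    for p in range(P):
        # Block X_{n1,n2,p}: (Q + K - 2) consecutive samples, so that
        # successive blocks overlap by (K - 2) samples.
        block = ext[p * Q: p * Q + Q + K - 2]
        # Input-vector q: K consecutive samples starting at offset 2q,
        # listed from the highest index down to the lowest.
        vectors.append([block[2 * q: 2 * q + K][::-1] for q in range(Q // 2)])
    return vectors
```

For N = 8, Q = 4, and K = 4, the row is fed in P = 2 cycles, each cycle yields two input-vectors, and adjacent vectors within a block share two samples, in line with the text.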

The internal structure of the processing module is shown in Fig. 2. It consists of three subcells working in separate pipeline stages. Subcell-1, subcell-2, and subcell-3, respectively, perform the computations pertaining to stage-1, stage-2, and stage-3. The arithmetic operations pertaining to the low-pass and high-pass filtering of stage-1 [as given by (7)] are mapped into subcell-1. The internal structure of subcell-1 for K = 4 is shown in Fig. 3. It consists of K multiplication units (MUs) and (2K − 2) adders. Each of the MUs stores a pair of filter coefficients of the low-pass and high-pass filters, such that the (k + 1)th MU stores the coefficients $h_1(k)$ and $g_1(k)$. Four input samples (for K = 4) are fed in parallel to the MUs through the input-vector $I(n_1, n_2, q)_p$ during each clock cycle. The four pairs of multiplications required for computing a pair of filter outputs are implemented concurrently by the four MUs, and the outputs of the MUs are added concurrently by an adder-tree. The duration of a clock cycle of the structure is equal to $(T_M + 2T_A)$, where $T_M$ and $T_A$ are, respectively, the multiplication-time and addition-time. During each cycle, subcell-1 produces a low-pass component $u_l(n_1, n_2, q)_p$ and a high-pass component $u_h(n_1, n_2, q)_p$ of an intermediate matrix. Note that P successive pairs of such outputs of subcell-1 correspond to a particular input row and form a row of the intermediate matrix, which can be used directly as the input of stage-2 to perform the wavelet filtering along the column direction.
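Functionally, subcell-1 reduces to two K-term inner products that share the same K input samples. The sketch below is a behavioral model only, with the hypothetical name `subcell1`. It assumes that the first element of the input-vector (the sample with the highest index) is paired with coefficient index 0, which is what (7) implies but is not spelled out for Fig. 3.

```python
def subcell1(input_vector, h, g):
    """Behavioral model of subcell-1: return (u_l, u_h) for one input-vector.

    input_vector[k] is multiplied by h[k] and g[k], mimicking MU-(k+1),
    which stores the coefficient pair (h1(k), g1(k)).
    """
    assert len(input_vector) == len(h) == len(g)
    # K multiplication units, each producing a low-pass and a high-pass product.
    products = [(h[k] * s, g[k] * s) for k, s in enumerate(input_vector)]
    # Two adder trees of (K - 1) adders each, i.e. (2K - 2) adders in total.
    u_l = sum(lo for lo, _ in products)
    u_h = sum(hi for _, hi in products)
    return u_l, u_h
```

With K = 4, each adder tree has two levels, which is consistent with the stated clock period of one multiplication time plus two addition times.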

The computation of stage-2 [given by (6)] is mapped into subcell-2, where the filtering of the intermediate outputs $u_l(n_1, n_2, q)_p$ and $u_h(n_1, n_2, q)_p$, for $0 \le p \le P - 1$, is time-multiplexed to take advantage of the down-sampling of DWT components. The internal structure of subcell-2 for K = 4 is depicted in Fig. 4. It consists of four MUs, three adder cells (ACs), three serial-in serial-out shift-registers (SRs) of size P words, and one line-changer (LC). During every cycle, a pair of outputs from subcell-1 is fed to the MUs in subcell-2 through the LC. The structure and the function of the LC are shown in Fig. 5(a). In each set of P successive clock cycles, the output lines of a pair of outputs of subcell-1 are interchanged by the LC, such that if the low-pass intermediate output is on a particular output line during a particular set of P successive cycles, then the high-pass intermediate output appears on that output line in the next set of P cycles. The sample values on line-1 are fed to the odd-numbered MUs (MU-1 and MU-3), while the sample values on line-2 are fed to the even-numbered MUs (MU-2 and MU-4). This simple technique of sample loading introduces embedded decimation in the filter computation of $u_l(n_1, n_2, q)_p$ and $u_h(n_1, n_2, q)_p$ along the column direction. Each of the MUs stores a pair of coefficients ($h_2(k)$ and $g_2(k)$) of the low-pass and high-pass filters of stage-2. The four MUs of subcell-2 perform the multiplications concurrently. The addition operations are implemented by the ACs operated in a systolic pipeline. The function of each AC is depicted in Fig. 5(b). After a latency of (K + 3P − 1) cycles (where 3P cycles are required to fill the SRs), subcell-2 produces two subband components in each cycle, and in P successive cycles it computes the subband components of a particular row. During two consecutive sets of P cycles, it computes four subband components of a given column. All the Q/2 modules of the linear array structure thus produce a block of Q/2 pairs of 2-D DWT subbands, and one complete row of each of the four subbands of a given frame in every set of 2P cycles. The first-level decomposition of the $n_1$th frame of size (M × N), therefore, can be obtained in MP cycles after an initial latency of (K + 3P) cycles. Each subcell-2 gives a pair of outputs in parallel in each cycle, such that in MP successive cycles it gives four columns of the subbands $[V_{ll}]$, $[V_{lh}]$, $[V_{hl}]$, and $[V_{hh}]$ of a particular frame, where the subband components of ($[V_{ll}]$ and $[V_{hl}]$) or ($[V_{lh}]$ and $[V_{hh}]$) are obtained in time-multiplexed form.

Fig. 4. Structure of subcell-2. Output-1 and output-2 of subcell-2, respectively, represent the subband components {$v_{ll}$, $v_{hl}$} and {$v_{lh}$, $v_{hh}$}.

Fig. 5. (a) Function of LC. (b) Function of AC.

Fig. 6. Internal structure of subcell-3 and intermediate buffer. Input-1 and input-2, respectively, represent $[v_{ll}(n_1, n_2, q)_p]$ or $[v_{hl}(n_1, n_2, q)_p]$ and $[v_{lh}(n_1, n_2, q)_p]$ or $[v_{hh}(n_1, n_2, q)_p]$. Output-1 and output-2, respectively, represent the 3-D DWT coefficients $[z_{lll}(n_1, n_2, q)_p]$, $[z_{hll}(n_1, n_2, q)_p]$, $[z_{lhl}(n_1, n_2, q)_p]$, $[z_{hhl}(n_1, n_2, q)_p]$ and $[z_{llh}(n_1, n_2, q)_p]$, $[z_{hlh}(n_1, n_2, q)_p]$, $[z_{lhh}(n_1, n_2, q)_p]$, $[z_{hhh}(n_1, n_2, q)_p]$ in time-multiplexed form.

The output of subcell-2 is queued in the intermediate buffer (shown in Fig. 6) to be processed in stage-3 of the computation. The intermediate buffer consists of seven SRs. Each of the SRs holds MP words of the columns of either ($[V_{ll}]$ and $[V_{hl}]$) or ($[V_{lh}]$ and $[V_{hh}]$), such that the even-numbered SRs hold the elements of $[V_{ll}]$ and $[V_{hl}]$ alternately, while the odd-numbered SRs hold the elements of $[V_{lh}]$ and $[V_{hh}]$ alternately. Note that successive SRs hold the subband components corresponding to successive frames. The subband components of successive frames are buffered in the SRs in order to perform the inter-frame wavelet filtering of stage-3.

In stage-3 of the computation, low-pass and high-pass filtering are performed across the frames on the four subband components of stage-2 to obtain the eight oriented selective subband components $[Z_{lll}]$, $[Z_{llh}]$, $[Z_{hll}]$, $[Z_{hlh}]$, $[Z_{lhl}]$, $[Z_{lhh}]$, $[Z_{hhl}]$, and $[Z_{hhh}]$ of 3-D DWT. The computation of each subcell-3 is thus performed by a pair of low-pass and high-pass filters, where the filtering of $[V_{ll}]$, $[V_{hl}]$, $[V_{lh}]$, and $[V_{hh}]$ is time-multiplexed. The structure of subcell-3 (shown in Fig. 6) is similar to that of subcell-1, except that it contains four MUXes to select the required input samples from the intermediate buffer. The intermediate buffer provides the required subband components $[V_{ll}]$, $[V_{hl}]$, $[V_{lh}]$, and $[V_{hh}]$ of a given frame to subcell-3. The extra SR-1 provides one complete column-delay to the input $[V_{lh}]$/$[V_{hh}]$, so that for the first MP cycles the inter-frame wavelet filtering is performed on the components of $[V_{ll}]$/$[V_{hl}]$, and in the second MP cycles such filtering is performed on the components of $[V_{lh}]$/$[V_{hh}]$. This process is repeated such that the components of $[V_{ll}]$/$[V_{hl}]$ and $[V_{lh}]$/$[V_{hh}]$ are processed in alternate sets of MP cycles.

The MUXes of each subcell-3 select the inputs of ($[V_{ll}]$ or $[V_{hl}]$) and ($[V_{lh}]$ or $[V_{hh}]$) of consecutive frames from the intermediate buffer in alternate sets of MP cycles, and compute the 3-D DWT coefficients such that, during every odd-numbered set of MP successive cycles, one complete column of DWT components of the four subbands $[Z_{lll}]$, $[Z_{llh}]$, $[Z_{hll}]$, and $[Z_{hlh}]$ is obtained from subcell-3, where the subband components of ($[Z_{lll}]$, $[Z_{llh}]$) and ($[Z_{hll}]$, $[Z_{hlh}]$) are time-multiplexed. Similarly, during every even-numbered set of MP successive cycles, one column of the other four subband coefficients $[Z_{lhl}]$, $[Z_{lhh}]$, $[Z_{hhl}]$, and $[Z_{hhh}]$ is obtained from subcell-3. The linear array of Q/2 processing modules thus produces Q/2 coefficients of each of the eight subbands in every computational cycle, such that the 3-D DWT computation of a given frame is completed in MP cycles. The complete 3-D DWT of size (M × N × R) can be obtained in MPR cycles, where R is the frame-rate of the video stream and M and N are, respectively, the height and width of each frame.

The entire linear array of Q/2 processing modules can be implemented in a processing unit (PU-1) for first-level DWT computation. Similar processing units can also be designed for computation of higher levels of decomposition, and all those processing units can be integrated into a pipeline structure for concurrent implementation of multilevel 3-D DWT.

IV. Proposed Pipeline Architecture for Multilevel 3-D DWT

In multilevel 3-D DWT, the $[Z_{lll}]$ subband of the current decomposition level is further processed to calculate the 3-D DWT of the next higher level of decomposition. Since the 3-D DWT structure for each level of decomposition performs decimated filtering, the number of arithmetic operations needed to calculate the 3-D DWT of each higher level decreases consistently by a factor of eight. The amount of hardware resources for calculating the DWT coefficients of every higher level of decomposition should, therefore, be reduced by a factor of 8 in order to achieve 100% HUE. Based on this point of view, we have derived a fully pipelined structure for the implementation of J-level 3-D DWT, as shown in Fig. 7, where $J = \log_8(Q/2) + 1$. It comprises J PUs, where PU-j, for $1 \le j \le J$, performs the computation of the jth decomposition level. The PUs are connected in a linear structure, and work in separate pipeline stages. The structure of each PU is similar to the 1-level 3-D DWT structure shown in Fig. 1.
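The relation between the input block size Q, the number of levels J, and the number of modules per PU can be tabulated with a few lines of code. This is an illustrative sketch under the stated relation $J = \log_8(Q/2) + 1$, with each PU holding one-eighth of the modules of its predecessor. The function name `pipeline_plan` and the restriction that Q/2 be a power of 8 are assumptions of the sketch.

```python
def pipeline_plan(Q):
    """Return (J, modules) for input block size Q.

    J          : number of decomposition levels, J = log8(Q/2) + 1
    modules[j] : number of processing modules in PU-(j+1), i.e. Q / 2**(3j+1)
    Q/2 must be a power of 8 so that the last PU holds exactly one module.
    """
    if Q < 2 or Q % 2:
        raise ValueError("Q must be a positive even integer")
    half, J = Q // 2, 1
    while half > 1:
        if half % 8:
            raise ValueError("Q/2 must be a power of 8")
        half //= 8
        J += 1
    modules = [Q // 2 ** (3 * j - 2) for j in range(1, J + 1)]
    return J, modules
```

For example, Q = 128 gives J = 3 with 64, 8, and 1 modules in PU-1, PU-2, and PU-3, respectively.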

Fig. 7. Proposed pipeline structure for multilevel 3-D DWT, where $J = \log_8(Q/2) + 1$ and $Q/2^{3J-3} \ge 2$.

PU-1 consists of Q/2 processing modules to calculate the 3-D DWT of the first-level decomposition. It gives one row (of size N/2) of four out of the eight subbands in 2P successive cycles, such that all the M/2 rows of those subbands are obtained in MP successive cycles. Similarly, all the M/2 rows of the other four subbands of 3-D DWT are obtained during the next MP cycles. Note that the low-low subband components of the first level ($Z^1_{lll}$) of a particular frame are obtained from PU-1 during alternate sets of MP cycles. Each output block (consisting of Q/2 samples) corresponding to $Z^1_{lll}$ is folded by a factor of 4 and fed to PU-2 in four successive cycles, such that one row of $Z^1_{lll}$ is fed in 4P cycles, and a complete frame in 2MP cycles. PU-2, therefore, processes half of the components of the matrix $Z^1_{lll}$ (of size [M/2 × N/2] for a particular frame) in MP cycles, while PU-1 generates the entire DWT components of $Z^1_{lll}$ of a particular frame in alternate sets of MP cycles. PU-2 uses an input-buffer ($IB_2$) of size MN/8 words to store half of the output values of $Z^1_{lll}$ corresponding to a frame. PU-2 comprises Q/16 processing modules arranged in a linear array structure similar to that of PU-1. It receives a block of Q/8 intermediate outputs through $IB_2$ in every cycle, and calculates a block of Q/16 components of a pair of subband matrices in every cycle. The subcell-1 and intermediate-buffer of each processing module of PU-2 are the same as those of PU-1. However, each SR of subcell-2 (Fig. 4) and subcell-3 (Fig. 6) of PU-1 is replaced by SRs of size 4P and 2MP, respectively, to obtain the corresponding subcells of PU-2. PU-2 calculates one complete row of N/4 coefficients of all the eight subband matrices in 16P cycles, and completes the 2-level decomposition in MPR cycles. Similarly, the low-low subband components $Z^{J-1}_{lll}$ are buffered in $IB_J$ of size $MN/2^{2J-1}$ words. The Jth PU comprises $Q/2^{3J-2}$ identical modules, and receives a block of $Q/2^{3J-3}$ intermediate outputs from $IB_J$ in every cycle, where $Q/2^{3J-3} \ge 2$. The SR sizes of subcell-2 and subcell-3 of each module of the Jth PU are, respectively, $4^{J-1}P$ and $2^{J-1}MP$ words. It calculates one row of N/4 coefficients of a pair of subbands in $4^{J-1}P$ cycles, and one row of all the eight subband matrices in $4^{J}P$ cycles. It takes MPR cycles to complete the calculation of all the eight subbands [each of size $(MR/4^J) \times (N/2^J)$]. The PUs of the proposed structure work in separate pipeline stages, and compute the J-level 3-D DWT of an input video stream of size (M × N × R) in MPR cycles. The latency of the proposed structure is estimated to be

$$\text{Latency} = \sum_{j=1}^{J} (\mathrm{PU}_j)_{\text{latency}} = \sum_{j=1}^{J} \left[ K + 4^{j-1}(K-1)P + 2^{j-1}(K-1)MP \right] \approx KJ + \frac{4^{J}(K-1)P}{3} + 2^{J}(K-1)MP. \tag{8}$$

Note that a delay of $4^{J}(K-1)P/3 + 2^{J}(K-1)MP$ cycles is introduced to fill the SRs of subcell-2 and the intermediate-buffer of each module, where K is the filter order.
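The per-PU latency terms in (8) form two geometric series, so the closed form can be compared against the term-by-term sum. The sketch below does this with exact rational arithmetic. The function names are hypothetical, and the parameter values used for checking are arbitrary.

```python
from fractions import Fraction

def latency_sum(J, K, P, M):
    """Term-by-term sum of the per-PU latencies in (8)."""
    return sum(K + 4 ** (j - 1) * (K - 1) * P + 2 ** (j - 1) * (K - 1) * M * P
               for j in range(1, J + 1))

def latency_closed_form(J, K, P, M):
    """Closed-form estimate quoted in (8)."""
    return K * J + Fraction(4 ** J * (K - 1) * P, 3) + 2 ** J * (K - 1) * M * P

def latency_gap(K, P, M):
    """Amount by which the closed form exceeds the term-by-term sum."""
    return Fraction((K - 1) * P, 3) + (K - 1) * M * P
```

Summing the series exactly gives $(4^J - 1)/3$ and $(2^J - 1)$ as the multipliers of $(K-1)P$ and $(K-1)MP$, so the quoted closed form overestimates the sum by $(K-1)P/3 + (K-1)MP$ cycles regardless of J. That gap is about one level-1 latency term, so the estimate is tight only in relative terms when J is large.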

V. Hardware and Time Complexity

The proposed 3-D DWT structure for J levels of decomposition involves J processing units, where $J = \log_8(Q/2) + 1$. Each of the processing units (PUs) operates in a separate pipeline stage. The jth PU involves $Q/2^{3j-2}$ identical modules to perform the 3-D DWT of the jth level with 100% hardware utilization efficiency. Each module has three subcells (subcell-1, subcell-2, and subcell-3).

Each subcell of the proposed structure requires 2K multipliers and (2K − 2) adders. In addition, subcell-2 and the intermediate-buffer, respectively, involve $2^{2j-1}(K-1)P$ and $2^{j-1}(2K-1)MP$ delay registers. Each module, therefore, involves 6K multipliers, 6(K − 1) adders, and $2^{2j-1}(K-1)P + 2^{j-1}(2K-1)MP$ registers. Along with the $Q/2^{3j-2}$ processing modules, the jth PU (except the first PU) involves an input-buffer ($IB_j$) of size $MN/2^{2j-1}$, for $2 \le j \le J$. The size of each subband matrix of the jth-level 3-D DWT is $[(MR/4^j) \times (N/2^j)]$ for input size (M × N × R). Eight such subband matrices are computed by the jth PU in MPR cycles. The PUs of the proposed pipeline structure concurrently calculate the 3-D DWT of an input video stream of size (M × N) and frame-rate R in approximately MPR cycles. The hardware complexity of the proposed structure for J-level 3-D DWT is estimated as follows.

Number of multipliers:

$$3KQ + \frac{3KQ}{8} + \cdots + \frac{6KQ}{2^{3J-2}} = \frac{24}{7} KQ \left(1 - 2^{-3J}\right)$$

Number of adders:

$$3(K-1)Q + \frac{3(K-1)Q}{8} + \cdots + \frac{6(K-1)Q}{2^{3J-2}} = \frac{24}{7} (K-1)Q \left(1 - 2^{-3J}\right)$$

Number of pipeline/data registers:

$$\left[(K-1)N + \frac{(2K-1)MN}{2}\right] + \left[\frac{(K-1)N}{2} + \frac{(2K-1)MN}{8}\right] + \cdots + \left[\frac{(K-1)N}{2^{J-1}} + \frac{(2K-1)MN}{2^{2J-1}}\right] = 2(K-1)N\left(1 - 2^{-J}\right) + \frac{2}{3}(2K-1)\left(1 - 2^{-2J}\right)MN$$

Input-buffer size in words:

$$\frac{MN}{8} + \frac{MN}{32} + \cdots + \frac{MN}{2^{2J-1}} = \frac{MN\left(1 - 2^{-2J+2}\right)}{6}$$
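The four closed forms above follow from summing the per-PU resource counts over the J levels. The sketch below accumulates the per-module figures given in the text (6K multipliers, 6(K − 1) adders, $2^{2j-1}(K-1)P + 2^{j-1}(2K-1)MP$ registers, and an input-buffer of $MN/2^{2j-1}$ words for $j \ge 2$) and compares the totals with the closed forms. The function names are hypothetical, and the parameter values in the check are arbitrary.

```python
from fractions import Fraction

def hardware_by_summation(J, K, Q, M, N):
    """Accumulate multipliers, adders, registers and input-buffer words per PU."""
    P = Fraction(N, Q)                      # folding factor, N = P * Q
    mult = add = reg = buf = Fraction(0)
    for j in range(1, J + 1):
        modules = Fraction(Q, 2 ** (3 * j - 2))
        mult += modules * 6 * K
        add += modules * 6 * (K - 1)
        reg += modules * (2 ** (2 * j - 1) * (K - 1) * P
                          + 2 ** (j - 1) * (2 * K - 1) * M * P)
        if j >= 2:                          # PU-1 has no input-buffer
            buf += Fraction(M * N, 2 ** (2 * j - 1))
    return mult, add, reg, buf

def hardware_closed_forms(J, K, Q, M, N):
    """Closed-form totals as listed in the text."""
    mult = Fraction(24, 7) * K * Q * (1 - Fraction(1, 8 ** J))
    add = Fraction(24, 7) * (K - 1) * Q * (1 - Fraction(1, 8 ** J))
    reg = (2 * (K - 1) * N * (1 - Fraction(1, 2 ** J))
           + Fraction(2, 3) * (2 * K - 1) * (1 - Fraction(1, 4 ** J)) * M * N)
    buf = Fraction(M * N, 6) * (1 - Fraction(4, 4 ** J))
    return mult, add, reg, buf
```

Note that the register and input-buffer totals depend on M and N but not on Q, which is the property the architecture relies on when the throughput is scaled.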

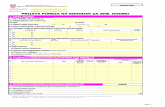

A. Performance Comparison

The hardware and time complexity of the proposed structure and the existing structures of [16], [19], and [20] are listed in Table I in terms of cycle period, ACT in clock cycles, storage space and frame-buffer size in words, along with the number of multipliers and adders, for comparison. The overall hardware complexity of the structures has two major components: 1) the complexity of the arithmetic units, and 2) the on-chip storage and external frame-buffer. When the frame-size (M × N) is small, the complexity of the arithmetic units is more significant than that of the storage component. But the frame-size for video processing varies from as low as 176 × 144 for 3G phones to 640 × 480 in standard-definition format and 1280 × 720 in the commonly used high-definition format, while the frame-rate can vary from 15 f/s in mobile phones to 60 f/s for HDTV applications. Therefore, in practice, the on-chip storage and frame-buffer make the dominant contribution to the hardware of the overall structure.

As shown in Table I, the proposed structure requires $4Q/7$ times more multipliers and adders compared to those of [16] and [19]. Compared with [20], the proposed one requires $Q/7$ times more multipliers and adders. The on-chip storage of the proposed structure is $4/3$ times and $2M/3R$ times that of the structures of [19] and [20], respectively. Compared with the 3DW-I and 3DW-II structures of [16], it involves nearly $2K/3R$ times less and $4MN/3K^2$ times more on-chip storage. But it is interesting to note that, unlike the existing structures, the frame-buffer of the proposed structure is independent of the frame-rate $R$; it involves $(3R/4)$ times less frame-buffer than the others, and offers a higher throughput rate. Moreover, the proposed structure provides $4Q/7$ and $Q/7$ times higher throughput per cycle compared with ([16], [19]) and [20], respectively, with a slightly higher clock period. Compared with the block-based 3DW-II structure of [16], the proposed one can offer $K^2Q/7$ times higher throughput rate. Since the frame-buffer as well as the on-chip storage of the proposed structure is independent of the input block size ($Q$), the throughput per clock cycle of the proposed structure can be increased proportionately, by using more processing modules, without increasing its memory component. When higher processing speed is not required, the structure could be operated by a slower clock, where speed could be traded for power by scaling down the operating voltage [24]. Alternatively, the multiplications and additions of the proposed structure either could be time-multiplexed inside the processing modules, or they could be implemented by slower hardware-efficient multipliers and adders. Moreover, the proposed structure involves a small output latency of $O(MN/Q)$, and performs the multilevel 3-D DWT computation with 100% utilization efficiency.

¹ACT is the number of cycles required for the computation of all the $J$ levels of 3-D DWT after the initial latency. In the case of the proposed structures, it is calculated by dividing the total number of 3-D DWT coefficients by the throughput per cycle. In the case of the structures of [19] and [20], the ACT is calculated as the sum of the ACTs of the individual levels, because they compute the 3-D DWT of different levels sequentially. For the proposed structures the ACT is $MNR/Q$ cycles, since all the levels of multilevel DWT computation are performed in separate pipeline stages.
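The ACT expressions of Table I can be evaluated for a concrete case to see how the throughput ratios quoted above arise. The sketch below is illustrative only: the function name `act_cycles` and the dictionary keys are hypothetical, and the parameters (176 × 144 × 15, J = 2, K = 4, Q = 16) are those used in Table III.

```python
from fractions import Fraction


def act_cycles(M, N, R, J, K, Q):
    """ACT (in cycles) of each structure, following the expressions of Table I."""
    x1 = 1 - Fraction(1, 2 ** (3 * J))
    mnr = M * N * R
    return {
        "3DW-I [16]": Fraction(4, 7) * mnr * x1,
        "3DW-II [16]": Fraction(1, 7) * (K * K + 2 * K + 4) * mnr * x1,
        "Das [19]": Fraction(4, 7) * mnr * x1,
        "Dai [20]": Fraction(1, 7) * mnr * x1,
        "Proposed": Fraction(mnr, Q),   # all levels run in separate pipeline stages
    }


act = act_cycles(M=176, N=144, R=15, J=2, K=4, Q=16)
# Throughput gain of the proposed structure is the ratio of the ACTs
gain_over_dai = act["Dai [20]"] / act["Proposed"]
gain_over_das = act["Das [19]"] / act["Proposed"]
```

With these values the ACTs are 213 840 cycles for [19], 53 460 cycles for [20], and 23 760 cycles for the proposed structure, so the gain over [20] is 2.25 and the gain over [19] is 9, close to the $Q/7$ and $4Q/7$ factors stated in the text (the small difference is the factor $x_1 = 1 - 2^{-3J}$).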

Since the throughput rates of the existing structures and the proposed structures are different, we have compared the area-delay-products pertaining to different hardware components, such as the multiplier-delay-product (MDP), adder-delay-product (ADP), storage-word-delay-product (SWDP), and frame-buffer-delay-product (FBDP), estimated by the product of the respective hardware component with the computation-time, where the computation time is estimated as the product of cycle time with ACT in cycles (since the structures are fully pipelined and there is no inter-frame latency). We have estimated the MDP, ADP, SWDP, and FBDP of the proposed structure and those of the existing structures, and listed them in Table II. The proposed structure is found to involve nearly 1.33 times more MDP and ADP than the structures of [19], [20], and 3DW-I of [16] for the first level DWT computation, if we assume the multiplication-time to be twice the addition-time.² For each of the subsequent levels, the MDP and ADP of the proposed structure fall by a factor of 8. The SWDPs of the 3DW-I structure of [16], and the structures of [19] and [20] are, respectively, $QR$ times, $Q$ times, and $QR/M$ times higher than that of the proposed structure. However, the SWDP of the block-based (3DW-II) structure of [16] is $(MN/K^4Q)$ times less than that of the proposed structure. It is found that the FBDP of the proposed structure is $QR$ times less than that of the existing structures.
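The numerical coefficients of the MDP and FBDP entries follow from multiplying the component count, the ACT, and the cycle period of Table I. The following sketch reproduces them under the stated assumption $T_M = 2T_A$; the variable names are hypothetical, and the factor $x_4$ is left out of the proposed FBDP, as in the corresponding table entry.

```python
from fractions import Fraction

T_A = Fraction(1)   # addition time, used as the unit of delay
T_M = 2 * T_A       # assumption stated in the text: T_M = 2 T_A

# MDP coefficient of K*M*N*R*x1*T_A = (multipliers / K) * (ACT / (M*N*R*x1)) * cycle period
mdp_3dw1_das = 6 * Fraction(4, 7) * (T_M + T_A)      # structures of [16] (3DW-I) and [19]
mdp_dai = 24 * Fraction(1, 7) * (T_M + T_A)          # structure of [20]
# Proposed: (24/7)*K*Q*x1 multipliers, ACT = M*N*R/Q, cycle period T_M + 2*T_A
mdp_proposed = Fraction(24, 7) * (T_M + 2 * T_A)

# FBDP coefficient: frame-buffer words * ACT * cycle period
fbdp_existing = Fraction(1, 8) * Fraction(4, 7) * (T_M + T_A)   # times M^2 N^2 R^2 x1 T_A
fbdp_proposed = Fraction(1, 6) * (T_M + 2 * T_A)                # times M^2 N^2 R T_A / Q

mdp_ratio = mdp_proposed / mdp_dai
```

The MDP coefficients evaluate to 72/7 ≈ 10.28 for the existing structures and 96/7 ≈ 13.71 for the proposed one, a ratio of exactly 4/3, which is the "nearly 1.33 times" quoted above. The FBDP coefficients evaluate to 3/14 and 2/3.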

The hardware and time-complexities pertaining to frame-size 176 × 144 and frame-rates of 15, 30, and 60 f/s are estimated for the proposed structure for input block sizes (16, 48, 72, 144) and for $J = 2$, and compared with those of the existing structures in Table III. As shown in Table III, the structure of [20] involves four times the multipliers and adders of [19], but offers nearly four times more throughput than the latter. The structure of [20] requires nearly 11.6 times less on-chip storage for the Daub-4 filter compared to that of [19] for 15 f/s. The block-based structure of [16] requires nearly 78.2 times less on-chip storage for Daub-4 filters and offers nearly 28 times less throughput rate than that of [20] for 15 f/s. As shown in Table III, the proposed structure for the Daub-4 filter requires nearly (2.25, 6.75, 10.125, 20.25) times more multipliers and adders than that of [20] for input block sizes (Q = 16, 48, 72, 144), respectively, and provides nearly (2.25, 6.75, 10.125, 20.25) times more throughput rate over the latter. For 15 f/s, it requires 12.73 times more on-chip storage than the structure of [20], but for 60 f/s, it involves 3.27 times more on-chip storage than that of [20] for input block sizes (Q = 16, 48, 72, 144). The storage-complexity of the proposed structure remains the same for different frame-rates, but in the case of [20] the on-chip storage as well as the frame-buffer size increase linearly with the frame-rate.

To estimate the transistor counts, we have assumed ripple-

carry adders (RCA) and RCA-based multipliers of 8-bit input

²We have synthesized the multipliers and adders for 8-bit and 12-bit signed as well as unsigned numbers by Synopsys Design Compiler using the DesignWare building block library and estimated the multiplication-time and addition-time pertaining to TSMC 90 nm process technology. The average multiplication-time is found to be nearly 2.1 times the average addition-time.


TABLE I

General Comparison of Hardware and Time-Complexities of the Proposed Structure and the Existing Structures

| Structures | Multipliers | Adders | On-Chip Storage | Frame-Buffer | Cycle Period | ACT |
|---|---|---|---|---|---|---|
| Weeks et al. [16] (3DW-I) | $6K$ | $6(K-1)$ | $2MNR + 2MN + 6K$ | $\frac{1}{8}MNR$ | $T_M + T_A$ | $\frac{4}{7}MNR\,x_1$ |
| Weeks et al. [16] (3DW-II) | $2K$ | $2(K-1)$ | $K^3 + 2K^2 + 4K$ | $MNR$ | $T_M + T_A$ | $\frac{1}{7}(K^2 + 2K + 4)MNR\,x_1$ |
| Das et al. [19] | $6K$ | $6(K-1)$ | $KMN + (K-2)N + 2K$ | $\frac{1}{8}MNR$ | $T_M + T_A$ | $\frac{4}{7}MNR\,x_1$ |
| Dai et al. [20] | $24K$ | $24(K-1)$ | $2R(K-2)(N+2) + 8K$ | $\frac{1}{8}MNR$ | $T_M + T_A$ | $\frac{1}{7}MNR\,x_1$ |
| Proposed | $\frac{24}{7}KQ\,x_1$ | $\frac{24}{7}(K-1)Q\,x_1$ | $2(K-1)N\,x_2 + 2(2K-1)MN\,x_3/3$ | $MN\,x_4/6$ | $T_M + 2T_A$ | $MNR/Q$ |

$J$: maximum of levels of 3-D DWT decomposition, $M$: image height, $N$: image width, $R$: frame-rate, $K$: order of low-pass/high-pass filter. $x_1 = (1 - 2^{-3J})$, $x_2 = (1 - 2^{-J})$, $x_3 = (1 - 2^{-2J})$, $x_4 = (1 - 2^{-2J+2})$, $K$ is the filter length, $N$ is power of 2.

TABLE II

Comparison of Hardware-Component-Delay Product of Proposed Structure and the Existing Structures

| Structures | MDP | ADP | SWDP | FBDP |
|---|---|---|---|---|
| Weeks et al. [16] (3DW-I) | $10.28\,KMNR\,x_1T_A$ | $10.28(K-1)MNR\,x_1T_A$ | $3.42(MNR + MN + 3K)MNR\,x_1T_A$ | $\frac{3}{14}M^2N^2R^2x_1T_A$ |
| Weeks et al. [16] (3DW-II) | $0.85\,KMNR\,z\,x_1T_A$ | $0.85(K-1)MNR\,z\,x_1T_A$ | $0.42(K^3 + 2K^2 + 4K)MNR\,z\,x_1T_A$ | $\frac{3}{7}M^2N^2R^2z\,x_1T_A$ |
| Das et al. [19] | $10.28\,KMNR\,x_1T_A$ | $10.28(K-1)MNR\,x_1T_A$ | $1.71((KM + (K-2))N + 2K)MNR\,x_1T_A$ | $\frac{3}{14}M^2N^2R^2x_1T_A$ |
| Dai et al. [20] | $10.28\,KMNR\,x_1T_A$ | $10.28(K-1)MNR\,x_1T_A$ | $0.85(R(K-2)(N+2) + 4K)MNR\,x_1T_A$ | $\frac{3}{14}M^2N^2R^2x_1T_A$ |
| Proposed | $13.71\,KMNR\,x_1T_A$ | $13.71(K-1)MNR\,x_1T_A$ | $8[(K-1)x_2 + (2K-1)M\,x_3/3]MN^2R\,T_A/Q$ | $\frac{2}{3Q}M^2N^2R\,T_A$ |

$K$: filter length, $J$: 3-D DWT levels, $M$: image height, $N$: image width, $R$: frame-rate. MDP: multiplier-delay-product, ADP: adder-delay-product, SWDP: storage-word-delay product, FBDP: frame-buffer-delay product. $x_1 = (1 - 2^{-3J})$, $y = (1 - 2^{-J})$, $x_2 = (1 - 2^{-3J+3})$, $z = (K^2 + 2K + 4)$. We have assumed $T_M = 2T_A$.

TABLE III

Comparison of Hardware and Time-Complexities of the Proposed and the Existing Structures for Different Video Applications Using Four-Tap Daub-4 Wavelet Filters, J = 2

| Structures | Frame-Size and Rate (M × N × R) | Multipliers | Adders | On-Chip Storage (Register Words) | Frame-Buffer (RAM Words) | ACT in Cycles |
|---|---|---|---|---|---|---|
| Das et al. [19] | (176 × 144 × 15) | 24 | 18 | 101 672 | 47 520 | 213840 |
| Dai et al. [20] | (176 × 144 × 15) | 96 | 72 | 8760 | 47 520 | 53460 |
| Weeks et al. [16] 3DW-II | (176 × 144 × 15) | 8 | 6 | 112 | 380 160 | 1496880 |
| Proposed (Q = 16) | (176 × 144 × 15) | 216 | 162 | 111 528 | 3168 | 23760 |
| Proposed (Q = 48) | (176 × 144 × 15) | 648 | 486 | 111 528 | 3168 | 7920 |
| Proposed (Q = 72) | (176 × 144 × 15) | 972 | 729 | 111 528 | 3168 | 5280 |
| Proposed (Q = 144) | (176 × 144 × 15) | 1944 | 1458 | 111 528 | 3168 | 2640 |
| Das et al. [19] | (176 × 144 × 30) | 24 | 18 | 101 672 | 95 040 | 427680 |
| Dai et al. [20] | (176 × 144 × 30) | 96 | 72 | 17 520 | 95 040 | 106920 |
| Weeks et al. [16] 3DW-II | (176 × 144 × 30) | 8 | 6 | 112 | 760 320 | 2993760 |
| Proposed (Q = 16) | (176 × 144 × 30) | 216 | 162 | 111 528 | 3168 | 47520 |
| Proposed (Q = 48) | (176 × 144 × 30) | 648 | 486 | 111 528 | 3168 | 15840 |
| Proposed (Q = 72) | (176 × 144 × 30) | 972 | 729 | 111 528 | 3168 | 10560 |
| Proposed (Q = 144) | (176 × 144 × 30) | 1944 | 1458 | 111 528 | 3168 | 5280 |
| Das et al. [19] | (176 × 144 × 60) | 24 | 18 | 101 672 | 190 080 | 855360 |
| Dai et al. [20] | (176 × 144 × 60) | 96 | 72 | 34 042 | 190 080 | 213840 |
| Weeks et al. [16] 3DW-II | (176 × 144 × 60) | 8 | 6 | 112 | 1 520 640 | 5987520 |
| Proposed (Q = 16) | (176 × 144 × 60) | 216 | 162 | 111 528 | 3168 | 95040 |
| Proposed (Q = 48) | (176 × 144 × 60) | 648 | 486 | 111 528 | 3168 | 31680 |
| Proposed (Q = 72) | (176 × 144 × 60) | 972 | 729 | 111 528 | 3168 | 21120 |
| Proposed (Q = 144) | (176 × 144 × 60) | 1944 | 1458 | 111 528 | 3168 | 10560 |

M: image height, N: image width, R: frame-rate.


Fig. 8. Transistor count of proposed structure and existing structures using Daub-4 filters of frame-size (176 × 144) and frame-rate 15 f/s.

Fig. 9. Transistor count of proposed structure and existing structures using Daub-4 filters of frame-size (176 × 144) and frame-rate 60 f/s.

width for all the structures. The on-chip storage and the frame-buffer are assumed to be of D flip-flops and SRAM, respectively. The multiplier, adder, 8-bit register, and 8-bit SRAM are taken to be 1085, 248, 128, and 48 transistors, respectively [25]. The transistor counts of the proposed structure using Daub-4 filters are estimated accordingly for 2-level decompositions for input frame-size (176 × 144) and frame-rates 15 and 60 f/s (shown in Figs. 8 and 9). It can be observed from these figures that, for 15 f/s, the structure of [20] involves considerably fewer transistors than all the other structures. The proposed structures for Q = 16, 48, 72, and 144, respectively, involve 4.17, 4.32, 4.44, and 4.79 times more transistors than that of [20] and offer 2.25, 6.75, 10.125, and 20.25 times more throughput rate. For 60 f/s (Fig. 9), the proposed structures involve 1.08, 1.12, 1.15, and 1.24 times more transistors than the structure of [20], but offer nearly 2.25, 6.75, 10.125, and 20.25 times more throughput than the latter. An interesting feature of the proposed structures is that their throughput rate can easily be scaled by simply increasing the number of processing modules without increasing the on-chip storage and frame-buffer size. The transistor counts of the proposed structures therefore do not increase remarkably with the throughput rate.
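The transistor-count ratios quoted above can be reproduced from the component counts of Table III and the per-component transistor costs given in the text. The sketch below is illustrative; the names `COST` and `transistors` are hypothetical, and the component counts are copied from Table III as printed.

```python
# Transistors per component, as stated in the text (8-bit word width)
COST = {"multiplier": 1085, "adder": 248, "register": 128, "sram": 48}


def transistors(mult, add, reg_words, ram_words):
    """Total transistor count for a structure with the given component counts."""
    return (mult * COST["multiplier"] + add * COST["adder"]
            + reg_words * COST["register"] + ram_words * COST["sram"])


# Structure of [20] at 15 and 60 f/s (Table III rows)
dai = {15: transistors(96, 72, 8760, 47520),
       60: transistors(96, 72, 34042, 190080)}

# Proposed structure: storage and frame-buffer do not depend on Q or on the frame-rate
arith = {16: (216, 162), 48: (648, 486), 72: (972, 729), 144: (1944, 1458)}
proposed = {Q: transistors(m, a, 111528, 3168) for Q, (m, a) in arith.items()}

ratios = {fps: [proposed[Q] / dai[fps] for Q in (16, 48, 72, 144)]
          for fps in (15, 60)}
```

The storage terms dominate both totals, which is why the ratio grows only from about 4.17 to about 4.79 at 15 f/s while Q, and hence the throughput, grows by a factor of 9.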

We have coded the proposed structure (based on Daub-4 filters) for input block sizes Q = 16, 48, 72, and 144 for the first-level decomposition of input-size (176 × 144 × 15), in very high speed integrated circuit hardware description language (VHDL) and synthesized it using Xilinx ISE 11i. The proposed designs are then implemented on the Virtex-5 field programmable gate array (FPGA) platform using the 5VLX330 device. The results obtained from the synthesis report are listed in Table IV in terms of number of slices and maximum clock frequency in MHz. The designs for different input block sizes have the same cycle period, as expected from the theoretical estimation shown in the comparison Table I. It can be observed from Table IV that the proposed design for Q = 48, 72, and 144,

TABLE IV

Synthesis Results of Proposed Structures for FPGA Device 5VLX330

| Proposed Designs | Number of Slices | Max. Clock Frequency |
|---|---|---|
| Q = 16 | 29 812 | 582.74 MHz |
| Q = 48 | 43 898 | 582.74 MHz |
| Q = 72 | 53 532 | 582.74 MHz |
| Q = 144 | 82 008 | 582.74 MHz |

respectively, involves 1.47, 1.79, and 2.75 times more slices than that required for Q = 16 and offers, respectively, 3, 4.5, and 9 times more throughput rate. The increase in the number of slices could be due to differences in the way adders and multipliers are implemented in the FPGA, although we find that the transistor count does not increase remarkably with Q.
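The slice and throughput ratios stated above follow directly from Table IV. As a brief sketch (the variable names are hypothetical, and the throughput is assumed to scale with Q because all four designs report the same maximum clock frequency):

```python
# Slice counts from Table IV, keyed by input block size Q
slices = {16: 29812, 48: 43898, 72: 53532, 144: 82008}

base_q = 16
slice_ratio = {Q: n / slices[base_q] for Q, n in slices.items()}
# With an identical clock frequency, throughput scales with Q samples per cycle
throughput_ratio = {Q: Q / base_q for Q in slices}
# Throughput gained per unit of additional slice cost, relative to Q = 16
gain_per_slice = {Q: throughput_ratio[Q] / slice_ratio[Q] for Q in slices}
```

Under this assumption the throughput per slice improves steadily with Q, since the slice count grows much more slowly than the number of samples processed per cycle.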

VI. Conclusion

A throughput-scalable parallel and pipeline architecture was proposed for high-throughput computation of multilevel 3-D DWT with 100% hardware utilization efficiency. Each level of 3-D DWT computation was split into three distinct stages, and the computations of all three stages are implemented concurrently in a parallel array of pipelined processing modules. For multilevel DWT computation, we have proposed a cascaded structure where each level of decomposition is performed by a processing unit in a separate pipeline stage. An interesting feature of the proposed structure is that it involves a smaller frame-buffer than the existing structures, and the size of the frame-buffer is independent of the frame-rate. Besides, the size of the on-chip storage and frame-buffer is independent of the input block size. The latency of the proposed structure for multilevel 3-D DWT is $O(MN/Q)$ while that of the existing structures is $O(MNR)$, where $(M \times N)$ is the frame-size. Compared with the best of the existing structures, we find that for frame-size (176 × 144) and frame-rates of 15 and 60 f/s, the proposed structure for input block sizes (16, 48, 72, 144) involves, respectively, (4.17, 4.32, 4.44, 4.79) and (1.08, 1.12, 1.15, 1.24) times more transistors than the structure of [20] and offers (2.25, 6.75, 10.125, 20.25) times more throughput rate. The overall area-delay products of the proposed structure are significantly lower than those of the existing structures, although it involves slightly more multiplier-delay product and more adder-delay product, since it involves significantly less frame-buffer and storage-word-delay product. It was found that the throughput rate of the proposed structure can easily be scaled without increasing the on-chip storage and frame-memory by using more processing modules, and that for higher frame-rates and larger input block-sizes it provides greater advantage over the existing designs. It was also found that the full-parallel implementation of the proposed scalable structure provides the best of its performance. But the computational speed of the full-parallel structure on one hand might be too high for the required application, and on the other hand it may involve too large a hardware unit. Therefore, when higher processing speed is not required, the structure could be operated by a slower clock, where speed could be traded for power by scaling down the operating voltage [24]. To see the other possibilities of using the proposed high-throughput structure, we have shown that it could be implemented in FPGA for slow and low-cost implementation. Alternatively, the multiplications and additions of the proposed structure could be time-multiplexed inside the processing modules, and/or could be implemented by slower (possibly bit-serial or digit-serial) but hardware-efficient multipliers and adders, such that the clock period will increase and the hardware requirement could be reduced. Future work still needs to be carried out to determine how the full-parallel structure could be used for slow and low-cost implementation by appropriate algorithm-architecture co-design to match the real-time processing requirement.

References

[1] A. Wang, Z. Xiong, P. A. Chou, and S. Mehrotra, "3-D wavelet coding of video with global motion compensation," in Proc. IEEE CS Data Compression Conf., May 1999, p. 404.

[2] G. Minami, Z. Xiong, A. Wang, and S. Mehrotra, "3-D wavelet coding of video with arbitrary regions of support," IEEE Trans. Circuit Syst. Video Technol., vol. 11, no. 9, pp. 1063–1068, Sep. 2001.

[3] A. M. Baskurt, H. Benoit-Cattin, and C. Odet, "3-D medical image coding method using a separable 3-D wavelet transform," in Proc. SPIE Med. Imaging: Image Display, vol. 2431. Apr. 1995, pp. 173–183.

[4] V. Sanchez, P. Nasiopoulos, and R. Abugharbieh, "Lossless compression of 4D medical images using H.264/AVC," in Proc. IEEE ICASSP, vol. II. May 2006, pp. 1116–1119.

[5] J. Wei, P. Saipetch, R. K. Panwar, D. Chen, and B. K. Ho, "Volumetric image compression by 3-D DWT," in Proc. SPIE Med. Imaging: Image Display, vol. 2431. Apr. 1995, pp. 184–194.

[6] L. Anqiang and L. Jing, "A novel scheme for robust video watermark in the 3-D DWT domain," in Proc. ISDPE, Nov. 2007, pp. 514–516.

[7] A. Grzeszczak, M. K. Manal, S. Panchanathan, and T. H. Yeap, "VLSI implementation of discrete wavelet transform," IEEE Trans. VLSI Syst., vol. 4, no. 4, pp. 421–433, Dec. 1996.

[8] J. C. Limpueco and M. A. Bayoumi, "A VLSI architecture for separable 2-D discrete wavelet transform," J. VLSI Signal Process. Syst., vol. 18, pp. 125–140, Feb. 1998.

[9] C. Chrysafis and A. Ortega, "Line-based reduced memory, wavelet image compression," IEEE Trans. Image Process., vol. 9, no. 3, pp. 378–389, Mar. 2000.

[10] F. Marino, "Efficient high-speed/low-power pipelined architectures for direct 2-D wavelet transform," IEEE Trans. Circuit. Syst. II: Analog Digital Signal Process., vol. 47, no. 12, pp. 1476–1491, Dec. 2000.

[11] F. Marino, "Two fast architectures for direct 2-D wavelet transform," IEEE Trans. Signal Process., vol. 49, no. 6, pp. 1248–1259, Jun. 2001.

[12] C. Yu and S. J. Chen, "VLSI Implementation of 2-D wavelet transform for real-time video signal processing," IEEE Trans. Consumer Electron., vol. 43, no. 4, pp. 1270–1279, Nov. 1997.

[13] P.-C. Wu and L.-G. Chen, "An efficient architecture for 2-D discrete wavelet transform," IEEE Trans. Circuit Syst. Video Technol., vol. 11, no. 4, pp. 536–545, Apr. 2001.

[14] S. B. Pan and R. H. Park, "Systolic array architectures for computation of the discrete wavelet transform," J. Visual Commun. Image Representat., vol. 14, no. 3, pp. 217–231, Sep. 2003.

[15] C.-T. Huang, P.-C. Tseng, and L.-G. Chen, "Generic RAM-based architectures for 2-D discrete wavelet transform with line-based method," IEEE Trans. Circuit Syst. Video Technol., vol. 15, no. 7, pp. 910–920, Jul. 2005.

[16] M. Weeks and M. A. Bayoumi, "3-D discrete wavelet transform architecture," IEEE Trans. Signal Process., vol. 50, no. 8, pp. 2050–2063, Aug. 2002.

[17] M. Weeks and M. A. Bayoumi, "Wavelet transform: Architecture, design and performance issues," J. VLSI Signal Process., vol. 35, no. 2, pp. 155–178, Sep. 2003.

[18] W. Badawy, M. Talley, G. Zhang, M. Weeks, and M. A. Bayoumi, "Low power very large scale integration prototype for 3-D discrete wavelet transform processor with medical application," J. Electron. Imaging, vol. 12, no. 2, pp. 270–277, Apr. 2003.

[19] B. Das and S. Banerjee, "A memory efficient 3-D DWT architecture," in Proc. 16th Int. Conf. VLSI Design, Aug. 2003, p. 208.

[20] Q. Dai, X. Chen, and C. Lin, "A novel VLSI architecture for multidimensional discrete wavelet transform," IEEE Trans. Circuit Syst. Video Technol., vol. 14, no. 8, pp. 1105–1110, Aug. 2004.

[21] ITRS. (2005). International Technology Roadmap for Semiconductors [Online]. Available: http://public.itrs.net/

[22] G. E. Moore, "Cramming more components onto integrated circuits," Proc. IEEE, vol. 86, no. 1, pp. 82–85, Jan. 1998.

[23] G. E. Moore, "Lithography and the future of Moore's law," in Proc. 12th SPIE Adv. Resist Technol. Process., vol. 2438. Jun. 1995, pp. 2–17.

[24] K. K. Parhi, VLSI Digital Signal Processing Systems: Design and Implementation. New York: Wiley, 1999.

[25] N. H. E. Weste and D. Harris, CMOS VLSI Design: A Circuits and Systems Perspective. Boston, MA: Pearson/Addison-Wesley, 2005.

[26] A. Vincent, D. Wang, and L. Zhang. (2006, Dec.). Codec CRC-WVC Outperforms H.264 Video with Wavelets [Online]. Available: http://www.byte.com/documents

Basant K. Mohanty (M'06) received the B.Sc. and M.Sc. degrees in physics from Sambalpur University, Orissa, India, in 1987 and 1989, respectively, and the Ph.D. degree in the field of VLSI for digital signal processing from Berhampur University, Orissa, in 2000.

In 1992, he was selected by the Orissa Public Service Commission to become a Faculty Member with the Department of Physics, SKCG College, Paralakhemundi, Orissa. In 2001, he was a Lecturer with the Department of Electrical and Electronic Engineering, BITS Pilani, Rajasthan, India. Then, he was an Assistant Professor with the Department of Electrical and Computer Engineering, Mody Institute of Science and Technology, Deemed University, Rajasthan. In 2003, he joined the Jaypee Institute of Engineering and Technology, Guna, Madhya Pradesh, India, as an Assistant Professor. He was promoted to Associate Professor in 2005 and Professor in 2007. He has published nearly 25 technical papers. His name has appeared in Marquis Who's Who in the World in 1999. His current research interests include design and implementation of re-configurable VLSI architectures for resource-constrained digital signal processing applications.

Dr. Mohanty is a lifetime member of the Institution of Electronics and Telecommunication Engineering, New Delhi, India.

Pramod Kumar Meher (SM'03) received the B.Sc. and M.Sc. degrees in physics and the Ph.D. degree in science from Sambalpur University, Sambalpur, India, in 1976, 1978, and 1996, respectively.

He has a wide scientific and technical background covering physics, electronics, and computer engineering. Currently, he is a Senior Scientist with the Institute for Infocomm Research, Singapore. Prior to this assignment he was a Visiting Faculty Member with the School of Computer Engineering, Nanyang Technological University, Singapore. Previously, he was a Professor of computer applications with Utkal University, Bhubaneswar, India, from 1997 to 2002, a Reader of Electronics with Berhampur University, Berhampur, India, from 1993 to 1997, and a Lecturer of physics with various government colleges in India from 1981 to 1993. He has published nearly 150 technical papers in various reputed journals and conference proceedings. His current research interests include design of dedicated and reconfigurable architectures for computation-intensive algorithms pertaining to signal processing, image processing, communication, and intelligent computing.

Dr. Meher is a Fellow of the Institution of Electronics and Telecommunication Engineers, India, and the Institution of Engineering and Technology, U.K. He is currently serving as an Associate Editor for the IEEE Transactions on Circuits and Systems II: Express Briefs, IEEE Transactions on Very Large Scale Integration Systems, and Journal of Circuits, Systems, and Signal Processing. He was the recipient of the Samanta Chandrasekhar Award for Excellence in Research in Engineering and Technology for 1999.