
A COMPUTATIONAL ALGEBRAIC APPROACH TO ENGLISH GRAMMAR

J. Lambek

Abstract. Whereas type-logical grammars treat syntactic derivations as logical proofs, usually represented by two-dimensional diagrams, I here wish to defend the view that people process linguistic information by one-dimensional calculations and will explore an algebraic approach based on the notion of a pregroup, a partially ordered monoid in which each element has both a left and a right adjoint. As a first approximation, say to English, one assigns to each word one or more syntactic types, elements of the free pregroup generated by a partially ordered set of basic types, in the expectation that the grammaticality of a string of words can be checked by a calculation on the corresponding types. This theoretical framework provides a simple foundation for a kind of feature checking that may be of general interest. According to G. A. Miller, there is a limit to the temporary storage capacity of our short-term memory, which cannot hold more than seven (plus or minus two) chunks of information at any time. I explore here the possibility of identifying these chunks with simple types, which are obtained from basic types by forming iterated adjoints. In a more speculative vein, I attempt to find out how so-called constraints on transformations can be framed in the present algebraic context.

1. Introduction

The present paper falls under the scope of what is known as categorial or type grammar. The basic assumptions underlying this kind of grammar are that sentences are made up of words, that sentencehood may be calculated from the syntactic types assigned to these words, and that the types live in a kind of logical or algebraic system.

This is not the place for a comparison of categorial grammar with mainstream syntactic theories such as the generative transformational approach and its current minimalist development or such as the generalized phrase structure approach, nor am I the person to carry out such a comparison. For a comparison of the present approach with earlier forms of categorial grammar, see Casadio and Lambek 2002. The present approach relies on an ordered algebraic system called a pregroup, which is a little more general than a partially ordered group. This concept is explained in section 2.

Traditional grammars are viewed as representing the knowledge of a language by speakers and hearers, as expressed by Chomsky's notion of competence. Pregroup grammars were introduced for the same reason and have been explored in describing portions of English, German, French, Italian, Polish, Latin, Arabic, and Japanese in a number of articles and two Master's theses. However, it was noticed early on that they are particularly well suited for calculations and permit

* I wish to thank two anonymous referees for their constructive criticism. I have tried to address most of their concerns in this revised text. Support from the Social Sciences and Humanities Research Council of Canada is herewith acknowledged.

© Blackwell Publishing Ltd, 2004. Published by Blackwell Publishing, 9600 Garsington Road, Oxford OX4 2DQ, UK and 350 Main Street, Malden, MA 02148, USA

Syntax 7:2, August 2004, 128–147


access to the study of what Chomsky calls performance. It is this aspect of pregroup grammar that the present article aims to explore.

In my opinion, perhaps not shared by most linguists, mental calculations take place in the single dimension of time, as does the so-called stream of consciousness. Whereas two-dimensional representations of parsing diagrams and proof trees are useful to the professional grammarian, I do not believe that they have any psychological reality. One might point out that Chomsky had already taken an early step in this direction when he replaced parsing trees by equivalent one-dimensional labeled bracketed strings.

I present the mathematical background for my present approach to grammar with some misgivings, as it might discourage some readers with a phobia about mathematics. I suggest that such readers skip section 2, retaining only the rewrite rule: if $a \to b$ then $b^\ell a \to 1$ and $a b^r \to 1$, and familiarizing themselves with the symbols $\pi_k$, $s_j$, $q_j$, and $o$.

2. Pregroups and Pregroup Grammar

Although monoids, in particular free monoids, are well known in formal language theory, some linguists may not be familiar with them. A monoid is a set equipped with a binary operation, here denoted by juxtaposition, satisfying the associative law $(ab)c = a(bc)$, and with a unity element 1 satisfying $a1 = a = 1a$.

A set is partially ordered if it is equipped with a binary relation, here denoted by an arrow, satisfying the reflexive, transitive, and antisymmetric rules:

$$a \to a; \qquad \frac{a \to b \quad b \to c}{a \to c}; \qquad \frac{a \to b \quad b \to a}{a = b}.$$

For a monoid to be partially ordered, we also require that the partial order be compatible with the binary operation, as expressed by the rule of inference

$$\frac{a \to b \quad c \to d}{ac \to bd}$$

or, equivalently, by the substitution rule

$$\frac{a \to b}{uav \to ubv}.$$

Linguists are invited to read $a \to b$ as "a may be rewritten as b."

A pregroup is a partially ordered monoid in which to each element a there are associated elements $a^\ell$ and $a^r$ satisfying $a^\ell a \to 1 \to a a^\ell$ and $a a^r \to 1 \to a^r a$. We call $a^\ell$ the left adjoint and $a^r$ the right adjoint of a. When the two adjoints coincide, the pregroup becomes what mathematicians call a partially ordered group.

It is easy to prove from the definition of a pregroup that adjoints are unique (hence my choice of the definite article in "the left adjoint") and that


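$$1^\ell = 1 = 1^r; \qquad a^{\ell r} = a = a^{r\ell};$$

$$(ab)^\ell = b^\ell a^\ell; \qquad (ab)^r = b^r a^r;$$

and that $a \to b$ implies $b^\ell \to a^\ell$ and $b^r \to a^r$. For readers who wish to see proofs of these assertions, here are some sample arguments.

Suppose $a^\ell$ and $a'$ are both left adjoints of a; then $a' = a'1 \to a'(a a^\ell) = (a'a)a^\ell \to 1a^\ell = a^\ell$ and similarly $a^\ell \to a'$, hence $a' = a^\ell$ and so left adjoints are unique.

To prove that $(ab)^\ell = b^\ell a^\ell$, it therefore suffices to show that $b^\ell a^\ell$ is a left adjoint of ab. Indeed

$$(b^\ell a^\ell)(ab) = b^\ell (a^\ell a) b \to b^\ell 1 b = b^\ell b \to 1$$

and

$$1 \to a a^\ell = a 1 a^\ell \to a (b b^\ell) a^\ell = (ab)(b^\ell a^\ell).$$

Thus $b^\ell a^\ell$ is a left adjoint of ab and so, because left adjoints are unique, $b^\ell a^\ell = (ab)^\ell$.

To prove that $a \to b$ implies $b^\ell \to a^\ell$, assume that $a \to b$; then

$$b^\ell = b^\ell 1 \to b^\ell a a^\ell \to b^\ell b a^\ell \to 1 a^\ell = a^\ell.$$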

A pregroup is said to be freely generated by a given partially ordered set A provided all elements of the pregroup are made up from the elements of A (and 1) by means of the binary operation (juxtaposition), and provided the pregroup admits no rules of the form $a \to b$ unless a and b are elements of A and $a \to b$ already holds in A.

For application to English grammar, we work with the free pregroup generated by a partially ordered set of basic types, such as:

$\pi_k$ = k-th person (k = 1, 2, 3), where k = 2 represents the modern second-person singular, as well as all three persons of the plural,
$s_j$ = declarative sentence in the j-th tense (j = 1, 2), with j = 1 for the present and j = 2 for the past,
$q_j$ = question in the j-th tense,
$i$ = infinitive of intransitive verb phrase,
$o$ = direct object,

and so on. Sometimes the feature indicated by the subscript j or k is irrelevant, so we omit it and postulate $s_j \to s$, $q_j \to q$, and $\pi_k \to \pi$.

From the basic types we build simple types by taking adjoints or repeated adjoints. Thus, from the basic type a we obtain simple types $\ldots, a^{\ell\ell}, a^\ell, a, a^r, a^{rr}, \ldots$ Compound types, or just types, are strings of simple types. They are the elements of the free pregroup generated by the set of basic types:


1 is the empty string;

multiplication is concatenation;

adjoints are defined inductively: $1^\ell = 1 = 1^r$, $(ab)^\ell = b^\ell a^\ell$, $(ab)^r = b^r a^r$;

the partial order is defined by the rules

$$\frac{a \to b}{b^\ell \to a^\ell}; \qquad \frac{a \to b}{b^r \to a^r}$$

and the substitution rule.

There is a metatheorem (Lambek 1999): to show that $a_1 \cdots a_m \to b_1 \cdots b_n$,

where the $a_i$ and $b_j$ are simple types, we may assume, without loss in generality, that all generalized contractions

$$b^\ell a \to 1, \qquad a b^r \to 1 \qquad (a \to b)$$

precede all generalized expansions

$$1 \to b a^\ell, \qquad 1 \to a^r b \qquad (a \to b).$$

In particular, when n = 1, as will be the case in most intended linguistic applications, only contractions are needed, although expansions may still play a theoretical role.

The importance of the metatheorem is that it offers a procedure for deciding, by a sequence of contractions, when a string of simple types can be rewritten as a single simple type, for instance the type of a sentence. If expansions were needed in addition to contractions, we could not be sure that the calculation would ever end.

Pregroups may be viewed as realizations of a certain substructural logical system, namely compact bilinear logic, just as Boolean algebras may be viewed as realizations of the classical propositional calculus. Wojciech Buszkowski (2002) has shown that the above metatheorem gives rise to a cut-elimination theorem for compact bilinear logic. Note, however, that this logic is rather unorthodox, inasmuch as it identifies bilinear analogues of disjunction and conjunction.
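The decision procedure mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the author's own formulation: a simple type is encoded as a pair `(base, z)`, where `z = 0` is the basic type, `z = -1` its left adjoint, `z = -2` its double left adjoint, and `z = +1` its right adjoint; the names `pi3`, `s1`, and so on are ASCII stand-ins for the basic types, and `POSTULATES`, `leq`, `contracts`, and `reduces_to` are hypothetical helper names. The search tries every planar way of pairing off simple types by generalized contractions and lets exactly one simple type survive.

```python
from functools import lru_cache

# Assumed fragment of the order on basic types (pi_k -> pi, s_j -> s, q_j -> q).
POSTULATES = {("pi1", "pi"), ("pi2", "pi"), ("pi3", "pi"),
              ("s1", "s"), ("s2", "s"), ("q1", "q"), ("q2", "q")}

def leq(a, b):
    """a -> b between basic types (reflexive-transitive closure of POSTULATES)."""
    return a == b or any(x == a and leq(y, b) for x, y in POSTULATES)

def simple_leq(x, y):
    """x -> y between simple types: same adjoint degree, and the order on
    the bases is reversed when that degree is odd."""
    (a, m), (b, n) = x, y
    return m == n and (leq(a, b) if m % 2 == 0 else leq(b, a))

def contracts(x, y):
    """Generalized contraction x y -> 1 for two adjacent simple types."""
    (a, m), (b, n) = x, y
    return n == m + 1 and (leq(a, b) if m % 2 == 0 else leq(b, a))

def reduces_to(types, target):
    """Can the string of simple types be rewritten as the single simple
    type `target` using contractions only?"""
    types = tuple(types)

    @lru_cache(maxsize=None)
    def vanishes(i, j):
        # Can types[i:j] be contracted to the empty string 1?
        if i == j:
            return True
        return any(contracts(types[i], types[m])
                   and vanishes(i + 1, m) and vanishes(m + 1, j)
                   for m in range(i + 1, j))

    return any(simple_leq(types[k], target)
               and vanishes(0, k) and vanishes(k + 1, len(types))
               for k in range(len(types)))

# pi3 (pi3^r s1 o^l) o, a string that should reduce to s1 and hence to s.
example = [("pi3", 0), ("pi3", 1), ("s1", 0), ("o", -1), ("o", 0)]
print(reduces_to(example, ("s1", 0)), reduces_to(example, ("q", 0)))
```

Because only contractions are tried, the search always terminates, which is the point of the metatheorem for the case n = 1.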

3. Calculations with Types

It seems evident that, when people generate or analyze strings of words, they perform rapid mental calculations, albeit at a subconscious level. It is the contention of the categorial school of linguistics (Ajdukiewicz, Bar-Hillel, Curry, Moortgat, Oehrle, Morrill, Carpenter, Casadio, Steedman, Keenan, etc.) that these calculations are not performed on the words themselves, but on the types (a.k.a. categories) that have been assigned to the words in the mental dictionary. In my present view, these types are the elements of a pregroup, in first approximation a free pregroup.

We shall assign one or more (compound) types to words in the dictionary and proceed to check whether given strings of words are grammatical sentences by performing a calculation in the pregroup.


To get my point across, I confine attention here mainly to verbs and pronouns; but I also admit some plural nouns, because plurals may occur without determiners, which I do not wish to discuss here.

In the examples below, we only exhibit successful calculations. It seems to me that calculations are not performed in parallel, but that people abort calculations that lead nowhere and backtrack to start all over again. I do not see how parallel computations can fit into the stream of consciousness; however, if subconscious calculations can be shown to be performed in parallel, I may reconsider my opinion.

Let us look at some examples. Note that a dash indicates a Chomskyan trace, for comparison with the prevailing literature.

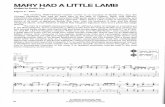

(1) He likes her.

(2) Does he like her?

(3) Whom does he like –?

where we postulate $\hat{o} \to o$.¹

A few comments may be in order. First, does can also have type $\pi_3^r s_1 i^\ell$ in emphatic statements. Second, I follow the late Inspector Morse in preferring whom to who in the accusative case. Third, note that $q^\ell q_1 \to q^\ell q \to 1$ and $\hat{o}^{\ell\ell} o^\ell \to \hat{o}^{\ell\ell} \hat{o}^\ell \to 1$, since $\hat{o} \to o$ implies $o^\ell \to \hat{o}^\ell$. Finally, note that, regarding example (2), the surface structure in (4a) is replaced by the deep structure (apologies to Chomsky) in (4b).

(4) a. $(q_1 i^\ell \pi_3^\ell)\,\pi_3\,(i\,o^\ell)\,o$
b. $q_1 [i^\ell [\pi_3^\ell \pi_3]\, i]\,[o^\ell o]$

I use parentheses to separate the types contributed by different words, there being technical difficulties in ensuring that the types always appear directly under the words to which they have been assigned. The square brackets here play a role similar to that played by parentheses in algebra and logic, for example, to distinguish $(a + b)c$ from $a + (bc)$ and $(p \wedge q) \vee r$ from $p \wedge (q \vee r)$.
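The linkages for examples (1) to (3) can be recomputed with a small sketch. The type assignments in `LEXICON` are assumptions reconstructed from the surrounding discussion, since the original figure is not reproduced here; `ohat` is an ASCII stand-in for $\hat{o}$, and all function names are hypothetical. The procedure is a greedy, stack-based one: each incoming simple type is contracted with the most recent unlinked one whenever possible. It is enough for these three sentences, but it is not a complete decision procedure in general.

```python
# Order postulates needed for these examples: q1 -> q and ohat -> o.
POSTULATES = {("q1", "q"), ("ohat", "o")}

def leq(a, b):
    """a -> b between basic types (reflexive-transitive closure)."""
    return a == b or any(x == a and leq(y, b) for x, y in POSTULATES)

def contracts(x, y):
    """Generalized contraction x y -> 1; (base, z) with z < 0 for left
    adjoints and z > 0 for right adjoints."""
    (a, m), (b, n) = x, y
    return n == m + 1 and (leq(a, b) if m % 2 == 0 else leq(b, a))

# Assumed lexicon (not taken verbatim from the source figure).
LEXICON = {
    "he":    [("pi3", 0)],
    "her":   [("o", 0)],
    "likes": [("pi3", 1), ("s1", 0), ("o", -1)],
    "does":  [("q1", 0), ("i", -1), ("pi3", -1)],
    "like":  [("i", 0), ("o", -1)],
    "whom":  [("q", 0), ("ohat", -2), ("q", -1)],
}

def linkages(words):
    """Return (links, remainder): links are index pairs of contracted simple
    types; remainder is what is left after greedy contraction."""
    types = [t for w in words for t in LEXICON[w]]
    stack, links = [], []
    for idx, t in enumerate(types):
        if stack and contracts(types[stack[-1]], t):
            links.append((stack.pop(), idx))
        else:
            stack.append(idx)
    return links, [types[i] for i in stack]

for sentence in (["he", "likes", "her"],
                 ["does", "he", "like", "her"],
                 ["whom", "does", "he", "like"]):
    print(" ".join(sentence), linkages(sentence))
```

In the third sentence the last link joins the double adjoint contributed by whom to the object slot of like, which is the contraction justified by $\hat{o} \to o$.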

1 We might have taken $\hat{o} = o$ here, but it is useful to distinguish these two types. Readers interested in the reason for this distinction will find it in the Postscript (section 10).


To display the two structures in (4) simultaneously, we have adopted the linkages² under the types, in place of square brackets, as easier on the eye. Actually, the right brackets are redundant; I could have just written $q_1 [i^\ell [\pi_3^\ell \pi_3\, i\, [o^\ell o$.

However, we shall retain left brackets only when a potential contraction must be blocked for successful calculation, as discussed in section 6. These left brackets play a role similar to the modalities of Moortgat (1996) and Morrill (1994), but here they have the mere status of punctuation marks and are not elements of the pregroup.

4. Indirect Sentences

The word say requires a sentential complement, say of type $\sigma$, hence its infinitive has type $i\,\sigma^\ell$ and its inflected forms have type $\pi_k^r s_j \sigma^\ell$. Among the complements we admit both direct and indirect statements and questions. Let me introduce a few new basic types:

$\sigma$ = sentential complement,
$\bar{s}_j$ = indirect statement in the j-th tense,
$\tilde{q}_j$ = indirect question in the j-th tense,

and we postulate $s \to \sigma$, $q \to \sigma$, $\bar{s}_j \to \bar{s} \to \sigma$, $\tilde{q}_j \to \tilde{q} \to \sigma$.

The notation $\bar{s}$ agrees with the standard X-bar theory (Jackendoff 1977), but we write $\tilde{q}$ rather than $\bar{q}$, because indirect questions are not formed from direct questions (with inversion) but from direct statements (without inversion). Thus, the complementizers that and whether have types $\bar{s}\,s^\ell$ and $\tilde{q}\,s^\ell$, respectively. Here are some examples:

(5) He says he saw her.

(6) Did he say he saw her?

(7) Whom did he say he saw –?

(8) Whom did he say that he saw –?

2 The linkages indicating (generalized) contractions may be considered as degenerate forms of the proofnets fashionable in Linear Logic, although they had previously been used by Zelig Harris (1966) in a different context, as was pointed out by Aravind Joshi.


(9) He knows whom he saw –.

The word whom, introducing an indirect question, here has type $\tilde{q}\,\hat{o}^{\ell\ell} s^\ell$.

On second thought, the two types of whom can be combined into one: $q\,\hat{o}^{\ell\ell} \sigma^\ell$.

Up to now, we have typed the accusative whom but not the nominative who. We are tempted to analyze:

(10) Who saw her?

But this type assignment will not account for (11).

(11) Who did he say saw her?

We therefore follow a different strategy and look at the pseudo-sentence (12) with $\pi_3 \to \hat{\pi}_3$ but $\hat{\pi}_3 \not\to \pi_3$.

(12) *Saw her he?

Perhaps an early form of English, like present-day German, might have allowed Saw her the man? We can now assign the type $q\,\hat{\pi}_3^{\ell\ell} q^\ell$ to who and analyze:

(13) Who saw her –?

(14) Who did he say saw her –?

The new type assignment explains why the following are ungrammatical:

(15) *Who did he say that saw her –?

(16) *Who did he say whether saw her –?


(17) *Who works and she rests?

5. Limitations on Short-term Memory

In 1956, the psychologist George A. Miller published an influential paper with the title "The magical number seven plus or minus two: Some limits on our capacity for processing information." The title almost says it all; but Miller elaborates by asserting that we can hold at most 7 ± 2 chunks of information in temporary storage. He does not give a precise definition of the word chunk, and I would like to explore the possibility that, in the present context, chunk = simple type. Let us look at a few examples.

(18) Whom did I say he saw?

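Beginning with the types of the first two words, we repeatedly reduce as far as possible and then append the type of the following word. Thus, using $q_2 \to q$, we may rewrite

$$(q\,\hat{o}^{\ell\ell} q^\ell)(q_2\, i^\ell \pi^\ell) \to q\,\hat{o}^{\ell\ell} i^\ell \pi^\ell,$$

reducing six chunks to four. Again, using $\pi_1 \to \pi$, we rewrite

$$q\,\hat{o}^{\ell\ell} i^\ell \pi^\ell\, \pi_1 \to q\,\hat{o}^{\ell\ell} i^\ell,$$

reducing five chunks to three, and once more $q\,\hat{o}^{\ell\ell} i^\ell\,(i\,\sigma^\ell)\,\pi_3 \to q\,\hat{o}^{\ell\ell} \sigma^\ell \pi_3$, but these four chunks cannot be reduced. Finally, we rewrite

$$q\,\hat{o}^{\ell\ell} \sigma^\ell \pi_3\,(\pi_3^r s_2\, o^\ell) \to q,$$

using $o^\ell \to \hat{o}^\ell$ (since $\hat{o} \to o$) and $s_2 \to s \to \sigma$, thus reducing seven chunks to one.

In fact, as long as we stick to the grammar developed so far, it seems difficult to exceed seven chunks in temporary storage. Let us enlarge our grammar somewhat by adopting the following new basic types: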

$\bar{\imath}$ = complete intransitive infinitive (with to),
$p_j$ = participle in the j-th tense,
$p$ = plural noun phrase,
$o'$ = indirect object.
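The word-by-word procedure applied to (18) above can be sketched as follows, counting the simple types held in temporary storage before and after each reduction. The lexicon is an assumption reconstructed from the discussion (the displayed calculations did not survive extraction); `ohat` and `sigma` are ASCII stand-ins for $\hat{o}$ and $\sigma$, and `reduce_fully` and `chunk_profile` are hypothetical names. "Reduce as far as possible" is read here as repeatedly contracting the leftmost contractible adjacent pair, and blocking brackets are ignored.

```python
# Order postulates used in (18).
POSTULATES = {("q2", "q"), ("pi1", "pi"), ("pi3", "pi"),
              ("s2", "s"), ("s", "sigma"), ("ohat", "o")}

def leq(a, b):
    """a -> b between basic types (reflexive-transitive closure)."""
    return a == b or any(x == a and leq(y, b) for x, y in POSTULATES)

def contracts(x, y):
    """Generalized contraction x y -> 1 for adjacent simple types (base, z)."""
    (a, m), (b, n) = x, y
    return n == m + 1 and (leq(a, b) if m % 2 == 0 else leq(b, a))

# Assumed type assignments for sentence (18).
LEXICON = {
    "whom": [("q", 0), ("ohat", -2), ("q", -1)],
    "did":  [("q2", 0), ("i", -1), ("pi", -1)],
    "I":    [("pi1", 0)],
    "say":  [("i", 0), ("sigma", -1)],
    "he":   [("pi3", 0)],
    "saw":  [("pi3", 1), ("s2", 0), ("o", -1)],
}

def reduce_fully(types):
    """Contract adjacent pairs, leftmost first, until none is left."""
    types = list(types)
    changed = True
    while changed:
        changed = False
        for i in range(len(types) - 1):
            if contracts(types[i], types[i + 1]):
                del types[i:i + 2]
                changed = True
                break
    return types

def chunk_profile(words):
    """For each word: (word, chunks before reduction, chunks after)."""
    store, profile = [], []
    for w in words:
        store = store + LEXICON[w]
        before = len(store)
        store = reduce_fully(store)
        profile.append((w, before, len(store)))
    return store, profile

store, profile = chunk_profile(["whom", "did", "I", "say", "he", "saw"])
for row in profile:
    print(row)
```

Under these assumptions the peak load is seven chunks, reached just before the final reduction to the single type q.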


Now consider (19), in which Miller's magical number seven is never exceeded:

(19) What did she tell you that he had asked me to give her –?

$$(q\,\hat{o}^{\ell\ell} q^\ell)(q_2\, i^\ell \pi^\ell)\,\pi_3\,(i\,\sigma^\ell o^\ell)\,o\,(\bar{s}\,s^\ell)\,\pi_3\,(\pi^r s_2\, p_2^\ell)(p_2\,\bar{\imath}^\ell o^\ell)\,o\,(\bar{\imath}\, i^\ell)(i\,\hat{o}^\ell o'^\ell)\,o' \to q$$

Here are successive stages in the calculation with different numbers of chunks in temporary storage:

However, it is quite possible to get more than seven chunks:

(20) What did I say you gave him –?

After having performed the indicated contractions and listening to the next two words, we find eight chunks in temporary storage:


(21)

An indirect question of type $\tilde{q}$ can be the subject of a sentence, hence we postulate $\tilde{q} \to \pi_3$. Now look at the awkward question in (22).

(22) Whom does what he drinks bother –?

Having processed the first three words and hearing the next two, we must briefly hold (23), with nine chunks in temporary storage.

(23)

Of course, most speakers would rephrase this question more pliably as whom does it bother what he drinks? I shall not analyze this sentence here.

With some effort, I can produce an example that exceeds Miller's upper limit of 7 + 2.

(24) I know people whom what he drinks bothers –.

Here the plural people of type p could be the object of know; in fact we must postulate $p \to o$. However, in the present situation, we do not want (25).

(25) I know people.

So, we block the contraction $o^\ell p \to 1$ with the help of a left square bracket. Instead, we perform the contraction $p\,p^r \to 1$ and calculate that the initial string of four words has type $s_1 \hat{o}^{\ell\ell} s^\ell$. Hearing the next three words (before bother), we must temporarily store the compound type (26), which has 10 chunks.

(26)

Again, most speakers would avoid this by saying instead: I know people whom it bothers what he drinks.


6. The Block Constraint

Modern linguists of the Chomskyan school have been much concerned with constraints on transformations, more recently called barriers to movement (Chomsky 1986). It is my impression that such constraints should not be thought of as restrictions on grammatical rules (in fact, traditional grammars don't mention them), but as obstructions to language processing. How these constraints are expressed will, of course, depend on how the grammar has been formulated. Our present approach employs neither transformations nor movement, but talks about adjoints and blocks. In the following section, I will speculate on the pros and cons of formulating constraints in terms of the present machinery.

In Lambek 2000 I had proposed a constraint, best expressed in terms of the hypothetical identification of our simple types with Miller's chunks:

I. Our short-term memory cannot hold two consecutive chunks, both of which are blocked.

In other words, we cannot process $a[b[c$ when $ab \to 1$ and $bc \to 1$.³ This will take care of one instance of what linguists call the coordinate structure constraint, other cases of which will be discussed in section 7. First look at (27) as a mathematician would write it, to indicate that the object of loves is not you but the constituent you and me. In (28), these parentheses are reflected in the square brackets occurring in the corresponding string of types.

(27) She loves (you and me).

(28) $\pi_3\,(\pi_3^r s_1 o^\ell)\,[o\,(o^r o\,o^\ell)\,o]$

Leaving out the redundant right bracket, we obtain:

(29) She loves you and me.

Given that she loves you is not a constituent of this sentence, the contraction $o^\ell o \to 1$ before you is blocked. (I will not discuss here why many people say *She loves you and I.) We also have the question (30) but will not accept (31).

(30) Does she love you and me?

3 When $ab \to 1$ and $bc \to 1$, there is an ambiguity in how to analyze the string of types containing abc. Anne Preller has observed that this is the only way in which a string of types can be interpreted in syntactically different ways. The constraint here ensures that not both interpretations should be ruled out.


(31) *Whom does she love you and –?

Although this is of type q, it is ruled out by Constraint I. (The first square bracket is needed to prevent the question whom does she love? from appearing as a constituent.)

There is a problem, however. Constraint I rules out not only (32), as it should, but also the grammatical (33), which it should not, because both calculations give rise to $q\,\hat{o}^{\ell\ell}[o^\ell[p$ at the penultimate stage.

(32) *What will he paint pictures or –?

(33) What will he paint pictures of –?

However, we can avoid the first block by invoking $o^\ell \to p^\ell$ (since $p \to o$) after hearing the word paint. Now $p^\ell \not\to \hat{o}^\ell$ (since $\hat{o} \not\to p$), so the first block is no longer needed. Thus (33) gives rise to (34), but (32) gives rise to (35) since $p^\ell o \not\to 1$.

(34)

(35)

7. Coordinate Structures

In section 6 I assigned the type $o^r o\,o^\ell$ to the conjunctions and and or. Actually, they can have polymorphic type $x^r x\,x^\ell$ for many, but not all, values of x, combining two expressions of type x to form another such. In addition to obvious values of x such as i, s, p, and so on, (36) shows some other permitted values of x accompanied by the corresponding values of $x^r$ ($x^\ell$ being similar).

(36) a. I saw and heard them. ($x = \pi^r s_2\, o^\ell$, $x^r = o\,s_2^r \pi^{rr}$)
b. Saw you and heard them. ($x = \pi^r s_2$, $x^r = s_2^r \pi^{rr}$)
c. Whom did I see and hear –? ($x = i\,o^\ell$, $x^r = o\,i^r$)
d. Whom did you see and I hear –? ($x = \pi\,i\,o^\ell$, $x^r = o\,i^r \pi^r$)
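The adjoints listed in (36) follow mechanically from the inductive rules $(ab)^\ell = b^\ell a^\ell$ and $(ab)^r = b^r a^r$. The sketch below recomputes them; it assumes the encoding of a simple type as `(base, z)` with `z < 0` for left adjoints and `z > 0` for right adjoints, and the function names (`left_adjoint`, `right_adjoint`, `conjunction`, `show`) are purely illustrative.

```python
def left_adjoint(t):
    """(a b ... c)^l = c^l ... b^l a^l: reverse the string, lower each degree."""
    return [(b, z - 1) for b, z in reversed(t)]

def right_adjoint(t):
    """(a b ... c)^r = c^r ... b^r a^r: reverse the string, raise each degree."""
    return [(b, z + 1) for b, z in reversed(t)]

def conjunction(x):
    """Polymorphic type x^r x x^l of 'and' / 'or' for a given compound type x."""
    return right_adjoint(x) + list(x) + left_adjoint(x)

def show(t):
    """Render a compound type, e.g. [('o', -1)] as 'o^l'."""
    def one(b, z):
        if z == 0:
            return b
        return b + "^" + ("l" * -z if z < 0 else "r" * z)
    return " ".join(one(b, z) for b, z in t)

x_a = [("pi", 1), ("s2", 0), ("o", -1)]   # pi^r s2 o^l, as in (36a)
x_c = [("i", 0), ("o", -1)]               # i o^l, as in (36c)
x_d = [("pi", 0), ("i", 0), ("o", -1)]    # pi i o^l, as in (36d)

print(show(right_adjoint(x_a)))
print(show(left_adjoint(x_a)))
print(show(right_adjoint(x_c)))
print(show(right_adjoint(x_d)))
print(show(conjunction([("o", 0)])))
```

Note that the right adjoint of $o^\ell$ is $o$ itself, which is why plain $o$ heads each $x^r$ in (36a), (36c), and (36d).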

However, there are many forbidden values of x. For example, $x = \pi_3$ is ruled out, because he and she has type $\pi_2$.⁴ Also $x = \pi\,i$ is ruled out, because

4 For or the story is even more complicated. As noticed by Fisher (1986), the American Heritage Dictionary of the English Language manages to formulate a rule in a statement that breaks it: "When the elements [connected by or] do not agree in number, or when one or more of them is a personal pronoun, the verb is governed by the element which is nearer."


one does not say *Did he come and I see her? Hence, without invoking any constraint, we know that *Whom did he come and I see –? is not acceptable.

Constraint I of section 6 applies only when x = o. The usual coordinate structure constraint says no element can be removed from a coordinate structure. Here I formulate this tentatively as follows:

II. When x = i, s, p, ..., one cannot process $x^\ell[x\,x^r x\,x^\ell$ following a double adjoint.

Thus, we cannot process

(37) *Whom did you sleep and hear –?

where we arrive at:

(38)

at the penultimate stage. Nor can we process (39), where one arrives at an intermediate stage at (40).

(39) *People whom he came and she saw –

(40)

But this is ruled out by Constraint II.

Our formulation of the coordinate structure constraint will have to be modified to account for such constructions as both ... and ..., either ... or ..., and neither ... nor ..., where the words both, either, and neither act like left brackets but are actual English words. I shall refrain from discussing this problem here.

Finally, consider this example:

(41) I saw photos of him and (paintings of her).

If we try to turn this into a wh-question as in (42), we are led to the intermediate stage shown in (43).

(42) *Whom did I see photos of him (and paintings of –)?

(43) $q\,\hat{o}^{\ell\ell}[o^\ell[p$

So, Constraint I applies. However, we can circumvent the first square bracket by applying $o^\ell \to p^\ell$, since $p^\ell \not\to \hat{o}^\ell$. To proceed, we would have to reassign the type $p^r p\,p^\ell$ to and. But then we obtain $q\,\hat{o}^{\ell\ell} p^\ell[p\,p^r p\,p^\ell$, which violates Constraint II.


8. The Double Adjoint Constraint

Let us look at the question in (44) and try to form a wh-question (45) from this.

(44) Should I say who saw her –?

(45) *Whom should I say who saw – –?

Note the two consecutive traces; but this will not serve as an explanation (see below) of why this apparently grammatical sentence is not acceptable. At the penultimate stage of the calculation, we obtain (46).

(46) $q\,\hat{o}^{\ell\ell} \sigma^\ell\, \tilde{q}\,\hat{\pi}_3^{\ell\ell} q^\ell \to q\,\hat{o}^{\ell\ell} \hat{\pi}_3^{\ell\ell} q^\ell$

So, I will conjecture the following constraint:

III. We cannot process two consecutive double adjoints.
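As a rough illustration, Constraint III can be phrased as a check on a string of simple types. The sketch assumes the encoding `(base, z)` with `z = -2` for a double left adjoint and `z = +2` for a double right adjoint, reads "double adjoint" as any simple type with |z| of at least 2, and uses the hypothetical names `violates_constraint_iii`, `ohat`, and `pihat3`. It is a reading of the constraint, not a formulation taken from the paper.

```python
def violates_constraint_iii(types):
    """True if two neighbouring simple types are both double adjoints."""
    return any(abs(z1) >= 2 and abs(z2) >= 2
               for (_, z1), (_, z2) in zip(types, types[1:]))

# Right-hand side of (46), as reconstructed: q ohat^ll pihat3^ll q^l.
stage_46 = [("q", 0), ("ohat", -2), ("pihat3", -2), ("q", -1)]

# The type q ohat^ll q^l of 'whom' on its own holds a single double adjoint.
whom = [("q", 0), ("ohat", -2), ("q", -1)]

print(violates_constraint_iii(stage_46))  # two double adjoints side by side
print(violates_constraint_iii(whom))
```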

Before passing to other examples illustrating Constraint III, let us check that two consecutive traces are permitted. We look at the passive construction in (47), recalling that $o^\ell \to \hat{o}^\ell$.

(47) He was killed –.

This may be turned into a yes/no-question:

(48) Was he killed –?

However, according to the strategy proposed in section 4, we should consider the pseudo-question in (49), where $\hat{\pi}_3 \not\to \pi_3$, before introducing the wh-question in (50).

(49) *Was killed – (the man)?

(50) Who did I say was killed – –?


Here $\hat{\pi}_3^{\ell\ell}$ and $\hat{o}^{\ell\ell}$ never occur in juxtaposition, so Constraint III is not violated, although two consecutive traces do appear.

According to the same strategy, we should invoke the pseudo-question in (51) to justify the noun phrase in (52).

(51) *Like him people?

(52) people who like him

Now look at the unacceptable (53).

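(53) *Whom did I see people who like –?

This violates Constraint I, but the double block can be avoided if we replace $o^\ell$ by $p^\ell$. Then, at the penultimate stage, we arrive at (54) and run against Constraint III.

(54) $q\,\hat{o}^{\ell\ell} p^\ell p\,p^r p\,\hat{\pi}_2^{\ell\ell} q^\ell \to q\,\hat{o}^{\ell\ell} \hat{\pi}_2^{\ell\ell} q^\ell$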

Let us look at two more examples. First consider (55).

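(55) What does she like reading books about –?

Here we obtain (56) at the last stage, which is ruled out by Constraint I.

(56) $q\,\hat{o}^{\ell\ell}[o^\ell[p\,p^r p\,o^\ell$

Circumventing this by making use of $o^\ell \to p^\ell$, we obtain (57).

(57)

Second, consider (58).

(58) *Whom does she like reading books which discuss –?

$$(q\,\hat{o}^{\ell\ell} q^\ell)(q_1 i^\ell \pi_3^\ell)\,\pi_3\,(i\,p_1^\ell)(p_1 o^\ell)\,p\,(p^r p\,\hat{\pi}_2^{\ell\ell} q^\ell)(q_1 \hat{\pi}_2^\ell o^\ell)$$

Here we obtain (59) at the penultimate stage, to which Constraint I applies.

(59) $q\,\hat{o}^{\ell\ell}[o^\ell[p\,p^r p\,\hat{\pi}_2^{\ell\ell} q^\ell$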


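Again, we can circumvent this by using $o^\ell \to p^\ell$ and obtain (60).

(60) $q\,\hat{o}^{\ell\ell} p^\ell p\,p^r p\,\hat{\pi}_2^{\ell\ell} q^\ell \to q\,\hat{o}^{\ell\ell} \hat{\pi}_2^{\ell\ell} q^\ell$

But now Constraint III applies.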

9. Concluding Remarks

I have explored here a variant of categorial grammar, in which types are assigned to each word in the mental dictionary and grammaticality of sentences is checked by a calculation in an algebraic (or logical) system. Here the types are elements of a pregroup, a partially ordered monoid in which each element has a left and a right adjoint.

Confining attention to a minuscule portion of English grammar, we work in the free pregroup generated by a partially ordered set of basic types. In the free pregroup, types are constructed as strings of simple types, which are obtained from the basic types by forming repeated adjoints.

We conjecture that the simple types can be identified with Miller's chunks of information and have verified that usually not more than seven simple types need to be held in the short-term memory, although some examples require eight and some more far-fetched examples nine or ten.

I believe that algebraic presentations of linguistic information should be essentially associative and noncommutative. Any occurrence of commutativity or any lack of associativity should be explicitly indicated. To license commutativity locally, we assign multiple types to individual words, particularly to verbs. For example, the Latin amat should probably be given six types to allow for all the permutations of puer puellam amat, puer amat puellam, and so forth, namely the types $(o^r)(\pi_3^r)s_1$, $(\pi_3^r)s_1(o^\ell)$, and so on. (The parentheses here indicate that both object and explicit subject can be omitted.) To block associativity we have introduced square brackets, but take advantage of the fact that left brackets suffice for our purpose.

I have explored three formal constraints for acceptability of sentences, hoping that these will replace many of the constraints (on transformations) found in the literature: some instances of the coordinate structure constraint and some of the so-called Ross island constraints. I believe that such constraints should not be thought of as rules of grammar, but as restrictions on our ability to process information. More examples should be investigated to see whether our three constraints always apply and whether they are sufficient. One may also ask whether they can be unified under a single principle.

Finally, it should be admitted that free pregroups are not likely to explain all of syntax: not all grammatical rules can be stored in the mental dictionary in the form of type assignments. Some additional grammatical rules seem to be required, thus rendering the pregroup no longer free.

For example, the accusative relative pronoun whom/which/that of type $p^r p\,\hat{o}^{\ell\ell} s^\ell$ can be omitted, for instance in the noun phrase in (61).


(61) people police control

Instead of assigning a type to the empty string, we may adopt a grammatical rule: $p\,s\,o^\ell \to p$. Although this rule is frequently invoked in English, it can lead to sentences which are difficult to parse. Let the reader try her hand at Police police police police police. Not only is this an acceptable grammatical sentence, it can be parsed in two distinct ways, one of which even gives rise to a tautology.⁵

This article, like most of my linguistic research, has been confined to syntax, with proper attention to morphology, but ignores semantics and, a fortiori, pragmatics. Whenever I give a talk on my present approach to grammar, someone in the audience will raise the question "but what about semantics?" I usually reply that I prefer Capulet to Montague semantics; but this joke usually falls flat.⁶

The original syntactic calculus, or rather its proof theory, had a promising link to Montague semantics. As was pointed out by van Benthem (1990) and elaborated impressively by a number of people, as in the books by Morrill (1994) and Carpenter (1997), one can exploit the Curry-Howard isomorphism to associate a lambda term to each deduction. This assumes implicitly that one has first introduced Gentzen's structural rules and then converted the syntactic calculus into Curry's semantic calculus, namely positive intuitionistic propositional logic.

Unfortunately, the logic of pregroups, namely compact bilinear logic, is not a conservative extension of the syntactic calculus. Yet, van Benthem's program can still be carried out in a less deterministic way. For example, a derivation a → bcd may be interpreted as a function from a to b cd or as a function from a to (b c)d.⁷

10. Postscript

Pregroup grammars were introduced by me only recently at a conference in Nancy in 1996. What I had found most persuasive for adopting this new variant of categorial grammar was the observation that double adjoints were useful for treating Chomskyan traces in modern European languages. Later they also turned out to help with the analysis of clitic pronouns in Romance languages (in collaboration with Daniele Bargelli [2001] and Claudia Casadio [2001]). So far, no evidence for double adjoints has been found in Latin, Arabic, and Japanese. I am tempted to conjecture that no language requires triple adjoints, if only for reasons of unmanageable complexity.

As of now, we are only at the beginning of a long-range project and only a few of the problems of interest to modern linguists have been investigated by the

5 Hint: police can be a verb meaning control.
6 Except in Verona, where I recently stayed at Hotel Capuleti.
7 An exposition of the interplay between these various systems may be found in Casadio and Lambek 2002.


pregroup approach. Not wishing to assume that readers of Syntax are familiar with the published and current work on pregroup grammars, I have considered a tiny representative portion of English here. The main purpose of this paper, however, was to show that the algebraic machinery of pregroups is particularly well suited for investigating the computational aspect of language processing. It seems that linguistic performance breaks down as soon as mental calculations become too complicated. In particular, I believe that some so-called constraints can be explained by saying that a certain degree of complexity cannot be exceeded. Although I have tried to express some of these constraints in algebraic terms, I still do not know how exactly the complexity should be measured.

Grammars based on the original syntactic calculus (Lambek 1958) were conjectured to be context-free by Chomsky early on, but this conjecture was only proved many years later by Pentus (1993). As far as I know, calculations in such grammars have not yet been shown capable of being carried out in polynomial time.

According to Buszkowski (2001), grammars based on free pregroups are also context-free, but of much smaller complexity. Unfortunately, it is known that some languages (e.g., Dutch) cannot be described completely by context-free grammars. The known proofs of this fact imitate a result in formal language theory which asserts that the intersection of two context-free languages need not be context-free. We could get around this objection by looking at lattice pregroups, that is, pregroups with an additional operation $\wedge$ (usually called meet) satisfying $c \to a \wedge b$ if and only if $c \to a$ and $c \to b$. So far, I am not aware of a decision procedure for free lattice pregroups.

When I first proposed pregroup grammars at the Nancy conference, I had assigned to the interrogative pronoun whom the type $q\,o^{\ell\ell} q^\ell$. I might have adopted the same type assignment here and avoided much discussion, irrelevant to the aim of the present article. Having opted for $q\,\hat{o}^{\ell\ell} q^\ell$ instead, I now find it necessary to satisfy the referees' curiosity about the $\hat{o}$ and am led into an unintended digression.

Michael Moortgat, who had expanded the syntactic calculus into his powerful multimodal type logic (Moortgat 1996), was present at the Nancy meeting. He asked the pertinent question: what about Whom did you see yesterday? To answer his question I was ultimately led to revise my type assignments as follows:

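(62) a. whom: $q \hat{o}^{\ell\ell} q^{\ell}$
     b. yesterday: $a$
     c. seen: $p_2 o^{\ell}$, $p_2 a^{\ell} o^{\ell}$, $p_2 \hat{o}^{\ell} a^{\ell}$

where $\hat{o} \to o$ but $o \not\to \hat{o}$. Since $\hat{o} \to o$ implies $\hat{o}^{\ell\ell} \to o^{\ell\ell}$, the contraction $\hat{o}^{\ell\ell} o^{\ell} \to 1$ is still justified, and we obtain (63).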

(63) Whom has he seen yesterday?

Note, however, that (64) is excluded, since the contraction $\hat{o}^{\ell} o \to 1$ is not justified.

(64) *Has he seen yesterday her?

If it were justified, then $o = 1\,o \to \hat{o}\,\hat{o}^{\ell} o \to \hat{o}$, contrary to the stipulation that $o \not\to \hat{o}$. However, (65) works out as expected, as long as seen is given the type $p_2 a^{\ell} o^{\ell}$.

(65) Has he seen her yesterday?
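
The calculations behind (63)–(65) can be checked mechanically. The following is a minimal sketch, not the author's implementation. It reads the types in (62) as whom: q ô^ll q^l, yesterday: a, and seen: p2 o^l, p2 a^l o^l, or p2 ô^l a^l, with ô → o but not o → ô. It uses the usual generalized contraction of free pregroups (a^(n) b^(n+1) → 1 if a → b and n is even, or b → a and n is odd) and relies on the fact that reducing to a simple type needs contractions only. The names `o_hat`, `reduces_to`, and `grammatical` are hypothetical, and typing the unit has he as q p2^l is a simplifying assumption made only for this illustration.

```python
from functools import lru_cache

# A simple type is (basic type, adjoint index):
# x is (x, 0), its left adjoint x^l is (x, -1), and x^ll is (x, -2).
L, LL = -1, -2

# Strict part of the order on basic types: o_hat -> o, but not o -> o_hat.
ORDER = {("o_hat", "o")}

def leq(x, y):
    """x -> y among basic types (reflexive; no transitive closure is needed here)."""
    return x == y or (x, y) in ORDER

def contracts(left, right):
    """Generalized contraction a^(n) b^(n+1) -> 1."""
    (a, m), (b, n) = left, right
    if n != m + 1:
        return False
    return leq(a, b) if m % 2 == 0 else leq(b, a)

def reduces_to(types, target):
    """Search for a sequence of contractions leaving one plain type below target."""
    @lru_cache(maxsize=None)
    def go(ts):
        if len(ts) == 1:
            base, adj = ts[0]
            return adj == 0 and leq(base, target)
        return any(
            contracts(ts[i], ts[i + 1]) and go(ts[:i] + ts[i + 2:])
            for i in range(len(ts) - 1)
        )
    return go(tuple(types))

WHOM = [("q", 0), ("o_hat", LL), ("q", L)]
HAS_HE = [("q", 0), ("p2", L)]  # assumption: "has he" typed as one unit
SEEN = [
    [("p2", 0), ("o", L)],
    [("p2", 0), ("a", L), ("o", L)],
    [("p2", 0), ("o_hat", L), ("a", L)],
]
YESTERDAY = [("a", 0)]
HER = [("o", 0)]

def grammatical(before, after):
    """True if some listed type of 'seen' lets the string reduce to q."""
    return any(reduces_to(before + seen + after, "q") for seen in SEEN)

print(grammatical(WHOM + HAS_HE, YESTERDAY))  # (63) -> True
print(grammatical(HAS_HE, YESTERDAY + HER))   # (64) -> False
print(grammatical(HAS_HE, HER + YESTERDAY))   # (65) -> True
```

In this sketch (64) fails for every type of seen. With p2 ô^l a^l the string gets as far as q ô^l o, and the last contraction would require o → ô.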

Having answered one question, I am now led to consider another, which really falls outside the scope of this paper: Does the dictionary have to list all three types of seen?

In fact, seen is only listed in the dictionary at all because it is an irregular past participle of the transitive verb see, whose listed type would be $i o^{\ell}$. To derive other types from listed types there would be certain metarules. One such metarule would ensure that any transitive verb also has type $i a^{\ell} o^{\ell}$, to allow for optional adverbial complements. A second metarule would allow a potential interchange between object and adverb by assigning the new type $i \hat{o}^{\ell} a^{\ell}$, the hat guarding against unwanted contractions. A third metarule would derive the type of the past participle from that of the infinitive, thus yielding the types $p_2 o^{\ell}$, $p_2 a^{\ell} o^{\ell}$, and $p_2 \hat{o}^{\ell} a^{\ell}$ for seen. Much work still has to be done to distinguish between different kinds of adverbial complements and prepositional phrases, as in whom has he seen yesterday in the shopping center?

While checking the final version of this paper, I received a surprising communication from Anne Preller that may deprive sections 6–8 of their intended significance. She showed that, by clever type assignments, the constraints can be completely avoided. For example, nonsentences such as *Whom did you sleep and hear ? then actually fail to be grammatical and therefore no restrictions on our ability to process information need be invoked.
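
Returning to the three metarules proposed above for see and seen, here is a minimal sketch of how they might be mechanized. It assumes the listed type of see is i o^l and represents a simple type as a pair (basic type, adjoint index). The function names and the ASCII spelling `o_hat` for the hatted object type are hypothetical. Nothing here is claimed to be the formulation the metarules would finally take.

```python
L = -1  # adjoint index of a left adjoint; 0 marks a plain basic type

def with_adverb(verb):
    """Metarule 1 (sketch): i o^l  ->  i a^l o^l."""
    head, obj = verb
    return [head, ("a", L), obj]

def swap_object_and_adverb(verb):
    """Metarule 2 (sketch): i a^l o^l  ->  i o_hat^l a^l."""
    head, adverb, (obj, adj) = verb
    return [head, (obj + "_hat", adj), adverb]

def past_participle(verb):
    """Metarule 3 (sketch): replace the infinitive head i by p2."""
    (_, adj), *rest = verb
    return [("p2", adj), *rest]

def show(verb):
    """Render a type, writing one ^l per left adjoint."""
    return " ".join(base + "^l" * -adj for base, adj in verb)

see = [("i", 0), ("o", L)]  # the single listed type
infinitives = [see, with_adverb(see), swap_object_and_adverb(with_adverb(see))]
seen_types = [show(past_participle(v)) for v in infinitives]
print(seen_types)  # ['p2 o^l', 'p2 a^l o^l', 'p2 o_hat^l a^l']
```

Under these assumptions the dictionary would need to list only i o^l for see, together with the irregular form seen. The three participle types are then derived.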

References

BARGELLI, D. & J. LAMBEK. 2001. An algebraic approach to French sentence structure. In Logical aspects of computational linguistics, ed. P. de Groote, G. Morrill & C. Retoré, 62–78. Berlin: Springer.

BARR, M. 1999. *-autonomous categories: Once more around the track. Theory and Applications of Categories 6:5–24.

BUSZKOWSKI, W. 2001. Lambek grammars based on pregroups. In Logical aspects of computational linguistics, ed. P. de Groote, G. Morrill & C. Retoré, 95–109. Berlin: Springer.

BUSZKOWSKI, W. 2002. Cut elimination for the Lambek calculus with adjoints. In New perspectives in logical and formal linguistics, Proceedings of the fifth Rome Workshop, ed. V. M. Abrusci & C. Casadio, 85–93. Rome: Bulzoni Editore.

CARPENTER, B. 1997. Type-logical semantics. Cambridge, Mass.: MIT Press.

CASADIO, C. 2002. Logic for grammar: Developments in linear logic and formal linguistics. Rome: Bulzoni Editore.

CASADIO, C. & J. LAMBEK. 2001. An algebraic analysis of clitic pronouns in Italian. In Logical aspects of computational linguistics, ed. P. de Groote, G. Morrill & C. Retoré, 95–109. Berlin: Springer.

CASADIO, C. & J. LAMBEK. 2002. A tale of four grammars. Studia Logica 71:315–329.

CHOMSKY, N. 1986. Barriers. Cambridge, Mass.: MIT Press.

CURRY, H. B., R. FEYS & W. CRAIG. 1958. Combinatory logic. Amsterdam: North-Holland.

FISHER, J. C. 1986. Do as I say, not as I do. American Mathematical Monthly 93:664.

HARRIS, Z. S. 1966. A cyclic cancellation automaton for sentence well-formedness. International Computation Centre Bulletin 5:69–94.

JACKENDOFF, R. 1977. X-bar syntax: A study of phrase structure. Cambridge, Mass.: MIT Press.

LAMBEK, J. 1958. The mathematics of sentence structure. American Mathematical Monthly 65:154–170.

LAMBEK, J. 1999. Deductive systems and categories in linguistics. In Logic, Language and Reasoning, Essays in honour of Dov Gabbay, ed. H. J. Ohlbach & U. Reyle, 279–294. Dordrecht: Kluwer.

LAMBEK, J. 1999. Type grammars revisited. In Logical Aspects of Computational Linguistics, ed. A. Lecomte, F. Lamarche & G. Perrier, 1–27. Berlin: Springer.

LAMBEK, J. 2000. Pregroups: A new algebraic approach to sentence structure. In Recent topics in mathematical and computational linguistics, ed. C. Martin-Vide & G. Paun, 182–195. Bucharest: Editura Academici Romane.

LAMBEK, J. 2001. Type grammars as pregroups. Grammars 4:21–39.

MCCAWLEY, J. D. 1988. The syntactic phenomena of English. Chicago: University of Chicago Press.

MILLER, G. A. 1956. The magical number seven plus or minus two: Some limits on our capacity for processing information. Psychological Review 63:81–97.

MOORTGAT, M. 1988. Categorial investigations: Logical and linguistic aspects of the Lambek Calculus. Dordrecht: Foris.

MOORTGAT, M. 1996. Multimodal linguistic inference. Journal of Logic, Language, and Information 5:349–385.

MOORTGAT, M. 1997. Categorial type logics. In Handbook of Logic and Language, ed. J. van Benthem & A. ter Meulen, 93–177. Amsterdam: Elsevier.

MORRILL, G. V. 1994. Type Logical Grammar: Categorial logic of signs. Dordrecht: Kluwer.

PENTUS, M. 1993. Lambek grammars are context free. Proceedings of the eighth LICS Conference, Montreal 1993, 429–433.

O'GRADY, W. & M. DOBROWOLSKY. 1996. Contemporary linguistic analysis: An introduction. 3rd edn. Toronto: Copp Clark Ltd.

VAN BENTHEM, J. 1990. The semantics of variety in categorial grammar. In Categorial Grammar, ed. W. Buszkowski, W. Marciszewski & J. van Benthem, 37–55. Amsterdam: John Benjamins.

J. Lambek
McGill University
Department of Mathematics and Statistics
805 Sherbrooke St. W
Montreal, QC H3A 2K6
Canada

Blackwell Publishing Ltd, 2004